|

ITK

4.13.0

Insight Segmentation and Registration Toolkit

|

A common task in image analysis is to compare how similar two images are. This comparison may be limited to a particular region of each image. Image Similarity Metrics are methods that produce a quantitative evaluation of the similarity between two images or two image regions.

These techniques are used as a basis for registration methods because they provide the information that indicates when the registration process is going in the right direction.

A large number of Image Similarity Metrics have been proposed in the medical imaging and computer vision communities. There is no single right image similarity metric, but rather a set of metrics that are appropriate for particular applications. Metrics fit very well the notion of tools in a toolkit: you need a set of them because none can do the job of the others.

The following table presents a comparison between image similarity metrics. This is by no means an exhaustive comparison, but it will at least provide some guidance as to which metric may be appropriate for particular problems.

Metrics are probably the most critical element of a registration problem. The metric defines what the goal of the process is: it measures how well the Target object is matched by the Reference object after the transform has been applied to it. The metric should be selected according to the types of objects to be registered and the expected kind of misalignment. Some metrics have a rather large capture region, which means that the optimizer will be able to find its way to a maximum even if the misalignment is large. Typically, large capture regions are associated with low precision for the maximum. Other metrics can provide high precision for the final registration, but usually need to be initialized quite close to the optimal value.
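The role of the metric as the quantity that scores each candidate transform can be sketched in one dimension. This is only an illustration under stated assumptions: the mean squared difference is used as a simple stand-in metric, an exhaustive search over integer shifts stands in for the optimizer, and the names `mean_squared_difference` and `best_shift` are hypothetical helpers, not part of the toolkit. Because this metric measures dissimilarity, the best match is a minimum rather than a maximum.

```python
def mean_squared_difference(fixed, moving, shift):
    """Mean squared difference over the overlap after shifting `moving` by `shift` samples."""
    total = 0.0
    count = 0
    for i, f in enumerate(fixed):
        j = i - shift  # sample of `moving` that lands on fixed[i] after the shift
        if 0 <= j < len(moving):
            total += (f - moving[j]) ** 2
            count += 1
    if count == 0:
        raise ValueError("the shifted signals do not overlap")
    return total / count


def best_shift(fixed, moving, candidates):
    """Exhaustive search: return the candidate shift with the lowest metric value."""
    return min(candidates, key=lambda s: mean_squared_difference(fixed, moving, s))


# `moving` is `fixed` displaced two samples to the left.
fixed = [0, 0, 1, 4, 9, 4, 1, 0, 0, 0]
moving = [1, 4, 9, 4, 1, 0, 0, 0, 0, 0]

recovered = best_shift(fixed, moving, range(-4, 5))
```

In this toy case the search recovers the displacement of two samples, at which the metric value is zero.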

Unfortunately, there are no clear rules about how to select a metric other than trying some of them under different conditions. In some cases it can be an advantage to use a particular metric to get an initial approximation of the transformation, and then switch to another, more sensitive metric to achieve better precision in the final result.

Metrics depend on the objects they compare. The toolkit currently offers Image To Image and PointSet to Image metrics as follows:

Normalized Correlation. This metric allows registration of objects whose intensity values are related by a linear transformation.

Pattern Intensity. Squared differences between intensity values are transformed by a function of type $\frac{1}{1+x}$ and summed up. This metric has the advantage of increasing simultaneously when more samples are available and when intensity values are close.
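The claim that a correlation-based metric tolerates linearly related intensities can be checked with a short sketch. It assumes the mean-subtracted form of normalized correlation (the Pearson coefficient); the function name `normalized_correlation` and the sample values are hypothetical and do not reproduce the toolkit's implementation.

```python
import math


def normalized_correlation(a, b):
    """Mean-subtracted normalized correlation of two equal-length intensity lists."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    # Cross-correlation of the centered values.
    numerator = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    # Square root of the product of both autocorrelations.
    denominator = math.sqrt(
        sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b)
    )
    if denominator == 0.0:
        raise ValueError("a constant input has no defined correlation")
    return numerator / denominator


intensities = [1.0, 2.0, 4.0, 7.0, 3.0]
# Same structure, different intensity scale and offset.
rescaled = [2.0 * v + 10.0 for v in intensities]
# Inverted contrast.
inverted = [-3.0 * v + 5.0 for v in intensities]

same = normalized_correlation(intensities, rescaled)
opposite = normalized_correlation(intensities, inverted)
```

Up to rounding, `same` is 1 and `opposite` is -1: the magnitude of the score is unaffected by the scale and offset of the intensities, and only the sign of the scale factor shows through.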

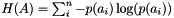

. Its entropy  is defined by

is defined by  where

where  are the probabilities of the values in the set. Entropy can be interpreted as a measure of the mean uncertainty reduction that is obtained when one of the particular values is found during sampling. Given two sets

are the probabilities of the values in the set. Entropy can be interpreted as a measure of the mean uncertainty reduction that is obtained when one of the particular values is found during sampling. Given two sets  and

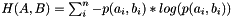

and  its joint entropy is given by the joint probabilities

its joint entropy is given by the joint probabilities  as

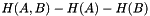

as  . Mutual information is obtained by subtracting the entropy of both sets from the joint entropy, as :

. Mutual information is obtained by subtracting the entropy of both sets from the joint entropy, as :  , and indicates how much uncertainty about one set is reduced by the knowledge of the second set. Mutual information is the metric of choice when image from different modalities need to be registered.

, and indicates how much uncertainty about one set is reduced by the knowledge of the second set. Mutual information is the metric of choice when image from different modalities need to be registered.  1.8.5

1.8.5
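As a minimal sketch of the entropy-based definition, mutual information can be estimated from two equal-length lists of discrete intensity values using $I(A,B) = H(A) + H(B) - H(A,B)$. The probabilities are assumed to be plain relative frequencies of the observed values and value pairs; the function names are hypothetical, and practical implementations usually rely on binned histograms or density estimates instead.

```python
import math
from collections import Counter


def entropy(probabilities):
    """Shannon entropy (natural logarithm) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probabilities if p > 0.0)


def mutual_information(a, b):
    """Estimate I(A,B) = H(A) + H(B) - H(A,B) from paired samples."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(a)
    # Marginal and joint probabilities as relative frequencies.
    p_a = [count / n for count in Counter(a).values()]
    p_b = [count / n for count in Counter(b).values()]
    p_ab = [count / n for count in Counter(zip(a, b)).values()]
    return entropy(p_a) + entropy(p_b) - entropy(p_ab)


first = [0, 0, 1, 1]
# Different intensity values, but fully determined by `first`.
relabeled = [5, 5, 9, 9]
# Statistically independent of `first` in this sample.
unrelated = [0, 1, 0, 1]

mi_relabeled = mutual_information(first, relabeled)
mi_unrelated = mutual_information(first, unrelated)
```

Here `mi_relabeled` equals the entropy of `first` (log 2), even though the two lists share no intensity values, while `mi_unrelated` is zero up to rounding. This dependence on co-occurrence statistics rather than on the intensity values themselves is what makes the measure suited to images from different modalities.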