Proposals:Condor

= Introduction =

Condor is an open source distributed computing software framework. It can be used to manage workload on a dedicated cluster of computers, and/or to farm out work to idle desktop computers. Condor is a cross-platform system that can run on Unix and Windows operating systems. It is a complex and flexible system that can execute jobs in serial and parallel mode; for parallel jobs, it supports the MPI standard. This wiki page documents our experience working with Condor.

= Downloading Condor =

Different versions of Condor can be downloaded from [http://www.cs.wisc.edu/condor/downloads-v2/download.pl here]. This documentation focuses on our experience installing and configuring Condor version 7.2.0. The official detailed documentation for this version can be found [http://www.cs.wisc.edu/condor/manual/v7.2/ here].

= Preparation =

* Submit: Any machine in your pool (including the Central Manager) can be configured to allow Condor jobs to be submitted.

For more information about other required preparatory work, refer to the [http://www.cs.wisc.edu/condor/manual/v7.2/3_2Installation.html#SECTION00422000000000000000 documentation].

= Installation =

== Unix ==

'''The official instructions on how to install Condor in Unix can be found [http://www.cs.wisc.edu/condor/manual/v7.2/3_2Installation.html#SECTION00423000000000000000 here]'''. Below we present some of the tweaks we had to make to get it to work on our Unix machines.

==== Prerequisites ====

=== Configuring a Condor Manager in Unix ===

* Make sure the Condor archive is in your home directory (for example /home/kitware), then untar it.

cd ~

tar -xzvf condor-7.2.X-linux-x86_64-rhel5-dynamic.tar.gz

HOSTALLOW_CONFIG = $(CONDOR_HOST)

* If you have MIDAS integration, create a symbolic link named condor_config in /home/condor that points to the Condor configuration file (etc/condor_config in your Condor installation), so that MIDAS can run Condor commands:

cd /home/condor

ln -s /home/condor/etc/condor_config condor_config

=== Configuring an Executor/Submitter in Unix ===

The files that allow the server to also act as a Condor submitter/executor were updated automatically when the installation script ''condor_install'' ran. Nevertheless, you still need to update its configuration file.

* Edit the node's condor_config.local file and update the line shown below:

vi /home/condor/condor_config.local

CONDOR_ADMIN = email@website.com

If the installation went well, the lines setting ''UID_DOMAIN'' and ''FILESYSTEM_DOMAIN'' should already have the value ''website.com''.

== Windows ==

'''The official documentation on how to install Condor in Windows can be found [http://www.cs.wisc.edu/condor/manual/v7.2/3_2Installation.html#SECTION00425000000000000000 here]'''. Below we describe our experience installing Condor in Windows 7.

# Download the Windows install MSI and run it, installing to C:\condor.
# Accept the license agreement.
# Decide whether you are installing a central controller or a submit/execute node.
## If installing a central controller, select "create a new central pool" and set the name of the pool.
## Otherwise, select "Join an existing Pool" and enter the full hostname of the central manager.
# Decide whether the machine should be a submitter node, and select the appropriate option.
# If the machine will be an executor, decide when Condor should run jobs.
## Decide what happens to jobs when the machine stops being idle.
# For the accounting domain, enter your domain (e.g. yourdomaininternal.com).
# For the email settings, I skipped this step by clicking Next.
# For the Java settings, I skipped this step by clicking Next, since we weren't using Java.

Set the following values when prompted:

Host Permission Settings:

hosts with read: *

hosts with write: *

hosts with administrator access: $(FULL_HOSTNAME)

enable vm universe: no

enable hdfs support: no

When asked whether you want a custom install or a standard install, choose Install. This installs Condor to C:\condor.

The installer will ask you to reboot your machine. If you want to run the Condor command-line programs from anywhere on your system, add C:\condor\bin to your system's PATH environment variable.

When running Condor commands, start a Cygwin or cmd prompt with elevated (administrator) privileges.

After the install, I could see the Condor system by running

condor_status

This helped me fix some problems. At first, '''condor_status''' gave me "unable to resolve COLLECTOR_HOST". There are many helpful log files to review in C:\condor\log; the first one I looked at was '''.master_address'''. The IP address listed there was incorrect: my machine has multiple IP addresses, and I needed to specify the IP address of my wired connection, which is on the same subnet as my COLLECTOR_HOST. Your IP address might incorrectly be a loopback address such as 127.0.0.1, or simply an IP that you do not want Condor to use.

I shut down Condor, right-clicked C:\condor in Windows Explorer, turned off "Read-only", and set the permissions to allow writing. Then I edited the file C:\condor\condor_config.local, which started out empty, so that Condor would pick up some replacement values (some of them did not seem to be set properly during the install). These values are:

NETWORK_INTERFACE = <IP address>
UID_DOMAIN = *.yourdomaininternal.com
FILESYSTEM_DOMAIN = *.yourdomaininternal.com
COLLECTOR_NAME = PoolName
ALLOW_READ = *
ALLOW_WRITE = *
# Choose one of the following:
#
# For a submit/execute node:
DAEMON_LIST = MASTER, SCHEDD, STARTD
# For a central collector host and submit/execute node:
DAEMON_LIST = MASTER, COLLECTOR, NEGOTIATOR, SCHEDD, STARTD, KBDD
TRUST_UID_DOMAIN = True
START = True

You may want to add DEFAULT_DOMAIN_NAME = yourdomaininternal.com if your machine shows up in Condor without a domain name.

I then restarted Condor. If you run condor_status and see that the Windows machine is in the Owner state rather than Unclaimed, make sure you have added START = True. However, this may not be the best configuration for a Windows workstation that is in use: additional configuration is probably needed so that a Condor job does not use the machine while someone is working at it.
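The configuration above lists two DAEMON_LIST lines and asks you to keep one. As we understand the Condor configuration language, when the same macro is defined more than once, the last definition wins, and lines starting with # are comments. The C++ sketch below models only that behavior, plus splitting a comma-separated list such as DAEMON_LIST. It is a simplified illustration under those assumptions, not Condor's real parser: it ignores $(MACRO) expansion and other syntax, and `parseConfig` and `splitList` are hypothetical helpers.

```cpp
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Trim leading and trailing whitespace.
static std::string trim(const std::string& s) {
    const auto first = s.find_first_not_of(" \t\r");
    if (first == std::string::npos) return "";
    const auto last = s.find_last_not_of(" \t\r");
    return s.substr(first, last - first + 1);
}

// Hypothetical helper: parse "NAME = value" lines. Lines starting with '#'
// are comments; a later definition of NAME replaces an earlier one.
std::map<std::string, std::string> parseConfig(const std::string& text) {
    std::map<std::string, std::string> config;
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line)) {
        line = trim(line);
        if (line.empty() || line[0] == '#') continue;
        const auto eq = line.find('=');
        if (eq == std::string::npos) continue;  // not an assignment
        config[trim(line.substr(0, eq))] = trim(line.substr(eq + 1));
    }
    return config;
}

// Hypothetical helper: split a value such as DAEMON_LIST on commas.
std::vector<std::string> splitList(const std::string& value) {
    std::vector<std::string> items;
    std::istringstream in(value);
    std::string item;
    while (std::getline(in, item, ',')) {
        item = trim(item);
        if (!item.empty()) items.push_back(item);
    }
    return items;
}
```

Under this model, leaving both DAEMON_LIST lines uncommented means the second one (the central collector list) takes effect, which is why you should keep only the one you want.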

At this point I was able to get one of the Condor Windows examples working, with a bit of tweaking for Windows 7.

Here are the contents of the batch file for the actual job (printname.bat):

@echo off
echo Here is the output from "net USER" :
net USER

And here is the printname.sub Condor submit file, which I submitted with

condor_submit printname.sub

universe = vanilla
environment = path=c:\Windows\system32
executable = printname.bat
output = printname.out
error = printname.err
log = printname.log
queue

==== Useful Condor Commands on Windows ====

To run these commands, open a command prompt or Cygwin terminal with elevated privileges: right-click its icon and choose "Run as administrator".

On Windows, condor_master runs as a service and controls the other daemons.

To stop Condor:

net stop condor

To start Condor:

net start condor

Before you can submit a job to Condor on Windows, you need to store your user's credentials (password). Run

condor_store_cred add

then enter your password.

= Running Condor =

'''The official user's manual on how to perform distributed computing in Condor is [http://www.cs.wisc.edu/condor/manual/v7.2/2_Users_Manual.html here]'''.

* Run the Condor master:

condor_master

* Assuming that during installation you set the type to ''manager,execute,submit'' (the default), run the following command:

ps -e | egrep condor_

* You should get something similar to:

1063 ? 00:00:00 condor_master
1064 ? 00:00:00 condor_collecto
1065 ? 00:00:00 condor_negotiat
1066 ? 00:00:00 condor_schedd
1067 ? 00:00:00 condor_startd
1068 ? 00:00:00 condor_procd

* If you run ''ps -e | egrep condor_'' just after starting Condor, you may also see the following line:

1077 ? 00:00:00 condor_starter

* Check the status:

kitware@rigel:~$ condor_status
Name OpSys Arch State Activity LoadAv Mem ActvtyTime
slot1@rigel LINUX X86_64 Unclaimed Idle 0.010 1006 0+00:10:04
slot2@rigel LINUX X86_64 Unclaimed Idle 0.000 1006 0+00:10:05
Total Owner Claimed Unclaimed Matched Preempting Backfill
X86_64/LINUX 2 0 0 2 0 0 0
Total 2 0 0 2 0 0 0

* Set up Condor to start automatically at boot:

cp /root/condor/etc/example/condor.boot /etc/init.d/

* Update the MASTER parameter in ''condor.boot'' to match your setup:

vi /etc/init.d/condor.boot

MASTER=/root/condor/sbin/condor_master

* Add the ''condor.boot'' service to all runlevels:

kitware@rigel:~$ update-rc.d condor.boot defaults
/etc/rc0.d/K20condor.boot -> ../init.d/condor.boot
/etc/rc1.d/K20condor.boot -> ../init.d/condor.boot
/etc/rc6.d/K20condor.boot -> ../init.d/condor.boot
/etc/rc2.d/S20condor.boot -> ../init.d/condor.boot
/etc/rc3.d/S20condor.boot -> ../init.d/condor.boot
/etc/rc4.d/S20condor.boot -> ../init.d/condor.boot
/etc/rc5.d/S20condor.boot -> ../init.d/condor.boot
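In the ''ps -e'' output earlier, some daemon names appear cut off (condor_collecto, condor_negotiat). This is expected: Linux stores a process's command name in a 16-byte field including the terminating NUL, so ps shows at most 15 characters. If you script a check that the daemons in DAEMON_LIST are running, compare against the truncated names. The C++ sketch below assumes each daemon's executable is named condor_ followed by the lowercased DAEMON_LIST entry (true for MASTER, COLLECTOR, NEGOTIATOR, SCHEDD and STARTD, but an assumption in general); `expectedPsName` is a hypothetical helper.

```cpp
#include <cctype>
#include <cstddef>
#include <string>

// Linux keeps a process's command name in a 16-byte buffer (including the
// terminating NUL), so `ps -e` shows at most 15 characters of it.
constexpr std::size_t kMaxCommLength = 15;

// Hypothetical helper: map a DAEMON_LIST entry such as "COLLECTOR" to the
// name ps is expected to show, assuming the binary is condor_<lowercase>.
std::string expectedPsName(const std::string& daemon) {
    std::string name = "condor_";
    for (char c : daemon) {
        name += static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
    }
    return name.substr(0, kMaxCommLength);
}
```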

== A simple example demonstrating the use of Condor ==

#include <unistd.h>
#include <stdio.h>

int main( int argc, char** argv )
{
  /* echo the first command-line argument, if one was given */
  if ( argc > 1 )
    printf( "%s\n", argv[1] );
  fflush( stdout );
  /* keep the job alive long enough to watch it with condor_q */
  sleep( 30 );
  return 0;
}

This executable prints the command-line argument it is given, waits 30 seconds, then exits.

Save this file as foo.c, then compile it with static linking:

gcc foo.c -o foo -static

Create a Condor job description and save it as condorjob:

universe = vanilla
executable = foo
should_transfer_files = YES
when_to_transfer_output = ON_EXIT
log = condorjob.log
error = condorjob.err
output = condorjob.out
arguments = "helloworld"
Queue

Then submit the job to Condor:

condor_submit condorjob

After the job finishes, you should have three files in the submission directory:

condorjob.err (contains the standard error, empty in this case)

condorjob.out (contains the standard output, in this case "helloworld")

condorjob.log (contains information about the execution of the job, such as the machine that submitted it and the machine that executed it)

If you want to test this job on multiple slots (say two at once, so you can see how Condor executes the job on multiple execute resources), you can change the condorjob file to look like this:

universe = vanilla
executable = foo
should_transfer_files = YES
when_to_transfer_output = ON_EXIT
log = condorjob1.log
error = condorjob1.err
output = condorjob1.out
arguments = "helloworld1"
Queue
log = condorjob2.log
error = condorjob2.err
output = condorjob2.out
arguments = "helloworld2"
Queue
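Writing one block per job by hand gets tedious as the number of slots grows. The C++ sketch below generates a submit description with the same shape as the two-job file above for any number of jobs: shared settings once, then per-job log/error/output/arguments lines followed by Queue. `makeSubmitFile` is a hypothetical helper, and the condorjobN file names and helloworldN arguments simply follow the pattern used here. Condor's own $(Process) macro combined with `queue N` can achieve a similar result without a generator.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: build a vanilla-universe submit description that
// queues `jobs` copies of `executable`, each with its own log/err/out files
// and a distinct argument (helloworld1, helloworld2, ...).
std::string makeSubmitFile(const std::string& executable, int jobs) {
    std::ostringstream out;
    // Settings shared by every job.
    out << "universe = vanilla\n"
        << "executable = " << executable << "\n"
        << "should_transfer_files = YES\n"
        << "when_to_transfer_output = ON_EXIT\n";
    for (int i = 1; i <= jobs; ++i) {
        // Per-job settings, redefined before each Queue statement.
        out << "log = condorjob" << i << ".log\n"
            << "error = condorjob" << i << ".err\n"
            << "output = condorjob" << i << ".out\n"
            << "arguments = \"helloworld" << i << "\"\n"
            << "Queue\n";
    }
    return out.str();
}
```

You could write the returned text to a file named condorjob (for example with std::ofstream) and submit it with condor_submit as before.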

We had a case with 6 slots: 2 were 32-bit with Arch=INTEL, and 4 were 64-bit with Arch=X86_64 (but we were unaware of the difference at first). We ran 6 jobs and then wondered why they would only execute on the submitting machine. So we changed the condorjob file to require a particular architecture by including

Requirements = Arch == "INTEL"

and submitted it from the X86_64 machine. This told Condor to execute only on machines with the INTEL architecture, so it did not try to execute on the X86_64 submitting machine. We then saw an error in condorjob1.log saying

Exec format error

and realized we had tried to run an executable compiled for the wrong architecture. I've included this story in case it helps with debugging.
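The negotiator decides where a job may run by evaluating the job's Requirements against each slot's attributes. The C++ sketch below is a toy model of that idea for the situation in the story above, not Condor's ClassAd matchmaking: each slot is reduced to a name, Arch and OpSys, and the requirement is a predicate. The `Slot` type and `matchingSlots` function are hypothetical.

```cpp
#include <functional>
#include <string>
#include <vector>

// Toy stand-in for a machine ClassAd: only the attributes used here.
struct Slot {
    std::string name;
    std::string arch;   // e.g. "INTEL" or "X86_64"
    std::string opSys;  // e.g. "LINUX"
};

// Return the names of the slots whose attributes satisfy `requirements`,
// i.e. the slots a job with that Requirements expression could be matched to.
std::vector<std::string> matchingSlots(
        const std::vector<Slot>& slots,
        const std::function<bool(const Slot&)>& requirements) {
    std::vector<std::string> names;
    for (const Slot& s : slots) {
        if (requirements(s)) names.push_back(s.name);
    }
    return names;
}
```

With a pool of two INTEL slots and four X86_64 slots, the predicate `[](const Slot& s) { return s.arch == "INTEL"; }` selects only the two INTEL slots, mirroring `Requirements = Arch == "INTEL"`. As far as we know, when a submit file gives no Requirements, condor_submit adds Arch and OpSys clauses matching the submitting machine, which would explain why our jobs at first ran only on X86_64 slots.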

= Additional Information =

== Troubleshooting Condor ==

Our experience with Condor involved many errors that we had to systematically understand and overcome. Here are some lessons we learned.

* Be sure that your executable is statically linked.
* For Unix submission/execution, we found that we needed to run jobs as a user that exists on all machines in the Condor pool, because we do not have shared Unix users on our network. In our case this user was ''condor''.

Condor supplies a number of utility programs and log files, which are extremely helpful in understanding and correcting problems.

Our setups have Condor log files in /home/condor/localcondor/log and C:\condor\log.

condor_status

Lets you check the status of the Condor pool. This is the first command-line program you should get working, as it will help you debug other problems.

condor_q

Lists the jobs you have submitted to Condor that are still in the queue, with a cluster ID and process ID for each.

condor_q -analyze CID.PID

condor_q -better-analyze CID.PID

Given a job's cluster ID and process ID, these report how many execution machines matched each of the job's requirements.

condor_config_val <CONDOR_VARIABLE>

Prints the value of CONDOR_VARIABLE in your Condor configuration. This can help maintain your sanity.

condor_rm CID.PID

Removes the job with cluster ID CID and process ID PID from the queue. Useful for killing Held or Idle jobs.
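If you script around condor_q -analyze or condor_rm, it helps to validate a CID.PID job ID (cluster ID, a dot, then process ID) before passing it on. The C++ sketch below parses that form only; it does not accept a bare cluster ID, and `JobId` and `parseJobId` are hypothetical helpers, not part of Condor.

```cpp
#include <optional>
#include <string>

struct JobId {
    long cluster;
    long process;
};

// True if `s` is a non-empty run of decimal digits.
static bool allDigits(const std::string& s) {
    return !s.empty() && s.find_first_not_of("0123456789") == std::string::npos;
}

// Hypothetical helper: parse "CID.PID" (e.g. "42.0"); returns std::nullopt if
// malformed. std::stol may still throw for absurdly long digit strings.
std::optional<JobId> parseJobId(const std::string& text) {
    const auto dot = text.find('.');
    if (dot == std::string::npos) return std::nullopt;
    const std::string cid = text.substr(0, dot);
    const std::string pid = text.substr(dot + 1);
    if (!allDigits(cid) || !allDigits(pid)) return std::nullopt;
    return JobId{std::stol(cid), std::stol(pid)};
}
```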

For more details on the daemons described below, see section "3.1.2 The Condor Daemons" in the Condor Manual.

=== condor_master ===

Every machine, regardless of its configuration, runs a condor_master daemon. This process starts and stops all other Condor daemons. condor_master writes a MasterLog and a .master_address file. Be sure that .master_address contains the correct IP address. If it does not, set the correct value with the parameter

NETWORK_INTERFACE = <desired IP>

in your condor_config.local file. This must be an interface the machine actually has; Condor may have picked a different IP than the one you wanted, perhaps the loopback address 127.0.0.1, or a wireless adapter instead of the Ethernet adapter.
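To check the advertised address quickly, you can extract the IP from .master_address. In our experience the file holds an address of the form <IP:port> (Condor calls this a "sinful string"); newer versions may append extra parameters after a '?', so the sketch below stops at the first ':' after the '<'. This is a minimal C++ sketch under that assumption, handling IPv4 only; `extractIp` and `isLoopback` are hypothetical helpers.

```cpp
#include <string>

// Hypothetical helper: extract the IP from an address such as
// "<192.168.1.20:9618>" or "<192.168.1.20:9618?param=...>" (IPv4 only).
// Returns "" if the text does not look like <IP:port>.
std::string extractIp(const std::string& sinful) {
    const auto start = sinful.find('<');
    if (start == std::string::npos) return "";
    const auto colon = sinful.find(':', start + 1);
    if (colon == std::string::npos) return "";
    return sinful.substr(start + 1, colon - start - 1);
}

// IPv4 loopback addresses are 127.0.0.0/8; other machines in the pool
// cannot reach a daemon that advertises one.
bool isLoopback(const std::string& ip) {
    return ip.rfind("127.", 0) == 0;  // starts with "127."
}
```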

=== condor_startd ===

This daemon runs on execution nodes and represents a machine ready to do work for Condor. Its log file describes its requirements. This daemon starts the condor_starter daemon.

=== condor_starter ===

This daemon runs on execution nodes and is responsible for starting the actual execution processes and logging their details.

=== condor_schedd ===

This daemon runs on submit nodes and manages submitted jobs. It tracks the queue of jobs and tries to obtain resources to run all of them. When a submitted job runs, this daemon spawns a condor_shadow daemon.

=== condor_shadow ===

This daemon runs on the submitting machine while a job is executing. It handles system calls that need to be executed on the submitting machine on behalf of a process. There is one condor_shadow process for each executing job from a submit machine, so on a machine with a large number of submitted jobs, the memory or other resources consumed by the shadow daemons could become a limitation.

=== condor_collector ===

This daemon runs on the Pool Collector machine and keeps track of resources within the pool. All nodes in the pool report to it that they exist, what services they support, and what requirements they have.

=== condor_negotiator ===

This daemon typically runs on the Pool Collector machine and matches submitted jobs with execution nodes. Log files of interest include NegotiatorLog and MatchLog.

=== condor_kbdd ===

This daemon detects user activity on an execute node, so that Condor knows whether to allow a job to run or to hold off because a person is currently using the machine.

== The right processor architecture ==

The initial installation was done with the package condor-7.2.0-linux-ia64-rhel3-dynamic.tar.gz, which targets [http://en.wikipedia.org/wiki/IA-64 IA-64], the architecture of Intel's 64-bit Itanium processors. It does not cover all 64-bit Intel processors; most of them use the x86-64 architecture instead.

When we tried to run ''condor_master'', the shell returned the error message ''cannot execute binary file''.

Using the program [http://sourceware.org/binutils/docs/binutils/readelf.html#readelf readelf], you can display the header of an executable and tell whether it can run on a given platform.

kitware@rigel:~/$ readelf -h ~/condor-7.2.0_IA64/sbin/condor_master
ELF Header:
Magic: 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00
Class: ELF64
Data: 2's complement, little endian
Version: 1 (current)
OS/ABI: UNIX - System V
ABI Version: 0
Type: EXEC (Executable file)
Machine: '''Intel IA-64'''
Version: 0x1
Entry point address: 0x40000000000bf3e0
Start of program headers: 64 (bytes into file)
Start of section headers: 9382744 (bytes into file)
Flags: 0x10, 64-bit
Size of this header: 64 (bytes)
Size of program headers: 56 (bytes)
Number of program headers: 7
Size of section headers: 64 (bytes)
Number of section headers: 32
Section header string table index: 31

kitware@rigel:~$ readelf -h /bin/ls
ELF Header:
Magic: 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00
Class: ELF64
Data: 2's complement, little endian
Version: 1 (current)
OS/ABI: UNIX - System V
ABI Version: 0
Type: EXEC (Executable file)
Machine: '''Advanced Micro Devices X86-64'''
Version: 0x1
Entry point address: 0x4023c0
Start of program headers: 64 (bytes into file)
Start of section headers: 104384 (bytes into file)
Flags: 0x0
Size of this header: 64 (bytes)
Size of program headers: 56 (bytes)
Number of program headers: 8
Size of section headers: 64 (bytes)
Number of section headers: 28
Section header string table index: 27

kitware@rigel:~$ readelf -h ./condor-7.2.0/sbin/condor_master
ELF Header:
Magic: 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00
Class: ELF64
Data: 2's complement, little endian
Version: 1 (current)
OS/ABI: UNIX - System V
ABI Version: 0
Type: EXEC (Executable file)
Machine: '''Advanced Micro Devices X86-64'''
Version: 0x1
Entry point address: 0x4b9450
Start of program headers: 64 (bytes into file)
Start of section headers: 4553256 (bytes into file)
Flags: 0x0
Size of this header: 64 (bytes)
Size of program headers: 56 (bytes)
Number of program headers: 8
Size of section headers: 64 (bytes)
Number of section headers: 31
Section header string table index: 30

Comparing the outputs, you can see that '''Intel IA-64''' is not the right architecture for this machine: both /bin/ls and the working condor-7.2.0 build report '''Advanced Micro Devices X86-64'''.
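readelf is the easiest tool for this, but the check is simple enough to script. The Machine line comes from the 16-bit e_machine field at byte offset 18 of the ELF header (the same offset for 32- and 64-bit files), stored in the byte order given by byte 5 of the header (1 = little endian, 2 = big endian). The C++ sketch below reads only that field and names the values relevant here (EM_386 = 3, EM_IA_64 = 50, EM_X86_64 = 62); `elfMachine` and `elfMachineOfFile` are hypothetical helpers, and other machine types are reported as unknown.

```cpp
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// Name the architecture recorded in an ELF header, given its first bytes.
std::string elfMachine(const std::vector<unsigned char>& h) {
    // Need the magic number 7f 'E' 'L' 'F' plus the bytes up to e_machine.
    if (h.size() < 20 || h[0] != 0x7f || h[1] != 'E' || h[2] != 'L' || h[3] != 'F')
        return "not an ELF file";
    // e_ident[5] (EI_DATA): 1 = little endian, 2 = big endian.
    const bool little = (h[5] == 1);
    const std::uint16_t machine = little
        ? static_cast<std::uint16_t>(h[18] | (h[19] << 8))
        : static_cast<std::uint16_t>((h[18] << 8) | h[19]);
    switch (machine) {
        case 3:  return "Intel 80386";
        case 50: return "Intel IA-64";
        case 62: return "Advanced Micro Devices X86-64";
        default: return "unknown (" + std::to_string(machine) + ")";
    }
}

// Read the first bytes of a file (e.g. .../sbin/condor_master) and classify it.
std::string elfMachineOfFile(const std::string& path) {
    std::ifstream in(path, std::ios::binary);
    std::vector<unsigned char> header(64);
    in.read(reinterpret_cast<char*>(header.data()),
            static_cast<std::streamsize>(header.size()));
    header.resize(static_cast<std::size_t>(in.gcount()));
    return elfMachine(header);
}
```

Running elfMachineOfFile on the IA-64 condor_master and on /bin/ls should give the same verdicts as the readelf output above.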


= Links =

* Detailed Condor documentation is also available on the website [http://www.cs.wisc.edu/condor/manual/v7.2/3_2Installation.html here]

= MPICH2 on Windows =

Here we record our experience using MPICH2 on Windows, working towards using MPICH2 with Condor, ideally in a mixed Windows and Linux environment, or otherwise in a homogeneous one.

This section will be cleaned up as we gain more experience.

== MPICH2 Environment on Windows 7 ==

First install MPICH2; I installed version 1.3.2p1, the Windows 32-bit binary. Then add the MPICH2\bin directory to your system PATH, and add '''mpiexec.exe''' and '''smpd.exe''' to the list of exceptions in the Windows Firewall.

I created the same user/password combination (with administrative rights) on two different Windows 7 machines. All work was done while logged in as that user.

The following steps were performed in a Windows CMD prompt with administrative privileges (right-click the CMD executable and choose "Run as administrator").

To reset the smpd passphrase, I first removed the smpd service:

smpd -remove

then reinstalled it with the same passphrase on both machines:

smpd -install -phrase mypassphrase

and could then check its status using

smpd -status

I had to register my user credentials on both machines with

mpiexec -register

then accepted the suggested user by pressing Enter, and entered my Windows account password.

You can check which user you are running as with

mpiexec -whoami

Now you should be able to validate with

mpiexec -validate

It will ask you for the smpd authentication passphrase; this is the '''mypassphrase''' you entered above. If everything is correct, you will get a result of '''SUCCESS'''.

To test this, you can run the '''cpi.exe''' example application in the '''MPICH2\examples''' directory (I ran it with 4 processes since my machine has 4 cores):

mpiexec -n 4 full_path_to\cpi.exe

You can also check that both machines participate in a computation by specifying the IPs of the two machines, how many processes each should run, and the executable '''hostname''', which should print the hostnames of both machines.

mpiexec -hosts 2 ip_1 #_cores_1 ip_2 #_cores_2 hostname
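With more than two machines the -hosts list gets long. The C++ sketch below assembles a command line in the format used above: the host count, then each host followed by its process count, then the program. `Host` and `buildMpiexecCommand` are hypothetical, the addresses in the example are placeholders, and paths containing spaces would still need quoting.

```cpp
#include <sstream>
#include <string>
#include <vector>

struct Host {
    std::string address;  // IP or hostname
    int processes;        // processes to start there (e.g. its core count)
};

// Hypothetical helper: build "mpiexec -hosts N host1 n1 host2 n2 ... program".
std::string buildMpiexecCommand(const std::vector<Host>& hosts,
                                const std::string& program) {
    std::ostringstream cmd;
    cmd << "mpiexec -hosts " << hosts.size();
    for (const Host& h : hosts) {
        cmd << ' ' << h.address << ' ' << h.processes;
    }
    cmd << ' ' << program;
    return cmd.str();
}
```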

== Creating an MPI program on Windows ==

I used Microsoft Visual Studio Express 2008 (MSVSE08) to build a C++ executable.

Here is a very simple working C++ example program:

#include "stdafx.h"
#include <iostream>
#include "mpi.h"
using namespace std;
//
int main(int argc, char* argv[])
{
    // initialize the MPI world
    MPI::Init(argc, argv);
    //
    // get this process's rank
    int rank = MPI::COMM_WORLD.Get_rank();
    //
    // get the total number of processes in the computation
    int size = MPI::COMM_WORLD.Get_size();
    //
    // print out where this process ranks in the total
    std::cout << "I am " << rank << " out of " << size << std::endl;
    //
    // Finalize the MPI world
    MPI::Finalize();
    return 0;
}

To compile this in MSVSE08, right-click the project (not the solution), click Properties, and make the following additions:

* Under the C/C++ menu, in the Additional Include Directories property, add the full path to the '''MPICH2\include''' directory.
* Under the Linker/General menu, in Additional Library Directories, add the full path to the '''MPICH2\lib''' directory.
* Under the Linker/Input menu, in Additional Dependencies, add '''mpi.lib''' and '''cxx.lib'''.

You can test your application using:

mpiexec -n 4 full_path_to\your.exe

Then, to run your executable on two different machines (I had to make a Release build for this; I had first made a Debug build, which caused problems on the second machine):

* Create the same directory on both machines (e.g. '''C:\mpich2work''').
* Copy the executable to this directory on both machines.
* Run the executable on one machine, specifying both IPs and how many processes to run on each machine (I run as many processes as there are cores):

mpiexec -hosts 2 ip_1 #_cores_1 ip_2 #_cores_2 full_path_to_exe_on_both_machines

== MPICH2 and Condor on Windows ==

To configure MPI over Condor, I set up my Windows 7 laptop as a dedicated Condor scheduler and executor, following the instructions at this link:

https://condor-wiki.cs.wisc.edu/index.cgi/wiki?p=HowToConfigureMpiOnWindows

I removed the smpd.exe service, since Condor will manage it:

smpd.exe -remove

I then created the following MPI example program and ran it with a machine_count of 2 and of 4 at different times (on my laptop, which has 4 cores and had Condor running locally). The program assumes an even number of processes. It shows examples of both blocking ("synchronous") and non-blocking ("asynchronous") point-to-point communication using MPI.

Resources that I found helpful in developing this program:

http://www-uxsup.csx.cam.ac.uk/courses/MPI/

https://computing.llnl.gov/tutorials/mpi/

#include "stdafx.h"
#include <iostream>
#include "mpi.h"
using namespace std;
//
int main(int argc, char *argv[])
{
    MPI::Init(argc, argv);
    //
    int rank = MPI::COMM_WORLD.Get_rank();
    int size = MPI::COMM_WORLD.Get_size();
    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int namelen; // set by Get_processor_name to the length of the name
    MPI::Get_processor_name(processor_name, namelen);
    //
    int buf[1];
    int numElements = 1;
    // initialize buffer with rank
    buf[0] = rank;
    // example of synchronous (blocking) communication
    // have even ranks send to odd ranks first
    // assumes an even number of processes
    int syncTag = 123;
    if (rank % 2 == 0)
    {
        // send to next higher rank
        int dest = rank + 1;
        std::cout << processor_name << "." << rank << " will send to " << dest << ", for now buf[0]= " << buf[0] << std::endl;
        MPI::COMM_WORLD.Send(buf, numElements, MPI::INT, dest, syncTag);
        // now wait for dest to send back
        // even though dest is the param, in the Recv call it is used as the source
        MPI::COMM_WORLD.Recv(buf, numElements, MPI::INT, dest, syncTag);
        std::cout << processor_name << "." << rank << ", after receiving from " << dest << ", buf[0]= " << buf[0] << std::endl;
    }
    else
    {
        // source is next lower rank
        int source = rank - 1;
        std::cout << processor_name << "." << rank << ", will receive from " << source << ", for now buf[0]= " << buf[0] << std::endl;
        MPI::COMM_WORLD.Recv(buf, numElements, MPI::INT, source, syncTag);
        std::cout << processor_name << "." << rank << ", after receiving from " << source << ", buf[0]= " << buf[0] << std::endl;
        // send rank rather than buffer, just to be sure
        // even though param is source, in Send call it is for dest
        MPI::COMM_WORLD.Send(&rank, numElements, MPI::INT, source, syncTag);
    }
    // now for asynchronous (non-blocking) communication
    std::cout << processor_name << "." << rank << " switching to asynchronous" << std::endl;
    int asyncTag = 321;
    // neighbors on a ring, wrapping around at both ends
    int leftRank = (rank == 0) ? size - 1 : rank - 1;
    int rightRank = (rank == size - 1) ? 0 : rank + 1;
    // everyone sends to the leftRank, and receives from the rightRank
    // if this were synchronous, would be in danger of deadlocking because of circular waits
    // use separate buffers: an Isend buffer must not be written to until the send completes
    int sendBuf[1] = { rank };
    int recvBuf[1] = { -1 };
    std::cout << processor_name << "." << rank << " will send to " << leftRank << ", sendBuf[0]= " << sendBuf[0] << std::endl;
    MPI::Request sendReq = MPI::COMM_WORLD.Isend(sendBuf, numElements, MPI::INT, leftRank, asyncTag);
    MPI::Request recvReq = MPI::COMM_WORLD.Irecv(recvBuf, numElements, MPI::INT, rightRank, asyncTag);
    // wait on the receipt, then make sure our own send has completed too
    MPI::Status status;
    recvReq.Wait(status);
    sendReq.Wait();
    std::cout << processor_name << "." << rank << " after receiving from " << rightRank << ", recvBuf[0]= " << recvBuf[0] << std::endl;
    //
    MPI::Finalize();
    return(0);
}
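The leftRank/rightRank computation in the program above builds a ring with wrap-around at both ends, and the even/odd exchange pairs rank 0 with 1, 2 with 3, and so on. Both can be written as plain arithmetic: adding size before taking the remainder keeps the left neighbor non-negative for rank 0, and flipping the lowest bit gives the exchange partner. The C++ sketch below contains no MPI calls, so it can be checked without an MPI installation; the function names are hypothetical.

```cpp
// Left neighbor on a ring of `size` ranks: rank 0 wraps to size - 1.
int ringLeft(int rank, int size) { return (rank - 1 + size) % size; }

// Right neighbor on the ring: the last rank wraps to 0.
int ringRight(int rank, int size) { return (rank + 1) % size; }

// Partner in the even/odd exchange: flipping the lowest bit maps
// 0<->1, 2<->3, ... (assumes an even number of processes, as the program does).
int pairPartner(int rank) { return rank ^ 1; }
```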

I then created a two-machine Condor parallel universe pool, using the same user/password combination on both machines; this user had administrative rights. My 4-core Windows 7 Professional laptop (X86_64, WINNT61) was both the '''CONDOR_HOST''' and the '''DedicatedScheduler'''. Assuming this laptop is called '''clavicle''' in the '''shoulder.com''' domain, here are the important configuration parameters in its '''condor_config.local''' file:

CONDOR_HOST=clavicle.shoulder.com
SMPD_SERVER = C:\Program Files (x86)\MPICH2\bin\smpd.exe
SMPD_SERVER_ARGS = -p 6666 -d 1
DedicatedScheduler = "DedicatedScheduler@clavicle.shoulder.com"
STARTD_EXPRS = $(STARTD_EXPRS), DedicatedScheduler
START = TRUE
SUSPEND = FALSE
PREEMPT = FALSE
KILL = FALSE
WANT_SUSPEND = FALSE
WANT_VACATE = FALSE
CONTINUE = TRUE
RANK = 0
DAEMON_LIST = MASTER, SCHEDD, STARTD, COLLECTOR, NEGOTIATOR, SMPD_SERVER

The second machine, a 2-core Windows XP desktop called '''scapula''' (INTEL, WINNT51), was a dedicated execute machine with the following important parameters in its '''condor_config.local''' file:

CONDOR_HOST=clavicle.shoulder.com
SMPD_SERVER = C:\Program Files\MPICH2\bin\smpd.exe
SMPD_SERVER_ARGS = -p 6666 -d 1
DedicatedScheduler = "DedicatedScheduler@clavicle.shoulder.com"
STARTD_EXPRS = $(STARTD_EXPRS), DedicatedScheduler
START = TRUE
SUSPEND = FALSE
PREEMPT = FALSE
KILL = FALSE
WANT_SUSPEND = FALSE
WANT_VACATE = FALSE
CONTINUE = TRUE
RANK = 0
DAEMON_LIST = MASTER, STARTD, SMPD_SERVER

Note how it points to '''clavicle''' both as '''CONDOR_HOST''' and as the '''DedicatedScheduler''', and that it does not have '''(x86)''' in its path to '''smpd.exe'''.

MPICH2 installs to different locations: '''C:\Program Files\MPICH2''' on an (INTEL,WINNT51) machine, and '''C:\Program Files (x86)\MPICH2''' on an (X86_64,WINNT61) machine. The batch file referenced by my Condor submit file needs the path to '''mpiexec.exe''', and that batch file is the same for both machines. I therefore copied '''mpiexec.exe''' to the work directory I used on all machines, '''C:\mpich2work'''.

After setting up this configuration, I rebooted both machines so that Condor would start with it. From this point on, the command

net stop condor

no longer completes correctly, since Condor is now managing the '''smpd.exe''' service and does not know how to stop that service.

Starting from the submit file described on the Condor wiki page, I adapted my own submit file for the MPI executable above, called '''mpiwork.exe''', as follows:

universe = parallel
executable = mp2script.bat
arguments = mpiwork.exe
machine_count = 6
output = out.$(NODE).log
error = error.$(NODE).log
log = work.$(NODE).log
should_transfer_files = yes
when_to_transfer_output = on_exit
transfer_input_files = mpiwork.exe
Requirements = ((Arch == "INTEL") && (OpSys == "WINNT51")) || ((Arch == "X86_64") && (OpSys == "WINNT61"))
queue
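The Requirements line restricts matching to 32-bit XP slots and 64-bit Windows 7 slots. The sketch below mimics that expression in C++ and checks whether enough slots match to satisfy `machine_count = 6`. `Slot`, `matchesRequirements` and `canSchedule` are hypothetical names. It is a simplification: Condor evaluates Requirements as a ClassAd expression, and the dedicated scheduler must also be able to claim the matching slots.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical description of one slot as advertised to the collector.
struct Slot {
    std::string arch;   // e.g. "INTEL" or "X86_64"
    std::string opSys;  // e.g. "WINNT51" or "WINNT61"
};

// Mirrors the submit file's Requirements expression.
bool matchesRequirements(const Slot& s) {
    return (s.arch == "INTEL" && s.opSys == "WINNT51") ||
           (s.arch == "X86_64" && s.opSys == "WINNT61");
}

// A parallel universe job needs machine_count matching slots at the same time.
bool canSchedule(const std::vector<Slot>& slots, int machineCount) {
    int matching = 0;
    for (const Slot& s : slots) {
        if (matchesRequirements(s)) ++matching;
    }
    return matching >= machineCount;
}
```

With the 4 cores of clavicle and the 2 cores of scapula, exactly 6 slots match, so `machine_count = 6` can be satisfied but 7 could not.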

and my '''mp2script.bat''' file was adapted to be:

set _CONDOR_PROCNO=%_CONDOR_PROCNO%
set _CONDOR_NPROCS=%_CONDOR_NPROCS%
REM If not the head node, just sleep forever
if not [%_CONDOR_PROCNO%] == [0] copy con nothing
REM Set this to a directory containing mpiexec.exe (here the shared work directory)
set MPDIR="C:\mpich2work"
REM run the actual mpijob
%MPDIR%\mpiexec.exe -n %_CONDOR_NPROCS% -p 6666 %*
exit 0
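The script's control flow is easy to misread: every node runs the same batch file, but only node 0 launches mpiexec. The sketch below expresses that decision in C++ as a hypothetical function, `headNodeCommand`, using the values from the script. The real script reads `_CONDOR_PROCNO` and `_CONDOR_NPROCS` from the environment that Condor sets.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper reproducing mp2script.bat's decision: only node 0 launches
// mpiexec; every other node just waits. Returns the command node `procNo` would
// run, or "" if that node only waits.
std::string headNodeCommand(int procNo, int nProcs,
                            const std::string& mpDir, const std::string& args) {
    if (procNo != 0) {
        return "";  // non-head nodes block (the script uses "copy con nothing")
    }
    return mpDir + "\\mpiexec.exe -n " + std::to_string(nProcs) + " -p 6666 " + args;
}
```

mpiexec on the head node then starts all `_CONDOR_NPROCS` MPI processes through the smpd services. The other Condor nodes exist only to hold their slots for the duration of the job.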

with the change being that '''MPDIR''' is a location known to contain '''mpiexec.exe''' on both machines, since I copied that file there on both machines as described above.

One point of confusion: I expected the output of the '''mpiwork.exe''' code above to contain the names of both '''clavicle''' and '''scapula''' when I ran it on 6 nodes. Instead, only the name '''clavicle''' appeared. I do believe it was running on both machines and all 6 cores. Both machines' IP addresses were noted in work.parallel.log, and both machines showed CPU activity while the executable ran.

== Adding an Additional Compute Node, for MPICH2 and Condor on Windows ==

Once you have an existing pool, adding a new node to it is relatively straightforward if Condor is already installed on the node. We have found that the new node does not need the same user account as the existing pool machines in order to run Condor/MPI.

After you have Condor set up initially on the new node, stop Condor from a command prompt started with Administrative privileges:

net stop condor

Edit your condor_config.local as shown above for the execute node example, so that this new node is a dedicated execute node.

Restart Condor:

net start condor

Now create a work directory on the new machine. It must have the same name as the work directory on the other machines, in this example '''C:\mpich2work'''.

Copy any needed input files to the work directory (another option is to have Condor transfer them).

Copy the '''mpiexec.exe''' file from the '''MPICH2_INSTALL\bin''' directory to the work directory.

That is all that is necessary. You can have both the actual executable and the driver batch script sent to the new execute node via commands in your Condor submit file. In this example, the executable is '''MetaOptimizer.exe''' and the driver script is '''driver.bat''':

universe = parallel
executable = driver.bat
transfer_executable = true
arguments = MetaOptimizer.exe C:\mpich2work\Input1.mhd C:\mpich2work\Input2.mhd C:\mpich2work\output
machine_count = 6
output = metaopt.$(NODE).log
error = metaopt.$(NODE).log
log = metaopt.$(NODE).log
should_transfer_files = yes
when_to_transfer_output = on_exit
transfer_input_files = MetaOptimizer.exe
queue

== MPI Based Meta-Optimization Framework ==

=== High Level Architecture ===

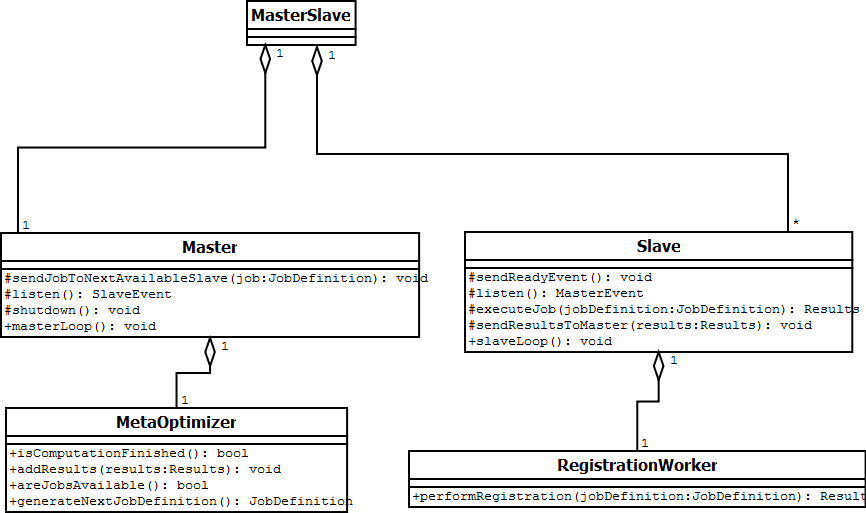

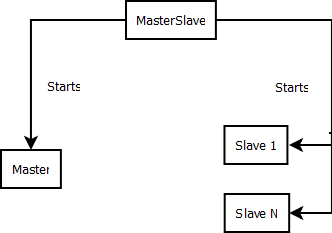

The system was designed for infrastructure reuse. The infrastructure classes take care of organizing the computation nodes and the communication between them. When a new MetaOptimization is needed, the client programmer should create subclasses of '''MetaOptimizer''', '''JobDefinition''', '''Results''', and '''RegistrationWorker''' specific to the computational problem.

=== MPI Aware Infrastructure C++ classes ===

[[File:Mpi metaopt class diagram.png | UML Diagram of C++ classes]]

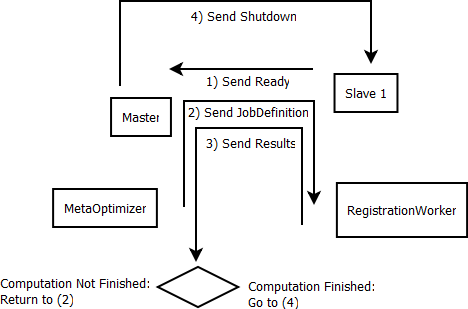

'''MasterSlave''': Entry point of the application. It initializes the MPI infrastructure, starts up a '''Master''' (sending it a particular '''MetaOptimizer''' subclass), starts up as many '''Slave''' nodes as are necessary (sending each of them a particular '''RegistrationWorker''' subclass), and finalizes the MPI infrastructure when the computation is complete.

[[File:Mpimeta start.png | MetaOptimization Framework Startup]]

[[File:Mpimeta computation.png | MetaOptimization Framework Computation]]

'''Master''': The central controlling part of the infrastructure. It manages the available '''Slave'''s, sends them '''JobDefinition'''s as needed, collects '''Results''' from the '''Slave'''s as they come in, re-runs a '''JobDefinition''' if it has failed or timed out, and shuts down all '''Slave'''s when the computation is finished. The '''Master''' owns a particular '''MetaOptimizer''' subclass. The '''MetaOptimizer''' subclass is aware of the particular computation. The '''Master''' only knows how to communicate with '''Slave'''s and has no responsibility for breaking a computation into parts or determining its completion.

'''Slave''': One of the processing nodes, part of the infrastructure. Each '''Slave''' manages a '''RegistrationWorker''' subclass. '''Slave'''s announce their presence to the '''Master''', receive a '''JobDefinition''' describing a particular job, pass the '''JobDefinition''' on to the '''RegistrationWorker''' to run the job, receive back a '''Results''' upon completion of the job, and send the '''Results''' back to the '''Master'''. '''Slave'''s are intended to run multiple rounds of registration during a particular computational run.
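The Master's bookkeeping (hand out jobs, collect Results, re-run failed or timed-out jobs) can be sketched without MPI. The `JobTracker` class below is hypothetical and is not the framework's actual code. Job ids stand in for JobDefinitions, and slave ranks stand in for Slaves.

```cpp
#include <cassert>
#include <deque>
#include <map>
#include <optional>

// Hypothetical, MPI-free sketch of the Master's bookkeeping.
class JobTracker {
public:
    // Queue a job for the current round.
    void addJob(int jobId) { pending_.push_back(jobId); }

    // Hand the next pending job to an idle slave; empty if nothing is waiting.
    std::optional<int> assign(int slaveRank) {
        if (pending_.empty()) return std::nullopt;
        int job = pending_.front();
        pending_.pop_front();
        running_[slaveRank] = job;
        return job;
    }

    // The slave sent back Results for the job it was running.
    void complete(int slaveRank) {
        if (running_.erase(slaveRank) > 0) ++completed_;
    }

    // The slave failed or timed out: put its job back at the front of the queue
    // so that it is re-run next.
    void fail(int slaveRank) {
        auto it = running_.find(slaveRank);
        if (it == running_.end()) return;
        pending_.push_front(it->second);
        running_.erase(it);
    }

    // A round is over when nothing is waiting and nothing is running.
    bool roundFinished() const { return pending_.empty() && running_.empty(); }
    int completed() const { return completed_; }

private:
    std::deque<int> pending_;
    std::map<int, int> running_;  // slave rank -> job id
    int completed_ = 0;
};
```

Detecting a timeout would need a clock per running job. This sketch leaves that to the caller, which simply calls `fail()` for the affected slave.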

=== Infrastructure Event C++ classes ===

'''MasterEvent''' & '''SlaveEvent''' (named for the origin of the Event) are Infrastructure Event classes, and each holds a Message Wrapper class as a payload.

The idea behind the job message wrapper classes was to make message wrappers that know how to convert their specific named parameters to and from array buffers. This gives them a pseudo-serialization ability without requiring them to be aware of MPI specifics. Each message wrapper class is associated with a specific Infrastructure Event class ('''SlaveEvent'''s own a '''Results''', and '''MasterEvent'''s own a '''JobDefinition'''). The Infrastructure Event classes take care of sending from '''Master''' classes to '''Slave''' classes, and from '''Slave''' classes to '''Master''' classes.

The '''MetaOptimizer''' subclasses return '''JobDefinition''' subclasses to the '''Master''', which wraps each '''JobDefinition''' subclass in a '''MasterEvent''' and sends the '''MasterEvent''' to a '''Slave'''. A '''Slave''' reads a '''MasterEvent''', extracts the '''JobDefinition''' subclass, and passes it to a '''RegistrationWorker''' subclass. Upon completion of the calculation for the '''JobDefinition''', the '''RegistrationWorker''' returns a '''Results''' subclass to the '''Slave''', which wraps the '''Results''' in a '''SlaveEvent''' and sends the '''SlaveEvent''' to the '''Master'''. The '''Master''' reads the '''SlaveEvent''', extracts the '''Results''' subclass, and passes this '''Results''' subclass to the '''MetaOptimizer'''.
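Below is a minimal sketch of the pseudo-serialization idea, assuming a wrapper with three numeric parameters. `ExampleJobDefinition` and its fields are hypothetical. The wrapper converts itself to and from a flat `double` buffer, which an event class could then send with MPI without the wrapper knowing anything about MPI.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical JobDefinition-style message wrapper. It flattens its named
// parameters into a plain double buffer and rebuilds itself from one.
class ExampleJobDefinition {
public:
    int jobId = 0;
    double stepSize = 0.0;
    double tolerance = 0.0;

    // Number of doubles in the flattened form; the receiver sizes its buffer with it.
    static constexpr std::size_t kBufferSize = 3;

    std::vector<double> toBuffer() const {
        return {static_cast<double>(jobId), stepSize, tolerance};
    }

    static ExampleJobDefinition fromBuffer(const std::vector<double>& buf) {
        ExampleJobDefinition def;
        def.jobId = static_cast<int>(buf.at(0));
        def.stepSize = buf.at(1);
        def.tolerance = buf.at(2);
        return def;
    }
};
```

Keeping the buffer layout inside the wrapper means only the wrapper changes when a computation needs different parameters. The event classes just move `kBufferSize` doubles.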

=== Computation Specific C++ classes ===

Note: currently these classes are specific to one example computation. Their interface still needs to be abstracted out, and the example computation extracted and moved into subclasses.

'''JobDefinition''': A Message Wrapper class that defines the parameters of a particular job. A specific subclass of '''JobDefinition''' should be used for a particular computation.

'''Results''': A Message Wrapper class that defines the return values of a particular job. A specific subclass of '''Results''' should be used for a particular computation.

'''MetaOptimizer''': The '''MetaOptimizer''' subclass for a particular computation determines the parameters for each job (in the form of a '''JobDefinition''') and how many '''JobDefinition'''s make up a round of computation. It then takes in the '''Results''' from each '''JobDefinition'''. Once it has received '''Results''' for every '''JobDefinition''' in a round, it either starts a new round by calculating a new set of parameters or terminates the computation.

'''RegistrationWorker''': The '''RegistrationWorker''' subclass for a particular computation takes in a '''JobDefinition''' subclass germane to the computation, performs the actual registration computation, and returns the results in the form of a specific '''Results''' subclass relevant to the computation.
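To illustrate the MetaOptimizer contract, here is a hypothetical interface plus a toy subclass that performs a grid-refinement search for the minimum of a one-dimensional function. Each round it proposes five parameter values (one JobDefinition each) and waits for all the Results. It then narrows the search interval around the best value. In the test, a plain loop plays the roles of Master and RegistrationWorker. None of these names come from the framework's code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical interface mirroring the MetaOptimizer responsibilities above.
class MetaOptimizerSketch {
public:
    virtual ~MetaOptimizerSketch() = default;
    // Parameters for every job in the next round (one value per JobDefinition).
    virtual std::vector<double> nextRound() = 0;
    // Results for job `job` of the current round.
    virtual void addResult(std::size_t job, double metric) = 0;
    // True once the optimizer has decided to terminate the computation.
    virtual bool done() const = 0;
};

// Toy subclass: grid-refinement search for the parameter with the smallest metric.
class GridRefiner : public MetaOptimizerSketch {
public:
    GridRefiner(double lo, double hi, int maxRounds)
        : lo_(lo), hi_(hi), roundsLeft_(maxRounds) {}

    std::vector<double> nextRound() override {
        params_.clear();
        metrics_.assign(kJobs, 0.0);
        received_ = 0;
        const double step = (hi_ - lo_) / (kJobs - 1);
        for (int i = 0; i < kJobs; ++i) params_.push_back(lo_ + i * step);
        return params_;
    }

    // Assumes each job of the round reports exactly once.
    void addResult(std::size_t job, double metric) override {
        metrics_.at(job) = metric;
        if (++received_ == kJobs) finishRound();
    }

    bool done() const override { return roundsLeft_ <= 0; }
    double best() const { return best_; }

private:
    static constexpr int kJobs = 5;

    // All Results are in: keep the best parameter and halve the interval around it.
    void finishRound() {
        std::size_t bestJob = 0;
        for (std::size_t j = 1; j < metrics_.size(); ++j) {
            if (metrics_[j] < metrics_[bestJob]) bestJob = j;
        }
        best_ = params_[bestJob];
        const double halfWidth = (hi_ - lo_) / 4.0;
        lo_ = best_ - halfWidth;
        hi_ = best_ + halfWidth;
        --roundsLeft_;
    }

    double lo_;
    double hi_;
    int roundsLeft_;
    int received_ = 0;
    double best_ = 0.0;
    std::vector<double> params_;
    std::vector<double> metrics_;
};
```

Because the new interval extends one grid step on either side of the best point, a minimum inside the interval stays inside it from round to round.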

Latest revision as of 11:09, 27 June 2011

Introduction

Condor is an open source distributed computing software framework. It can be used to manage workload on a dedicated cluster of computers, and/or to farm out work to idle desktop computers. Condor is a cross-platform system that can run on Unix and Windows operating systems. It is a complex and flexible system that can execute jobs in serial and parallel mode; for parallel jobs, it supports the MPI standard. This wiki page documents our working experience using Condor.

Downloading Condor

Different versions of Condor can be downloaded from here. This documentation focuses on our experience installing and configuring Condor Version 7.2.0. The official detailed documentation for this version can be found here.

Preparation

As Condor is a flexible system, there are different ways of configuring Condor in your computing infrastructure. Hence, before starting installation, make the following important decisions.

- What machine will be the central manager?

- What machines should be allowed to submit jobs?

- Will Condor run as root or not?

- Do I have enough disk space for Condor?

- Do I need MPI configured?

Condor can be installed as a manager node, an execute node, a submit node, or any combination of these. See The Different Roles a Machine Can Play.

- Manager: There can be only one central manager for your pool. This machine collects information and negotiates between resources and resource requests.

- Execute: Any machine in your pool (including the Central Manager) can be configured to execute Condor jobs.

- Submit: Any machine in your pool (including the Central Manager) can be configured to allow Condor jobs to be submitted.

For more information regarding other required preparatory work, refer to the documentation.

Installation

Unix

The official instructions on how to install Condor on Unix can be found here. Below we present some of the tweaks we had to make to get it to work on our Unix machines.

Prerequisites

- Be sure the server has a hostname and a domain name

hostname

should return mymachine.mydomain.com (or .org, .edu, etc.). If it only returns mymachine, then your server does not have a fully qualified domain name.

To set the domain name, edit /etc/hosts and add your domain name to the first line. You might see something like

10.171.1.124 mymachine

change this to

10.171.1.124 mymachine.mydomain.com

Also edit /etc/hostname to be

mymachine.mydomain.com

Then reboot so that the hostname changes take effect.
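Checking that `hostname` prints a fully qualified name amounts to looking for a domain part after the host label. The helper below, `isFullyQualified`, is hypothetical and is only a sketch. On Unix, its input string could come from the `hostname` command or from `gethostname()` in `<unistd.h>`.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: treat a hostname as fully qualified when a non-empty host
// label is followed by a dot and a non-empty domain part,
// e.g. "mymachine.mydomain.com" passes while "mymachine" does not.
bool isFullyQualified(const std::string& host) {
    const std::string::size_type dot = host.find('.');
    return dot != std::string::npos && dot > 0 && dot + 1 < host.size();
}
```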

- Make sure the following packages are installed:

apt-get install mailutils


- Download the package condor-7.2.X-linux-x86_64-rhel5-dynamic.tar.gz ( Platform RHEL 5 Intel x86/64 ) See http://www.cs.wisc.edu/condor/downloads-v2/download.pl

For example, you could run a similar command to download the desired package:

wget http://parrot.cs.wisc.edu//symlink/20090223121502/7/7.2/7.2.1/fec3779ab6d2d556027f6ae4baffc0d6/condor-7.2.X-linux-x86_64-rhel5-dynamic.tar.gz

- You should install Condor as root or with a user having equivalent privileges

Configuring a Condor Manager in Unix

- Make sure the Condor archive is in your home directory (for example /home/kitware), then untar it.

cd ~
tar -xzvf condor-7.2.X-linux-x86_64-rhel5-dynamic.tar.gz
cd ./condor-7.2.X

- If not yet done, create a condor user

adduser condor

- Run the installation script condor_install

./condor_install --install=. --prefix=/root/condor --local-dir=/home/condor/localcondor

After running the installation script, you should get the following output:

Installing Condor from /root/condor-7.2.X to /root/condor

Condor has been installed into:

/root/condor

Configured condor using these configuration files:

global: /root/condor/etc/condor_config

local: /home/condor/localcondor/condor_config.local

Created scripts which can be sourced by users to setup their

Condor environment variables. These are:

sh: /root/condor/condor.sh

csh: /root/condor/condor.csh

- Switch to the directory where condor is now installed

cd /root/condor

- Edit /etc/environment and update PATH variable to include the directory /root/condor/bin and /root/condor/sbin

PATH="/root/condor/bin:/root/condor/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games"

- Add the following line

CONDOR_CONFIG="/root/condor/etc/condor_config"

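- Save the file and load the new values into your current shell. Because /etc/environment holds plain NAME=value lines, wrap the source command in set -a / set +a. This exports CONDOR_CONFIG to the programs you start, rather than only setting it in the shell:

set -a; source /etc/environment; set +a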

- Make sure CONDOR_CONFIG and PATH are set correctly

root@rigel:~$ echo $PATH
/root/condor/bin:/root/condor/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games
root@rigel:~$ echo $CONDOR_CONFIG
/root/condor/etc/condor_config

- You can now log out and log back in, or even restart the machine, and you should be able to check that the CONDOR_CONFIG and PATH environment variables are still set.

- Edit the Condor manager configuration file and update the lines shown below:

cd ~/condor
vi ./etc/condor_config

RELEASE_DIR = /root/condor
LOCAL_DIR = /home/condor/localcondor
CONDOR_ADMIN = email@website.com
UID_DOMAIN = website.com
FILESYSTEM_DOMAIN = website.com
HOSTALLOW_READ = *.website.com
HOSTALLOW_WRITE = *.website.com
HOSTALLOW_CONFIG = $(CONDOR_HOST)
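Lines such as `HOSTALLOW_CONFIG = $(CONDOR_HOST)` refer to other configuration variables. To make the `$(NAME)` substitution concrete, here is a much-simplified reader, `ConfigSketch`, which is hypothetical. It is not Condor's parser; `condor_config_val` remains the authoritative way to see the value Condor actually uses.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Hypothetical, much-simplified reader for "NAME = value" lines in the style of
// condor_config. Blank lines, lines starting with '#', and lines without '=' are
// skipped; a later definition of a name replaces an earlier one. $(NAME) references
// are expanded when a value is looked up. Case-insensitive names, line continuations
// and self-references such as STARTD_EXPRS = $(STARTD_EXPRS), X are not handled.
class ConfigSketch {
public:
    void parse(const std::string& text) {
        std::istringstream in(text);
        std::string line;
        while (std::getline(in, line)) {
            const std::string::size_type eq = line.find('=');
            if (line.empty() || line[0] == '#' || eq == std::string::npos) continue;
            vars_[trim(line.substr(0, eq))] = trim(line.substr(eq + 1));
        }
    }

    // Value of `name` with $(NAME) references expanded; unknown names expand to "".
    // The depth limit stops runaway recursion on circular definitions.
    std::string get(const std::string& name, int depth = 0) const {
        const auto it = vars_.find(name);
        if (it == vars_.end() || depth > 10) return "";
        const std::string& value = it->second;
        std::string out;
        std::string::size_type pos = 0;
        while (pos < value.size()) {
            const std::string::size_type start = value.find("$(", pos);
            const std::string::size_type end =
                start == std::string::npos ? start : value.find(')', start);
            if (end == std::string::npos) {  // no complete reference left
                out += value.substr(pos);
                break;
            }
            out += value.substr(pos, start - pos);
            out += get(value.substr(start + 2, end - start - 2), depth + 1);
            pos = end + 1;
        }
        return out;
    }

private:
    static std::string trim(const std::string& s) {
        const char* ws = " \t\r";
        const std::string::size_type b = s.find_first_not_of(ws);
        if (b == std::string::npos) return "";
        const std::string::size_type e = s.find_last_not_of(ws);
        return s.substr(b, e - b + 1);
    }

    std::map<std::string, std::string> vars_;
};
```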

- If you have MIDAS integration, allow Midas to run Condor commands by creating a link to /root/condor/etc/condor_config in /home/condor:

cd /home/condor
ln -s /root/condor/etc/condor_config condor_config

Configuring an Executor/Submitter in Unix

The files that allow the server to also be used as a Condor submitter/executor were updated automatically when the installation script condor_install ran. Nevertheless, you still need to update its configuration file.

- Edit the node's condor_config.local file and update the line shown below:

vi /home/condor/condor_config.local

CONDOR_ADMIN = email@website.com

If the installation went well, UID_DOMAIN and FILESYSTEM_DOMAIN should already be set to website.com.

Windows

The official documentation on how to install Condor in Windows can be found here. Below we describe our experience installing Condor in Windows 7.

- Download the Windows install MSI, run it, installing to "C:/condor".

- Accept the license agreement.

- Decide if you are installing a central controller or a submit/execute node

- If installing a Central Controller, then select "create a new central pool" and set the name of the pool

- Otherwise select "Join an existing Pool" and enter the fully qualified hostname of the central manager.

- Decide whether the machine should be a submitter node, and select the appropriate option.

- Decide when Condor should run jobs, if the machine will be an executor.

- Decide what happens to jobs when the machine stops being idle.

- For accounting domain enter your domain (e.g. yourdomaininternal.com)

- For Email settings (I skipped this by clicking Next)

- For Java settings (I skipped this by clicking Next, as we weren't using Java)

Set the following when prompted:

Host Permission Settings:
hosts with read: *
hosts with write: *
hosts with administrator access: $(FULL_HOSTNAME)
enable vm universe: no
enable hdfs support: no

When asked if you want a custom install or install, choose install. This will install condor to C:\condor.

The install will ask you to reboot your machine. If you want to access the condor command line programs from anywhere on your system, add C:\condor\bin to your system's PATH environment variable.

When running Condor commands, start a Cygwin or cmd prompt with elevated (administrator) privileges.

After the install, I could see the condor system by running

condor_status

and this helped me fix some problems. At first, condor_status gave me "unable to resolve COLLECTOR_HOST". There are many helpful log files to review in C:\condor\log. The first one I looked at was .master_address. The IP address listed there was incorrect: my machine has multiple IP addresses, and I needed to specify the IP address of my wired connection, which is on the same subnet as my COLLECTOR_HOST. On your machine, the address might instead be a localhost loopback address like 127.0.0.1, or simply an IP you do not want Condor to use.

I shut down condor, right clicked on C:/condor in a Windows explorer, turned off "read only" and set permissions to allow for writing. Then I edited the file "c:/condor/condor_config.local" which started out empty, so that it could pick up some replacement values (some of them didn't seem to be set properly during the install). These values are:

NETWORK_INTERFACE = <IP address>

UID_DOMAIN = *.yourdomaininternal.com

FILESYSTEM_DOMAIN = *.yourdomaininternal.com

COLLECTOR_NAME = PoolName

ALLOW_READ = *

ALLOW_WRITE = *

# Choose one of the following:

#

# For a submit/execute node:

DAEMON_LIST = MASTER, SCHEDD, STARTD

# For a central collector host and submit/execute node:

DAEMON_LIST = MASTER, COLLECTOR, NEGOTIATOR, SCHEDD, STARTD, KBDD

TRUST_UID_DOMAIN = True

START = True

You may want to add DEFAULT_DOMAIN_NAME = yourdomaininternal.com if your machine comes up in Condor without a domain name.

Then I restarted Condor. If you run condor_status and see that the Windows machine is in the Owner state rather than Unclaimed, make sure you have added START = True. However, this may not be the best configuration for a Windows workstation that is in use. Additional configuration is probably needed to keep Condor jobs off the machine while a person is physically using it.

At this point I was able to get one of the Condor Windows examples working, but with a bit of tweaking for Windows 7.

Here is the batch file contents for the actual job (printname.bat)

@echo off
echo Here is the output from "net USER" :
net USER

And here is the printname.sub Condor submission file, which I submitted with

condor_submit printname.sub

universe = vanilla
environment = path=c:\Windows\system32
executable = printname.bat
output = printname.out
error = printname.err
log = printname.log
queue

Useful Condor Commands on Windows

To run these commands, get a command prompt or Cygwin terminal, right click the icon to start it up, and click "run with elevated privileges" or "run as administrator".

condor_master runs as a service on Windows, which controls the other daemons.

To stop condor

net stop condor

To start condor

net start condor

Before you can submit a job to Condor on Windows, you'll need to store your user's credentials (password). Run

condor_store_cred add

then enter your password.

Running Condor

The official user's manual on how to perform distributed computing with Condor is here.

- Run the Condor master

condor_master

- Assuming that during installation you set the type to manager,execute,submit (the default), run the following command

ps -e | egrep condor_

- You should get something similar to:

1063 ? 00:00:00 condor_master
1064 ? 00:00:00 condor_collecto
1065 ? 00:00:00 condor_negotiat
1066 ? 00:00:00 condor_schedd
1067 ? 00:00:00 condor_startd
1068 ? 00:00:00 condor_procd

- If you run the command ps -e | egrep condor_ just after you started condor, you may also see the following line

1077 ? 00:00:00 condor_starter

- Check the status

kitware@rigel:~$ condor_status

Name         OpSys  Arch    State      Activity  LoadAv  Mem   ActvtyTime
slot1@rigel  LINUX  X86_64  Unclaimed  Idle      0.010   1006  0+00:10:04
slot2@rigel  LINUX  X86_64  Unclaimed  Idle      0.000   1006  0+00:10:05

              Total  Owner  Claimed  Unclaimed  Matched  Preempting  Backfill
X86_64/LINUX      2      0        0          2        0           0         0
       Total      2      0        0          2        0           0         0

- Setup condor to automatically startup

cp /root/condor/etc/example/condor.boot /etc/init.d/

- Update the MASTER parameter in condor.boot to match your current setup

vi /etc/init.d/condor.boot

MASTER=/root/condor/sbin/condor_master

- Add the condor.boot service to all runlevels

kitware@rigel:~$ update-rc.d condor.boot defaults
/etc/rc0.d/K20condor.boot -> ../init.d/condor.boot
/etc/rc1.d/K20condor.boot -> ../init.d/condor.boot
/etc/rc6.d/K20condor.boot -> ../init.d/condor.boot
/etc/rc2.d/S20condor.boot -> ../init.d/condor.boot
/etc/rc3.d/S20condor.boot -> ../init.d/condor.boot
/etc/rc4.d/S20condor.boot -> ../init.d/condor.boot
/etc/rc5.d/S20condor.boot -> ../init.d/condor.boot

A simple example demonstrating the use of Condor

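#include <unistd.h>
#include <stdio.h>

int main( int argc, char** argv )
{
    /* guard against a missing argument instead of dereferencing argv[1] blindly */
    if ( argc < 2 ) {
        fprintf( stderr, "usage: %s message\n", argv[0] );
        return 1;
    }
    printf( "%s\n", argv[1] );
    fflush( stdout );
    sleep( 30 );
    return 0;
}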

This program prints the command-line argument it is given, waits 30 seconds, then exits.

Save this file as foo.c, then compile it with static linking (note the -static flag):

gcc foo.c -o foo -static

Create a condor job description, saving the file as condorjob:

universe = vanilla
executable = foo
should_transfer_files = YES
when_to_transfer_output = ON_EXIT
log = condorjob.log
error = condorjob.err
output = condorjob.out
arguments = "helloworld"
Queue

then submit the job to condor:

condor_submit condorjob

After this job finishes, you should have three files in the submission directory:

condorjob.err (contains the standard error, empty in this case)

condorjob.out (should contain standard output, in this case "helloworld")

condorjob.log (should contain info about the execution of the job, such as the machine that submitted the job and the machine that executed the job)

If you want to test this job on multiple slots (say 2 at once, so you can see how Condor executes the job on multiple execute resources), you can change the condorjob file to look like this:

universe = vanilla
executable = foo
should_transfer_files = YES
when_to_transfer_output = ON_EXIT
log = condorjob1.log
error = condorjob1.err
output = condorjob1.out
arguments = "helloworld1"
Queue

log = condorjob2.log
error = condorjob2.err
output = condorjob2.out
arguments = "helloworld2"
Queue
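Writing one group of log/error/output/arguments lines per job gets tedious as the number of jobs grows. The hypothetical `makeSubmitFile` below generates the same layout as the file above for any number of jobs.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical generator: shared commands are written once, then each job
// overrides log/error/output/arguments before its own "Queue" statement.
std::string makeSubmitFile(const std::string& executable, int jobs) {
    std::ostringstream out;
    out << "universe = vanilla\n"
        << "executable = " << executable << "\n"
        << "should_transfer_files = YES\n"
        << "when_to_transfer_output = ON_EXIT\n";
    for (int i = 1; i <= jobs; ++i) {
        out << "log = condorjob" << i << ".log\n"
            << "error = condorjob" << i << ".err\n"
            << "output = condorjob" << i << ".out\n"
            << "arguments = \"helloworld" << i << "\"\n"
            << "Queue\n";
    }
    return out.str();
}
```

Condor can also do this within the submit file itself. Using the `$(Process)` macro with a count on the queue statement, such as `log = condorjob$(Process).log` followed by `queue 2`, numbers the jobs starting from 0.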

We had a case where we had 6 slots, 2 were 32 bit with Arch=INTEL, 4 were 64 bit with Arch=X86_64 (but we were unaware of the bit difference at first). We ran 6 jobs and then were wondering why they would only execute on the submitting machine. So we changed the condorjob file to specify a certain architecture by including

Requirements = Arch == "INTEL"

and submitted it from the X86_64 machine. This told Condor to execute only on machines with the INTEL architecture, so it did not try to run the job on the X86_64 submitting machine. We then saw an error in condorjob1.log saying

Exec format error

and we realized we had tried to run an executable compiled for the wrong architecture. I've included this story in case it helps with debugging.

Additional Information

Troubleshooting Condor

Our experience with Condor involved a lot of errors that we had to systematically understand and overcome. Here are some lessons from our experience.

- Be sure that your executable is statically linked.

- For Unix submission/execution, we found that we needed to run jobs as a user that exists on all machines in the Condor grid, because we do not have shared Unix users on our network. In our case this user was condor.

Condor supplies a number of utility programs and log files. These are extremely helpful in understanding and correcting problems. Our setups have Condor log files in /home/condor/localcondor/log and C:\condor\log.

condor_status

Allows you to check the status of the Condor Pool. This is the first command line program you should get working, as it will help you debug other problems.

condor_q

Shows the jobs you have submitted to Condor that are still in the queue, giving a cluster ID and process ID for each.

condor_q -analyze CID.PID
condor_q -better-analyze CID.PID

When given the cluster ID and process ID, these will tell you how many execution machines matched each of your requirements for the job.

condor_config_val <CONDOR_VARIABLE>

Will tell you the value of that CONDOR_VARIABLE for your condor setup. This can help maintain your sanity.

condor_rm CID.PID

Will remove the job with cluster ID CID and process ID PID from your Condor pool. Useful for killing Held or Idle jobs.

The Condor daemons are described below. For more details on these daemons, see section "3.1.2 The Condor Daemons" in the Condor Manual.

condor_master

On any machine, no matter its configuration, there will be a condor_master daemon. This process will start and stop all other condor daemons. condor_master will write a MasterLog and have a .master_address file. Be sure that the .master_address contains the correct IP address. If the correct IP address doesn't appear, set the correct value in the parameter

NETWORK_INTERFACE = <desired IP>

in your condor_config.local file. Condor may have picked a different IP than you wanted, perhaps the local loopback address 127.0.0.1, or a wireless adapter instead of the Ethernet one. The value you set must be the address of an interface the machine actually has.
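Below is a sketch of checking the address in .master_address. It assumes the file holds a "sinful string" of the form `<IP:port>`; the port in the example is illustrative. The sketch extracts the IP and flags the IPv4 loopback range 127.0.0.0/8. Both helper names are hypothetical.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper. Assumption: the address looks like <10.171.1.124:40123>
// (IP, then port, inside angle brackets); anything else yields "".
std::string ipFromSinful(const std::string& sinful) {
    const std::string::size_type begin = sinful.find('<');
    if (begin == std::string::npos) return "";
    const std::string::size_type colon = sinful.find(':', begin);
    if (colon == std::string::npos) return "";
    return sinful.substr(begin + 1, colon - begin - 1);
}

// True for the IPv4 loopback range 127.0.0.0/8, which other machines cannot reach.
bool isLoopback(const std::string& ip) {
    return ip.compare(0, 4, "127.") == 0;
}
```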

condor_startd

This daemon runs on execution nodes, and represents a machine ready to do work for Condor. Its log file describes its requirements. This daemon starts up the condor_starter daemon.

condor_starter

This daemon runs on execution nodes, and is responsible for starting up actual execution processes, and logging their details.

condor_schedd

This daemon runs on submit nodes and holds the data about submitted jobs. It tracks the queue of jobs and tries to obtain resources for all of them. When a submitted job runs, this daemon spawns a condor_shadow daemon.

condor_shadow

This daemon runs on the submit machine while a job is executing. It handles system calls that need to be executed on the submitting machine on behalf of the job. There is one condor_shadow process for each running job from a submit machine. On a machine with a large number of running jobs, the memory or other resources consumed by the shadow daemons can therefore become a limitation.

condor_collector

This daemon runs on the Pool Collector machine, and is responsible for keeping track of resources within the Pool. All nodes in the pool let this daemon on the Pool Collector machine know that they exist, what services they support, and what requirements they have.

condor_negotiator

This daemon typically runs on the Pool Collector machine, and negotiates between submitted jobs and execute nodes to match each job with a machine to run it. Log files of interest include NegotiatorLog and MatchLog.

condor_kbdd

This daemon detects user activity on an execute node, so that Condor knows whether to allow a job to run or to hold it back because a human user is currently working on the machine.

The right processor architecture

The initial installation was done with the package condor-7.2.0-linux-ia64-rhel3-dynamic.tar.gz, which is built for IA-64, the 64-bit Intel Itanium architecture. IA-64 is a different architecture from x86-64, which most 64-bit Intel and AMD processors use, so this package does not cover all 64-bit Intel processors.

While trying to run condor_master, the shell returned the error message "cannot execute binary file".

Using the program readelf, it's possible to extract the header of an executable and understand if a given executable could run on a given platform.

kitware@rigel:~/$ readelf -h ~/condor-7.2.0_IA64/sbin/condor_master
ELF Header:
  Magic: 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00
  Class: ELF64
  Data: 2's complement, little endian
  Version: 1 (current)
  OS/ABI: UNIX - System V
  ABI Version: 0
  Type: EXEC (Executable file)
  Machine: Intel IA-64
  Version: 0x1
  Entry point address: 0x40000000000bf3e0
  Start of program headers: 64 (bytes into file)
  Start of section headers: 9382744 (bytes into file)
  Flags: 0x10, 64-bit
  Size of this header: 64 (bytes)
  Size of program headers: 56 (bytes)
  Number of program headers: 7
  Size of section headers: 64 (bytes)
  Number of section headers: 32
  Section header string table index: 31

kitware@rigel:~$ readelf -h /bin/ls
ELF Header:
  Magic: 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00
  Class: ELF64
  Data: 2's complement, little endian
  Version: 1 (current)
  OS/ABI: UNIX - System V
  ABI Version: 0
  Type: EXEC (Executable file)
  Machine: Advanced Micro Devices X86-64
  Version: 0x1
  Entry point address: 0x4023c0
  Start of program headers: 64 (bytes into file)
  Start of section headers: 104384 (bytes into file)
  Flags: 0x0
  Size of this header: 64 (bytes)
  Size of program headers: 56 (bytes)
  Number of program headers: 8
  Size of section headers: 64 (bytes)
  Number of section headers: 28
  Section header string table index: 27

kitware@rigel:~$ readelf -h ./condor-7.2.0/sbin/condor_master
ELF Header:
  Magic: 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00
  Class: ELF64
  Data: 2's complement, little endian
  Version: 1 (current)
  OS/ABI: UNIX - System V
  ABI Version: 0
  Type: EXEC (Executable file)
  Machine: Advanced Micro Devices X86-64
  Version: 0x1
  Entry point address: 0x4b9450
  Start of program headers: 64 (bytes into file)
  Start of section headers: 4553256 (bytes into file)
  Flags: 0x0
  Size of this header: 64 (bytes)
  Size of program headers: 56 (bytes)
  Number of program headers: 8
  Size of section headers: 64 (bytes)
  Number of section headers: 31
  Section header string table index: 30

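Comparing the outputs, the Machine field of the IA-64 condor_master (Intel IA-64) differs from that of /bin/ls and of the x86-64 condor_master (Advanced Micro Devices X86-64). This shows that Intel IA-64 is the wrong architecture for this machine.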
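readelf's Machine line comes from the e_machine field of the ELF header (bytes 18 and 19). A value of 50 means IA-64 and 62 means x86-64. The sketch below reads that field directly and handles only little-endian files, like the ones above. `elfMachine` and `elfMachineOfFile` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// In the ELF header, e_ident occupies bytes 0-15 (byte 5 = data encoding,
// 1 = little-endian), e_type bytes 16-17 and e_machine bytes 18-19.
const std::uint16_t kEmIa64 = 50;    // EM_IA_64: Intel Itanium
const std::uint16_t kEmX86_64 = 62;  // EM_X86_64: AMD64 / Intel 64

// Returns e_machine, or -1 if the bytes are not a little-endian ELF header.
int elfMachine(const std::vector<unsigned char>& header) {
    if (header.size() < 20 || header[0] != 0x7f || header[1] != 'E' ||
        header[2] != 'L' || header[3] != 'F' || header[5] != 1) {
        return -1;
    }
    return header[18] | (header[19] << 8);
}

// Reads the first 20 bytes of a file and reports its e_machine (-1 on failure).
int elfMachineOfFile(const std::string& path) {
    std::ifstream in(path, std::ios::binary);
    std::vector<unsigned char> header(20);
    if (!in.read(reinterpret_cast<char*>(header.data()),
                 static_cast<std::streamsize>(header.size()))) {
        return -1;
    }
    return elfMachine(header);
}
```

On the machine above, the IA-64 condor_master would be expected to report 50, and /bin/ls to report 62.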

Be sure that your executable is statically linked.

For Unix submission/execution, We found that we needed to run jobs as a user that exists on all machines on the Condor grid, because we do not have shared Unix users on our network. In our case this user was Condor.

Condor supplies a number of utility programs and log files, which are extremely helpful for understanding and correcting problems. In our setups the Condor log files are in /home/condor/localcondor/log (Unix) and C:\condor\log (Windows).

Links

- Detailed Condor documentation is also available on the website here

MPICH2 on Windows

Here we record our experience using MPICH2 on Windows, working towards running MPICH2 jobs under Condor, ideally in a mixed Windows and Linux environment, or otherwise in a homogeneous one.

This will have to be cleaned up to make more sense as we gain more experience.

MPICH2 Environment on Windows 7

First install MPICH2; I installed version 1.3.2p1, the Windows 32-bit binary. Then add the MPICH2\bin directory to your system PATH, and add mpiexec.exe and smpd.exe to the list of exceptions in the Windows Firewall.

I created the same user/password combination (with administrative rights) on two different Windows 7 machines. All work was done logged in as that user.

The following was performed in a Windows CMD prompt run with Administrative Privileges (right click on the CMD executable and run it as an Administrator).

I reset the smpd passphrase by first removing the smpd service

smpd -remove

then set the same smpd passphrase on both machines

smpd -install -phrase mypassphrase

and could then check the status using

smpd -status

I had to register my user credentials on both machines with

mpiexec -register

I then accepted the suggested user by pressing Enter and entered that user's Windows account password.

You can check which user you are with

mpiexec -whoami

And now you should be able to validate with

mpiexec -validate

It will ask you for an authentication password for smpd; this is the passphrase (mypassphrase) you set above. If everything is correct at this point, the result is SUCCESS.

To test this, you can run the cpi.exe example application in the MPICH2\examples directory (I ran it with 4 processes since my machine has 4 cores):

mpiexec -n 4 full_path_to\cpi.exe

You can also check that both machines participate in a computation by running the hostname command through mpiexec, giving the IP address of each machine and the number of processes it should run. The output should include the hostnames of both machines.

mpiexec -hosts 2 ip_1 #_cores_1 ip_2 #_cores_2 hostname

Creating an MPI program on Windows

I used MS Visual Studio Express 2008 (MSVSE08) to build a C++ executable.

Here is a very simple working C++ example program:

#include "stdafx.h"
#include <iostream>
#include "mpi.h"
using namespace std;

int main(int argc, char* argv[])
{
    // initialize the MPI world
    MPI::Init(argc, argv);

    // get this process's rank
    int rank = MPI::COMM_WORLD.Get_rank();

    // get the total number of processes in the computation
    int size = MPI::COMM_WORLD.Get_size();

    // print out where this process ranks in the total
    std::cout << "I am " << rank << " out of " << size << std::endl;

    // Finalize the MPI world
    MPI::Finalize();
    return 0;
}

To compile this in MSVSE08, you must right click on the project file (not the solution file), then click properties, and make the following additions:

- Under C/C++ menu, Additional Include Directories property, add the full path to the MPICH2\include directory

- Under Linker/General menu, Additional Library Directories, add the full path to the MPICH2\lib directory

- Under Linker/Input menu, Additional Dependencies, add mpi.lib and cxx.lib

You can test your application using:

mpiexec -n 4 full_path_to\your.exe

To run your executable on two different machines, I had to use a Release build; I first tried a Debug build, but it caused problems on the second machine. The steps are:

- create the same directory on both machines (e.g. C:\mpich2work)

- copy the exe to this directory on both machines

- execute the exe on one machine, specifying both IPs and how many processes you want to run on each machine (I run as many processes as cores):

mpiexec -hosts 2 ip_1 #_cores_1 ip_2 #_cores_2 full_path_to_exe_on_both_machines
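The -hosts form of the command lists the number of hosts, then a host and process-count pair for each machine, then the program to run. A small C++ sketch that assembles that command line, with hypothetical function names and example IP addresses; it only formats the string and does not launch anything:

```cpp
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// One entry per machine: host (IP address or name) and the number of
// processes to start on it.
using HostSpec = std::pair<std::string, int>;

// Builds "mpiexec -hosts N host_1 procs_1 ... host_N procs_N program",
// the form used in the commands above.
std::string buildHostsCommand(const std::vector<HostSpec>& hosts,
                              const std::string& program)
{
    std::ostringstream cmd;
    cmd << "mpiexec -hosts " << hosts.size();
    for (const HostSpec& h : hosts)
        cmd << ' ' << h.first << ' ' << h.second;
    cmd << ' ' << program;
    return cmd.str();
}

// Total number of MPI processes the command starts across all hosts.
int totalProcesses(const std::vector<HostSpec>& hosts)
{
    int total = 0;
    for (const HostSpec& h : hosts)
        total += h.second;
    return total;
}
```

With one entry per machine set to its core count, as in the text, totalProcesses gives the size that MPI::COMM_WORLD.Get_size() should report in each process.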

MPICH2 and Condor on Windows

To configure MPI over Condor, I set up my Windows 7 laptop as a dedicated Condor scheduler and execute machine, following the instructions at this link:

https://condor-wiki.cs.wisc.edu/index.cgi/wiki?p=HowToConfigureMpiOnWindows

I turned off smpd.exe as a service, since Condor will manage it:

smpd.exe -remove

I then created the following MPI example program and ran it with a MachineCount of 2 and, separately, of 4 (on my laptop, which has 4 cores and was running Condor locally). The program assumes an even number of processes. It shows examples of both blocking (Send/Recv) and non-blocking (Isend/Irecv) communication, which the comments in the code call synchronous and asynchronous.

Resources that I found to be helpful in developing this program are:

http://www-uxsup.csx.cam.ac.uk/courses/MPI/

https://computing.llnl.gov/tutorials/mpi/

#include "stdafx.h"
#include <iostream>
#include "mpi.h"
using namespace std;

int main(int argc, char *argv[])
{
    MPI::Init(argc, argv);

    int rank = MPI::COMM_WORLD.Get_rank();
    int size = MPI::COMM_WORLD.Get_size();
    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int namelen; // receives the length of processor_name
    MPI::Get_processor_name(processor_name, namelen);

    int buf[1];
    int numElements = 1;
    // initialize buffer with rank
    buf[0] = rank;

    // example of synchronous (blocking) communication
    // have even ranks send to odd ranks first
    // assumes an even number of processes
    int syncTag = 123;
    if (rank % 2 == 0)
    {
        // send to next higher rank
        int dest = rank + 1;
        std::cout << processor_name << "." << rank << " will send to " << dest << ", for now buf[0]= " << buf[0] << std::endl;
        MPI::COMM_WORLD.Send(buf, numElements, MPI::INT, dest, syncTag);
        // now wait for dest to send back
        // even though dest is the param, in the Recv call it is used as the source
        MPI::COMM_WORLD.Recv(buf, numElements, MPI::INT, dest, syncTag);
        std::cout << processor_name << "." << rank << ", after receiving from " << dest << ", buf[0]= " << buf[0] << std::endl;
    }
    else
    {
        // source is next lower rank
        int source = rank - 1;
        std::cout << processor_name << "." << rank << ", will receive from " << source << ", for now buf[0]= " << buf[0] << std::endl;
        MPI::COMM_WORLD.Recv(buf, numElements, MPI::INT, source, syncTag);
        std::cout << processor_name << "." << rank << ", after receiving from " << source << ", buf[0]= " << buf[0] << std::endl;
        // send rank rather than buffer, just to be sure
        // even though param is source, in Send call it is for dest
        MPI::COMM_WORLD.Send(&rank, numElements, MPI::INT, source, syncTag);
    }

    // now for asynchronous (non-blocking) communication
    std::cout << processor_name << "." << rank << " switching to asynchronous" << std::endl;
    int asyncTag = 321;
    int leftRank;
    if (rank == 0)
    {
        leftRank = size - 1;
    }
    else
    {
        leftRank = rank - 1;
    }
    int rightRank;
    if (rank == size - 1)
    {
        rightRank = 0;
    }
    else
    {
        rightRank = rank + 1;
    }

    // everyone sends to the leftRank, and receives from the rightRank
    // if this were synchronous, would be in danger of deadlocking because of circular waits
    // use separate send and receive buffers: a buffer passed to Isend must not
    // be modified until that send has completed
    int sendBuf[1];
    int recvBuf[1];
    // reset buffers to rank
    sendBuf[0] = rank;
    recvBuf[0] = rank;
    std::cout << processor_name << "." << rank << " will send to " << leftRank << ", for now buf[0]= " << sendBuf[0] << std::endl;
    MPI::Request sendReq = MPI::COMM_WORLD.Isend(sendBuf, numElements, MPI::INT, leftRank, asyncTag);
    MPI::Request recvReq = MPI::COMM_WORLD.Irecv(recvBuf, numElements, MPI::INT, rightRank, asyncTag);
    // wait on the receipt
    MPI::Status status;
    recvReq.Wait(status);
    std::cout << processor_name << "." << rank << " after receiving from " << rightRank << ", buf[0]= " << recvBuf[0] << std::endl;
    // complete the send as well before shutting MPI down
    sendReq.Wait();

    MPI::Finalize();
    return 0;
}
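The neighbour logic in the program's two if/else blocks is ordinary modular arithmetic, and the pairing in the blocking exchange (even rank with rank + 1, odd rank with rank - 1) amounts to flipping the lowest bit of the rank. A plain C++ sketch of that arithmetic, with hypothetical helper names and no MPI dependency, which could stand in for those blocks:

```cpp
// Neighbour ranks in the ring used by the asynchronous part of the program;
// equivalent to the if/else blocks that compute leftRank and rightRank.
int leftNeighbour(int rank, int size)  { return (rank - 1 + size) % size; }
int rightNeighbour(int rank, int size) { return (rank + 1) % size; }

// Partner in the blocking exchange: even ranks pair with rank + 1 and odd
// ranks with rank - 1, i.e. the lowest bit is flipped. Like the program,
// this assumes an even number of processes.
int pairPartner(int rank) { return rank ^ 1; }
```

Because every rank sends its own rank to its left neighbour, the value each rank receives in the asynchronous step should equal rightNeighbour(rank, size); with four processes, for example, rank 3 receives 0.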

I then created a two-machine Condor parallel universe grid, using the same user/password combination on both machines; this user had Administrative rights. My 4-core Windows 7 Professional laptop (X86_64,WINNT61) was both the CONDOR_HOST and the DedicatedScheduler. Assuming this laptop is called clavicle in the shoulder.com domain, here are the important configuration parameters in its condor_config.local file:

CONDOR_HOST=clavicle.shoulder.com
SMPD_SERVER = C:\Program Files (x86)\MPICH2\bin\smpd.exe
SMPD_SERVER_ARGS = -p 6666 -d 1
DedicatedScheduler = "DedicatedScheduler@clavicle.shoulder.com"
STARTD_EXPRS = $(STARTD_EXPRS), DedicatedScheduler
START = TRUE
SUSPEND = FALSE
PREEMPT = FALSE
KILL = FALSE
WANT_SUSPEND = FALSE
WANT_VACATE = FALSE
CONTINUE = TRUE
RANK = 0
DAEMON_LIST = MASTER, SCHEDD, STARTD, COLLECTOR, NEGOTIATOR, SMPD_SERVER

The second machine, a 2 core Windows XP desktop called scapula (INTEL,WINNT51) was a dedicated machine with the following important parameters in its condor_config.local file:

CONDOR_HOST=clavicle.shoulder.com
SMPD_SERVER = C:\Program Files\MPICH2\bin\smpd.exe
SMPD_SERVER_ARGS = -p 6666 -d 1
DedicatedScheduler = "DedicatedScheduler@clavicle.shoulder.com"
STARTD_EXPRS = $(STARTD_EXPRS), DedicatedScheduler
START = TRUE
SUSPEND = FALSE
PREEMPT = FALSE
KILL = FALSE
WANT_SUSPEND = FALSE
WANT_VACATE = FALSE
CONTINUE = TRUE
RANK = 0
DAEMON_LIST = MASTER, STARTD, SMPD_SERVER

Note that it points to clavicle both as CONDOR_HOST and as the DedicatedScheduler, and that its path to smpd.exe does not contain (x86).

MPICH2 is installed in different locations: C:\Program Files\MPICH2 on an (INTEL,WINNT51) machine and C:\Program Files (x86)\MPICH2 on an (X86_64,WINNT61) machine. Because the batch file referenced by my Condor submit file needs the path to mpiexec.exe, and that batch file is the same on both machines, I copied mpiexec.exe to the work directory I used on all machines, C:\mpich2work.

After setting up this configuration, I rebooted both machines so that Condor started with it. At this point, the command

net stop condor

no longer returns correctly, since Condor is managing the smpd.exe service now and does not know how to stop that service.

Starting from the submit file described on the Condor wiki page, I adapted my own submit file for the MPI executable above, called mpiwork.exe, as follows:

universe = parallel
executable = mp2script.bat
arguments = mpiwork.exe
machine_count = 6
output = out.$(NODE).log
error = error.$(NODE).log
log = work.$(NODE).log
should_transfer_files = yes
when_to_transfer_output = on_exit
transfer_input_files = mpiwork.exe
Requirements = ((Arch == "INTEL") && (OpSys == "WINNT51")) || ((Arch == "X86_64") && (OpSys == "WINNT61"))
queue

and my mp2script.bat file was adapted to be:

set _CONDOR_PROCNO=%_CONDOR_PROCNO%
set _CONDOR_NPROCS=%_CONDOR_NPROCS%
REM If not the head node, just sleep forever
if not [%_CONDOR_PROCNO%] == [0] copy con nothing
REM Set this to the bin directory of MPICH installation
set MPDIR="C:\mpich2work"
REM run the actual mpijob
%MPDIR%\mpiexec.exe -n %_CONDOR_NPROCS% -p 6666 %*
exit 0

The change is that MPDIR points to a directory known to contain mpiexec.exe on both machines, since I copied that file there on both machines as described above.
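The decision mp2script.bat makes on each node can be spelled out in C++ as below, purely to illustrate the logic. Condor starts one copy of the script per node and sets _CONDOR_PROCNO (this node's number) and _CONDOR_NPROCS (the number of nodes); only node 0 launches mpiexec, and every other node just waits (the script's copy con nothing blocks on console input). nodeCommand and nodeCommandFromEnv are hypothetical names, and the sketch returns the command string instead of running it:

```cpp
#include <cstdlib>
#include <string>

// Command the given node runs, mirroring mp2script.bat: the head node
// (node 0) runs mpiexec with one process per node; any other node gets an
// empty string, meaning it only waits until the job ends.
// "-p 6666" is copied from the script; it presumably matches the
// "-p 6666" passed to smpd through SMPD_SERVER_ARGS.
std::string nodeCommand(const std::string& procno, const std::string& nprocs,
                        const std::string& mpdir, const std::string& program)
{
    if (procno != "0")
        return "";  // not the head node
    return mpdir + "\\mpiexec.exe -n " + nprocs + " -p 6666 " + program;
}

// Same decision, reading the node number and node count from the
// environment variables the script uses.
std::string nodeCommandFromEnv(const std::string& mpdir, const std::string& program)
{
    const char* procno = std::getenv("_CONDOR_PROCNO");
    const char* nprocs = std::getenv("_CONDOR_NPROCS");
    if (procno == nullptr || nprocs == nullptr)
        return "";  // not started as a node of a parallel universe job
    return nodeCommand(procno, nprocs, mpdir, program);
}
```

With machine_count = 6, _CONDOR_NPROCS is 6, so the head node starts six MPI processes while the other five nodes only hold their slots.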