ParaView/Catalyst/Overview

Revision as of 15:25, 28 July 2013 (edited by Andy.bauer)

Background

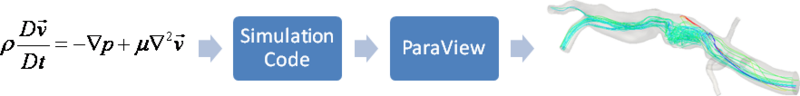

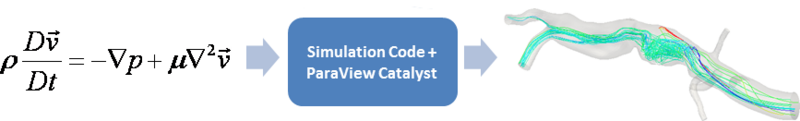

Several factors are driving the growth of simulations. The computational power of computer clusters is growing, while the price of individual computers is decreasing. Distributed computing techniques allow hundreds, or even thousands, of compute nodes to participate in a single simulation. The benefit of this computational power is that simulations are becoming more accurate and more useful for predicting complex phenomena. The downside is the enormous amount of data that must be saved and analyzed to determine the results of a simulation. Unfortunately, IO capabilities have not kept pace with processing power on these machines, so the ability to generate data has outpaced the ability to save and analyze it. This bottleneck limits how much we can benefit from improved computing resources. To minimize storage requirements, simulations save their state only infrequently, and this coarse temporal sampling makes it difficult to notice some complex behavior.

To get past this barrier, ParaView can be used to integrate concurrent analysis and visualization directly with simulation codes. This functionality is often referred to as co-processing, in situ processing, or co-visualization, and is available through ParaView Catalyst (previously called ParaView Co-Processing). The figures in this section show how the workflow differs when using ParaView Catalyst.

Technical Objectives

The main objective of the co-processing toolset is to integrate core data processing with the simulation to enable scalable data analysis, while also being simple to use for the analyst. The toolset has two main parts:

- An extensible and flexible library: ParaView Catalyst was designed to be flexible enough to be embedded in various simulation codes with relative ease and a minimal footprint. This flexibility is critical, because a library that requires a lot of effort to embed cannot be deployed successfully in a large number of simulations. The co-processing library is also easily extended, so users can deploy new analysis and visualization techniques to existing co-processing installations. The minimal footprint is achieved with the Catalyst configuration tools (see the directions for generating source and building), which reduce the number of ParaView and VTK libraries that a simulation code needs to link against.

- Configuration tools for ParaView Catalyst output: It is important for users to be able to configure the Catalyst output using graphical user interfaces that are part of their daily workflow.

Note: All of this must work for large data. The Catalyst library will often be used on a distributed system. For the largest simulations, visualizing the extracts may also require a distributed system (e.g., a visualization cluster).

Details

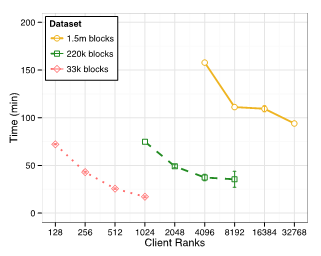

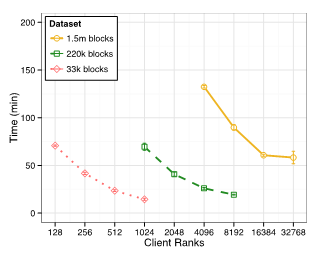

Using ParaView Catalyst is a fundamental change in the way that simulation results are obtained. The goal is to reduce the time needed to gain insight into the problem being simulated. The first figure in this section shows the computational time to perform a full workflow with Sandia's CTH simulation code for various problem sizes and process counts.

This time includes both the simulation run and the post-processing. The second figure shows the execution time to obtain the same results with CTH when using Catalyst for in situ analysis and visualization.

Note that as both the problem size and the number of processes increase, the benefits of using Catalyst become more apparent. This is largely because the computing system's resources are being stretched to their limits, where inefficiencies become more pronounced. One possible workflow that ParaView's co-processing tools enable is illustrated below.

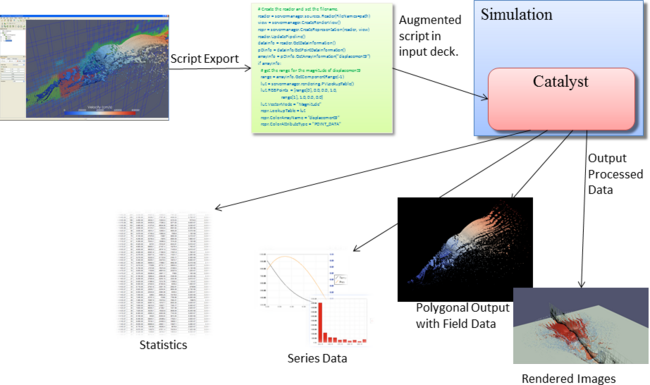

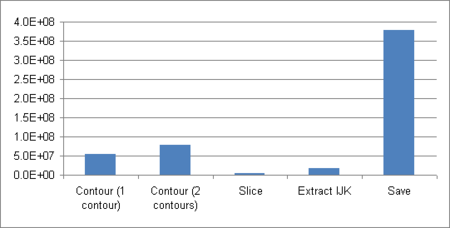

In this workflow, the user creates a Python script with ParaView's plugin for generating Catalyst co-processing scripts. The user can choose from a variety of outputs: extracted data such as polygonal output with field data, rendered images, plot information, and/or statistics. During the simulation run, Catalyst uses these Python scripts to output the information the simulation user wants. Typically, the extracted data is orders of magnitude smaller than the full data set. This is shown in the image below for several VTK filters on a relatively small problem.

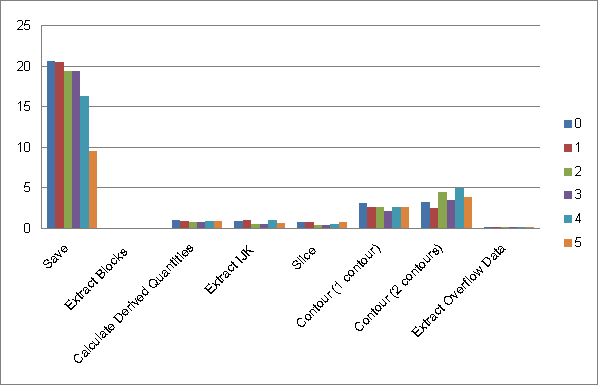
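The workflow described above can be illustrated with a small, self-contained Python sketch of the in situ control flow. It does not use ParaView. The names `simulate_step`, `extract_slice`, `coprocess`, and `run` are hypothetical. They stand in for the solver, a VTK filter, the adaptor hook that hands simulation data to Catalyst, and the simulation's main loop. After each time step the simulation calls the hook. The hook only does work every `frequency` steps (an assumption made for this sketch), and only a small extract (here, one row of a 2D field) is kept instead of the full data set.

```python
"""Hypothetical sketch of the in situ (co-processing) control flow.

None of these functions belong to ParaView or Catalyst; they only
illustrate how a simulation loop hands data to an analysis step.
"""
import math


def simulate_step(step, n):
    """Stand-in for one solver step: returns an n x n scalar field."""
    return [[math.sin(0.1 * (i + j + step)) for j in range(n)] for i in range(n)]


def extract_slice(field, row):
    """Stand-in for a VTK filter: keep one row instead of the whole field."""
    return list(field[row])


def coprocess(step, field, frequency, extracts):
    """Stand-in for the adaptor hook called after every solver step.

    Work (and output) only happens every `frequency` steps; on all other
    steps the hook returns immediately.
    """
    if step % frequency != 0:
        return
    extracts.append((step, extract_slice(field, row=len(field) // 2)))


def run(num_steps=10, n=64, frequency=5):
    """Main simulation loop with in situ analysis; returns the extracts."""
    extracts = []  # stands in for the small files Catalyst would write
    for step in range(num_steps):
        field = simulate_step(step, n)
        coprocess(step, field, frequency, extracts)
    return extracts


if __name__ == "__main__":
    extracts = run()
    print(len(extracts), len(extracts[0][1]), 64 * 64)  # 2 64 4096
```

With the defaults, the loop keeps two extracts (steps 0 and 5) of 64 values each, rather than two full 64 x 64 fields of 4,096 values each. Real Catalyst adaptors map the simulation's own data structures to VTK data. The example code listed under Important Links shows actual adaptors.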

The reduced file IO often also results in faster simulation runs. In certain cases it is faster for Catalyst to compute a desired extract and write it to disk than to write the full raw data to disk. This is shown in the figure below for a small 6-process run using various VTK filters.
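A simple cost model can help reason about when computing an extract in situ beats writing the raw data. The sketch below rests on its own assumption and is not the method behind the reported timings. It treats writing as purely bandwidth-bound and ignores any overlap between computation and IO. Under that model, the extract path wins when the in situ compute time is less than the IO time it saves, i.e. `compute_seconds < (full_bytes - extract_bytes) / bandwidth`. The function names and the numbers in the usage lines are hypothetical.

```python
"""Hypothetical cost model: write the full data set vs. compute and write an extract.

Assumption: writing s bytes takes s / bandwidth seconds (bandwidth-bound IO,
no overlap between computation and IO).
"""


def full_output_time(full_bytes, bandwidth):
    """Seconds to write the full raw data set."""
    return full_bytes / bandwidth


def extract_output_time(compute_seconds, extract_bytes, bandwidth):
    """Seconds to compute an extract in situ and then write only the extract."""
    return compute_seconds + extract_bytes / bandwidth


def extract_is_faster(full_bytes, extract_bytes, compute_seconds, bandwidth):
    """True if computing and writing the extract takes less time than writing
    the full data, i.e. compute_seconds < (full_bytes - extract_bytes) / bandwidth.
    """
    return (extract_output_time(compute_seconds, extract_bytes, bandwidth)
            < full_output_time(full_bytes, bandwidth))


if __name__ == "__main__":
    # Illustrative numbers only: 100 GB raw data, 1 GB extract, 1 GB/s to disk.
    print(extract_is_faster(1e11, 1e9, 30.0, 1e9))   # True  (31 s vs 100 s)
    print(extract_is_faster(1e11, 1e9, 120.0, 1e9))  # False (121 s vs 100 s)
```

In practice, filesystem contention at scale usually makes effective bandwidth per process lower as process counts grow. That effect would only enlarge the right-hand side of the inequality.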

Simulation User's Perspective

From the simulation user's perspective, the goal is to reduce the total time needed to gain insight into the problem being simulated.

Important Links

- The main page for ParaView Catalyst.

- The most complete information is available in the ParaView Catalyst User's Guide.

- Example code with samples from Python, C, C++ and Fortran for creating adaptors as well as examples of hard-coded C++ Catalyst pipelines.

- A tutorial on ParaView Catalyst along with sample files.

Information on ParaView's original co-processing tools is still available, but it applies to versions of ParaView before 4.0.