Proposals:Refactoring of optimization framework: Difference between revisions

= Refactor Optimization Framework Based on Available Function Derivative Information =

The proposed changes attempt to be backwards compatible with the current optimization framework.

The UML-like diagrams on this page were created with [http://live.gnome.org/Dia DIA]. The source files for these are available [http://www.itk.org/Wiki/images/5/58/OptimizationRefactoring.zip here].

{|style="border-top: 1px solid red; border-right: 1px solid red; border-bottom: 2px solid red; border-left: 1px solid red;"
|
#Currently ITK uses vnl as its numerical library, both for linear algebra and nonlinear optimization.
#The ITK classes expose this via various methods, including in very low-level functionality (e.g. itk::Matrix::GetInverse(), itk::Matrix::GetTranspose()).
#The goal of the refactoring proposed on this page is to decouple this tight relationship, so that the underlying numerical libraries can be replaced without requiring changes in code developed by ITK users.
|}

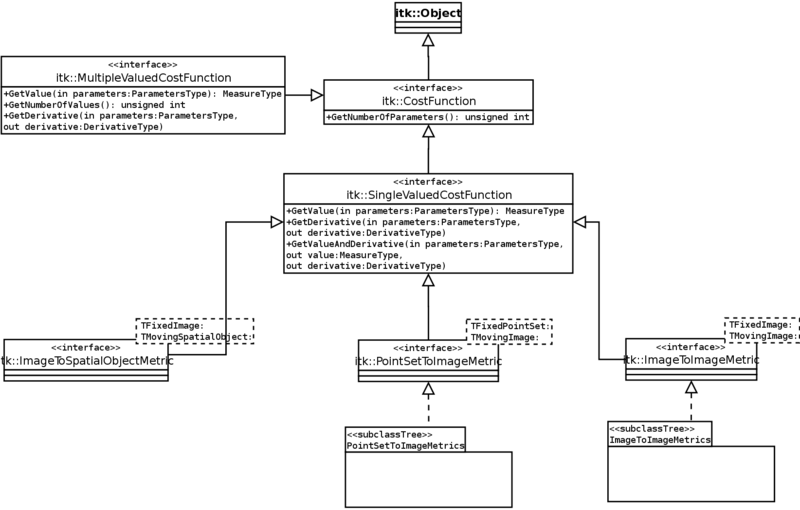

==Modification to cost function hierarchy==

Currently all cost functions are subclasses of itk::SingleValuedCostFunction or itk::MultipleValuedCostFunction. These two classes declare GetDerivative() as pure virtual, forcing the user to implement it. This assumes that the optimized function is differentiable. When this is not the case, developers either provide a bogus derivative, which is problematic if they unintentionally use an optimizer that utilizes this information, or, as often happens, throw an exception.

The current and proposed hierarchies are given below (these are not valid UML diagrams).

Current hierarchy:<br>

[[File:CurrentOptimizedFunctionHeirarchy.svg| 800px]]

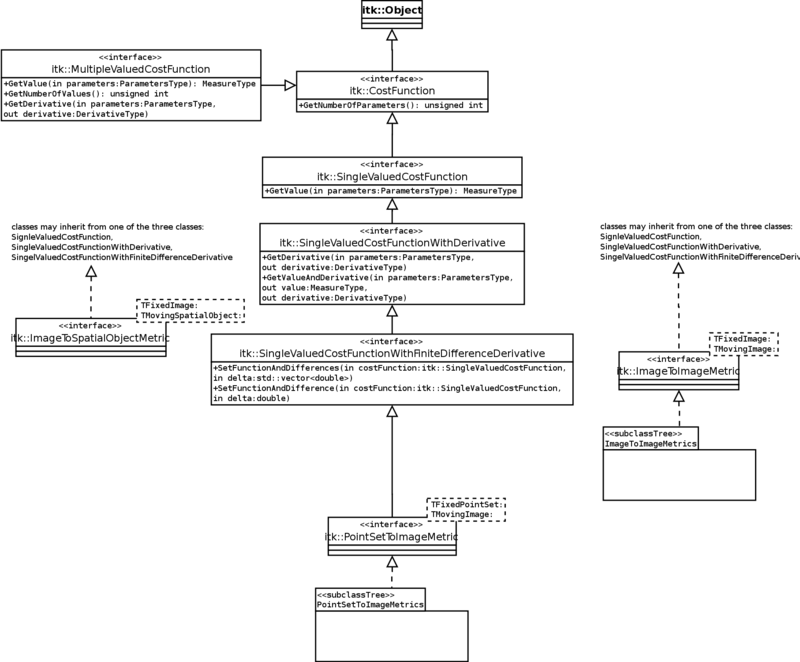

Proposed hierarchy:<br>

[[File:ProposedOptimizedFunctionHeirarchy.svg| 800px]]

The methods GetDerivative() and GetValueAndDerivative() move from SingleValuedCostFunction to the newly created SingleValuedCostFunctionWithDerivative. In addition, a decorator class, SingleValuedCostFunctionWithFiniteDifferenceDerivative, wraps a user-supplied SingleValuedCostFunction.

==Modification to optimizer hierarchy==

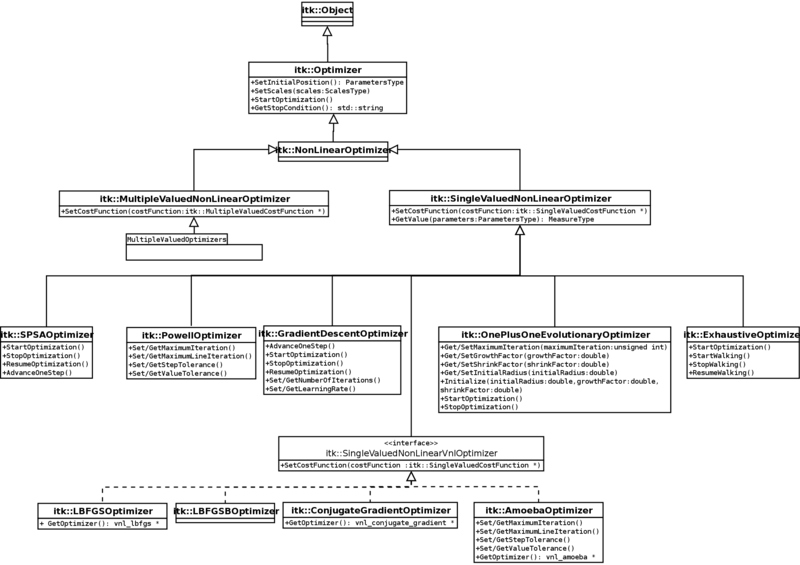

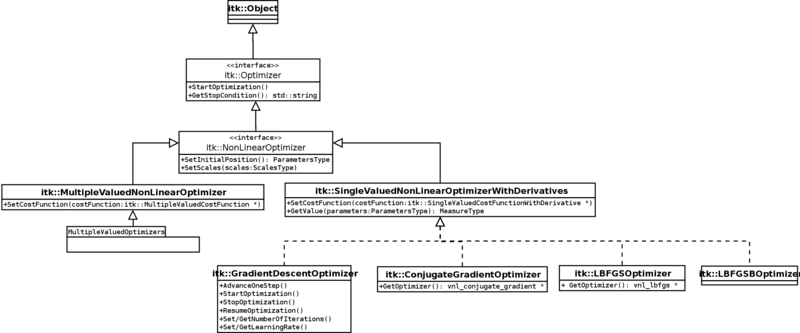

The optimizer hierarchy mirrors the hierarchy of cost functions. Add a direct subclass itk::SingleValuedWithDerivativeNonLinearOptimizer to itk::SingleValuedNonLinearOptimizer. Optimizers that use derivatives are descendants of this new class; all others descend directly from SingleValuedNonLinearOptimizer.

=Current hierarchy:=

[[File:CurrentOptimizerHeirarchy.svg| 800px]]

=Questions/Comments=

<u>itk::Optimizer</u><br>

The itk::Optimizer class seems to be intended for nonlinear optimization; if so, its functionality should be pushed down to itkNonLinearOptimizer. This assumes that itk::Optimizer is meant to be the superclass of all optimizers, including linear programming optimizers such as Dantzig's simplex method. Is that the intention?

<u>itkSingleValuedNonLinearOptimizer, itkMultipleValuedNonLinearOptimizer</u>:<br>

Why does SetCostFunction receive a raw pointer to the cost function and not a smart pointer?

<u>itkGradientOptimizer</u>:<br>

Why is the AdvanceOneStep method public?

The assertion that the scales vector has the appropriate size is performed in every step [ln. 189-196]. This makes sense if AdvanceOneStep is public; otherwise it is more appropriate to perform the assertion once, in ResumeOptimization [ln. 108].

<u>itkOnePlusOne</u>:<br>

The ivar m_Initialized is never used and should be removed.

Why does this class have both an Initialize method and corresponding Get/Set methods that set the same variables? I believe the ITK interface is via Get/Set and that the Initialize method should be removed.

<u>itkPowellOptimizer</u>:<br>

It is not clear why there are two methods that return the current cost function value, GetCurrentCost() and GetValue(), which differ only in constness.

<u>Optimizers inheriting from vnl</u>:<br>

Why is the internal vnl optimizer exposed via the GetOptimizer() method?

The classes are also inconsistent: the amoeba, conjugate gradient, and LBFGS optimizers have a GetOptimizer() method, whereas LBFGSB does not.

<u>itkConjugateGradientOptimizer</u>:<br>

The ivar m_OptimizerInitialized is not required. It is only referred to in SetCostFunction and in the constructor. It seems to be used to check whether the vnl optimizer was already allocated and, if so, to release and reallocate it. Instead:<br>
a. cxx file ln. 68 should be "if ( m_VnlOptimizer!=NULL )"<br>
b. remove cxx file ln. 76 "m_OptimizerInitialized = true;"<br>
c. remove cxx file ln. 30 "m_OptimizerInitialized = false;"<br>
d. remove h file ln. 80.

<u>itkSPSAOptimizer</u>:<br>

Why is AdvanceOneStep() a public method?

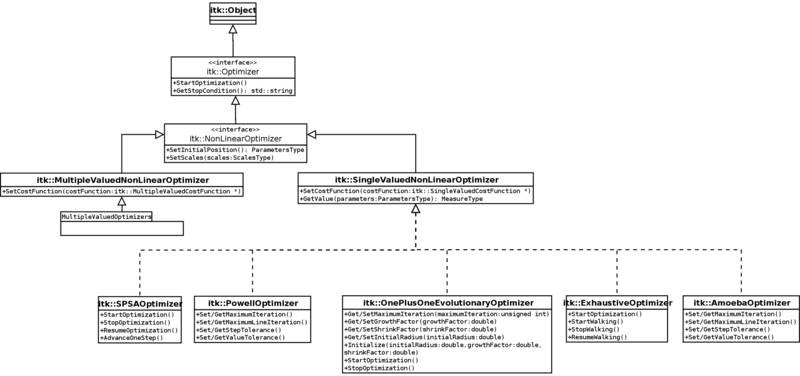

=Proposed hierarchy:=

The main difference is that the explicit references to vnl have been removed. ITK should provide a consistent interface. The underlying implementation, be it native ITK or an optimization library, is not exposed to the user.

Optimizers that do not use derivatives:<br>

[[File:ProposedOptimizerHeirarchyPart1.svg| 800px]]

Optimizers that use derivatives:<br>

[[File:ProposedOptimizerHeirarchyPart2.svg| 800px]]

Latest revision as of 20:05, 5 November 2010

Refactor Optimization Framework Based on Available Function Derivative Information

The proposed changes attempt to be backwards compatible with the current optimization framework.

The UML-like diagrams on this page were created with DIA. The source files for these are available here.


Modification to cost function hierarchy

Currently all cost functions are subclasses of itk::SingleValuedCostFunction or itk::MultipleValuedCostFunction. These two classes declare GetDerivative() as pure virtual, forcing the user to implement it. This assumes that the optimized function is differentiable. When this is not the case, developers either provide a bogus derivative, which is problematic if they unintentionally use an optimizer that utilizes this information, or, as often happens, throw an exception. The current and proposed hierarchies are given below (these are not valid UML diagrams).

The methods GetDerivative() and GetValueAndDerivative() move from SingleValuedCostFunction to the newly created SingleValuedCostFunctionWithDerivative. In addition, a decorator class, SingleValuedCostFunctionWithFiniteDifferenceDerivative, wraps a user-supplied SingleValuedCostFunction.
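The split described above can be illustrated with a small, self-contained sketch. It is not ITK code: the classes below are simplified stand-ins that borrow the proposed names, `std::vector<double>` replaces the ITK parameter and derivative types, and the central-difference scheme and the default step size `1e-6` are assumptions made only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for the proposed classes; not the ITK declarations.
using ParametersType = std::vector<double>;
using DerivativeType = std::vector<double>;

// Base class: only a value is required, so a non-differentiable
// function no longer has to fake GetDerivative().
class SingleValuedCostFunction
{
public:
  virtual ~SingleValuedCostFunction() = default;
  virtual double GetValue(const ParametersType & p) const = 0;
  virtual std::size_t GetNumberOfParameters() const = 0;
};

// The derivative-related methods live one level further down.
class SingleValuedCostFunctionWithDerivative : public SingleValuedCostFunction
{
public:
  virtual void GetDerivative(const ParametersType & p, DerivativeType & d) const = 0;
  virtual void GetValueAndDerivative(const ParametersType & p, double & value, DerivativeType & d) const
  {
    value = this->GetValue(p);
    this->GetDerivative(p, d);
  }
};

// Decorator: wraps a value-only cost function and approximates its
// derivative with central differences (scheme and step size are assumptions).
class SingleValuedCostFunctionWithFiniteDifferenceDerivative
  : public SingleValuedCostFunctionWithDerivative
{
public:
  explicit SingleValuedCostFunctionWithFiniteDifferenceDerivative(
    const SingleValuedCostFunction & wrapped, double step = 1e-6)
    : m_Wrapped(wrapped), m_Step(step)
  {
    assert(step > 0.0);
  }

  double GetValue(const ParametersType & p) const override { return m_Wrapped.GetValue(p); }
  std::size_t GetNumberOfParameters() const override { return m_Wrapped.GetNumberOfParameters(); }

  void GetDerivative(const ParametersType & p, DerivativeType & d) const override
  {
    assert(p.size() == this->GetNumberOfParameters());
    d.assign(p.size(), 0.0);
    ParametersType q = p;
    for (std::size_t i = 0; i < p.size(); ++i)
    {
      q[i] = p[i] + m_Step;
      const double fPlus = m_Wrapped.GetValue(q);
      q[i] = p[i] - m_Step;
      const double fMinus = m_Wrapped.GetValue(q);
      q[i] = p[i]; // restore before moving to the next coordinate
      d[i] = (fPlus - fMinus) / (2.0 * m_Step);
    }
  }

private:
  const SingleValuedCostFunction & m_Wrapped; // must outlive the decorator
  double m_Step;
};

// Example value-only function: f(x, y) = x^2 + 3*y.
class ExampleFunction : public SingleValuedCostFunction
{
public:
  double GetValue(const ParametersType & p) const override { return p[0] * p[0] + 3.0 * p[1]; }
  std::size_t GetNumberOfParameters() const override { return 2; }
};
```

With this layout a user who only knows how to evaluate the function implements `ExampleFunction` and, if a derivative-based optimizer is wanted, opts in explicitly by wrapping it in the decorator.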

Modification to optimizer hierarchy

The optimizer hierarchy mirrors the hierarchy of cost functions. Add a direct subclass itk::SingleValuedWithDerivativeNonLinearOptimizer to itk::SingleValuedNonLinearOptimizer. Optimizers that use derivatives are descendants of this new class; all others descend directly from SingleValuedNonLinearOptimizer.
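One way to read this is that an optimizer needing derivatives should only accept a cost function that provides them, so a mismatch is caught at compile time. The sketch below is an assumption about how that could look, not the proposed ITK implementation: `CostFunction`, `CostFunctionWithDerivative`, `GradientDescentSketch`, and `Quadratic` are hypothetical names, and the fixed step size and iteration count are arbitrary.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, simplified stand-ins; names echo the proposal but this is not ITK code.
using ParametersType = std::vector<double>;

class CostFunction // plays the role of SingleValuedCostFunction
{
public:
  virtual ~CostFunction() = default;
  virtual double GetValue(const ParametersType & p) const = 0;
};

class CostFunctionWithDerivative : public CostFunction
{
public:
  virtual void GetDerivative(const ParametersType & p, ParametersType & d) const = 0;
};

// Derivative-free optimizers derive from this class and accept any cost function.
class SingleValuedNonLinearOptimizer
{
public:
  virtual ~SingleValuedNonLinearOptimizer() = default;
  void SetCostFunction(const CostFunction * f) { m_CostFunction = f; }
  virtual ParametersType Optimize(const ParametersType & start) = 0;

protected:
  const CostFunction * m_CostFunction = nullptr;
};

// Derivative-based optimizers derive from this class instead.
class SingleValuedWithDerivativeNonLinearOptimizer : public SingleValuedNonLinearOptimizer
{
public:
  // Hides the base-class setter, so through this type only a
  // differentiable cost function can be supplied.
  void SetCostFunction(const CostFunctionWithDerivative * f)
  {
    m_DifferentiableCostFunction = f;
    SingleValuedNonLinearOptimizer::SetCostFunction(f);
  }

protected:
  const CostFunctionWithDerivative * m_DifferentiableCostFunction = nullptr;
};

// Minimal fixed-step gradient descent (step size and iteration count are arbitrary).
class GradientDescentSketch : public SingleValuedWithDerivativeNonLinearOptimizer
{
public:
  ParametersType Optimize(const ParametersType & start) override
  {
    assert(m_DifferentiableCostFunction != nullptr);
    ParametersType p = start;
    ParametersType g;
    for (int iteration = 0; iteration < 200; ++iteration)
    {
      m_DifferentiableCostFunction->GetDerivative(p, g);
      assert(g.size() == p.size());
      for (std::size_t i = 0; i < p.size(); ++i)
      {
        p[i] -= 0.1 * g[i];
      }
    }
    return p;
  }
};

// Example differentiable function: f(x) = (x - 3)^2.
class Quadratic : public CostFunctionWithDerivative
{
public:
  double GetValue(const ParametersType & p) const override { return (p[0] - 3.0) * (p[0] - 3.0); }
  void GetDerivative(const ParametersType & p, ParametersType & d) const override
  {
    d.assign(1, 2.0 * (p[0] - 3.0));
  }
};
```

Passing a value-only `CostFunction` to `GradientDescentSketch::SetCostFunction` would fail to compile in this sketch. Note that the base-class setter is still reachable through a base-class pointer, so a real design would need to decide how strictly to enforce the restriction.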

Current hierarchy:

Questions/Comments

itk::Optimizer

The itk::Optimizer class seems to be intended for nonlinear optimization; if so, its functionality should be pushed down to itkNonLinearOptimizer. This assumes that itk::Optimizer is meant to be the superclass of all optimizers, including linear programming optimizers such as Dantzig's simplex method. Is that the intention?

itkSingleValuedNonLinearOptimizer, itkMultipleValuedNonLinearOptimizer:

Why does SetCostFunction receive a raw pointer to the cost function and not a smart pointer?

itkGradientOptimizer:

Why is the AdvanceOneStep method public?

The assertion that the scales vector has the appropriate size is performed in every step [ln. 189-196]. This makes sense if AdvanceOneStep is public; otherwise it is more appropriate to perform the assertion once, in ResumeOptimization [ln. 108].
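A minimal sketch of the alternative suggested here, under the assumption that AdvanceOneStep can be made non-public. `GradientOptimizerSketch` is a hypothetical class that minimizes a hard-coded function; it only illustrates where the check would live and is not the ITK source.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical, stripped-down optimizer that minimizes f(p) = sum of p[i]^2.
// It is not the ITK implementation.
class GradientOptimizerSketch
{
public:
  void SetScales(const std::vector<double> & scales) { m_Scales = scales; }
  void SetPosition(const std::vector<double> & position) { m_Position = position; }
  const std::vector<double> & GetPosition() const { return m_Position; }

  // The size check runs once here, before any step is taken.
  void ResumeOptimization(unsigned int numberOfIterations)
  {
    if (m_Scales.size() != m_Position.size())
    {
      throw std::invalid_argument("size of scales does not match number of parameters");
    }
    for (unsigned int i = 0; i < numberOfIterations; ++i)
    {
      this->AdvanceOneStep();
    }
  }

private:
  // Private, so callers cannot bypass the check in ResumeOptimization().
  void AdvanceOneStep()
  {
    assert(m_Scales.size() == m_Position.size()); // debug-only restatement of the invariant
    for (std::size_t j = 0; j < m_Position.size(); ++j)
    {
      const double gradient = 2.0 * m_Position[j];
      m_Position[j] -= m_LearningRate * gradient / m_Scales[j];
    }
  }

  std::vector<double> m_Scales;
  std::vector<double> m_Position;
  double m_LearningRate = 0.1; // arbitrary value for the example
};
```

If AdvanceOneStep has to stay public for backwards compatibility, the per-step check remains the safer choice, which is why the question of its visibility comes first.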

itkOnePlusOne:

The ivar m_Initialized is never used and should be removed.

Why does this class have both an Initialize method and corresponding Get/Set methods that set the same variables? I believe the ITK interface is via Get/Set and that the Initialize method should be removed.

itkPowellOptimizer:

It is not clear why there are two methods that return the current cost function value, GetCurrentCost() and GetValue(), which differ only in constness.

Optimizers inheriting from vnl:

Why is the internal vnl optimizer exposed via the GetOptimizer() method?

The classes are also inconsistent: the amoeba, conjugate gradient, and LBFGS optimizers have a GetOptimizer() method, whereas LBFGSB does not.

itkConjugateGradientOptimizer:

The ivar m_OptimizerInitialized is not required. It is only referred to in SetCostFunction and in the constructor. It seems to be used to check whether the vnl optimizer was already allocated and, if so, to release and reallocate it. Instead:

a. cxx file ln. 68 should be "if ( m_VnlOptimizer!=NULL )"

b. remove cxx file ln. 76 "m_OptimizerInitialized = true;"

c. remove cxx file ln. 30 "m_OptimizerInitialized = false;"

d. remove h file ln. 80.
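The effect of these four changes can be sketched as follows. This is an illustration under assumptions rather than the actual file: `InternalOptimizer` is a hypothetical stand-in for the wrapped vnl optimizer, the cost function is reduced to an integer id, and `nullptr` is used where the original code would use `NULL`. Only the member name `m_VnlOptimizer` is taken from the comment above.

```cpp
#include <cassert>

// Hypothetical stand-in for the wrapped vnl optimizer; it only counts live instances.
class InternalOptimizer
{
public:
  explicit InternalOptimizer(int costFunctionId) : m_CostFunctionId(costFunctionId) { ++LiveCount(); }
  ~InternalOptimizer() { --LiveCount(); }
  InternalOptimizer(const InternalOptimizer &) = delete;
  InternalOptimizer & operator=(const InternalOptimizer &) = delete;

  int GetCostFunctionId() const { return m_CostFunctionId; }

  static int & LiveCount()
  {
    static int count = 0;
    return count;
  }

private:
  int m_CostFunctionId;
};

class ConjugateGradientOptimizerSketch
{
public:
  ConjugateGradientOptimizerSketch() = default;
  ~ConjugateGradientOptimizerSketch() { delete m_VnlOptimizer; }
  ConjugateGradientOptimizerSketch(const ConjugateGradientOptimizerSketch &) = delete;
  ConjugateGradientOptimizerSketch & operator=(const ConjugateGradientOptimizerSketch &) = delete;

  void SetCostFunction(int costFunctionId)
  {
    // The pointer itself records whether an optimizer was already
    // allocated, so no separate "initialized" flag is needed.
    if (m_VnlOptimizer != nullptr)
    {
      delete m_VnlOptimizer;
    }
    m_VnlOptimizer = new InternalOptimizer(costFunctionId);
  }

  int GetCostFunctionId() const
  {
    assert(m_VnlOptimizer != nullptr);
    return m_VnlOptimizer->GetCostFunctionId();
  }

private:
  InternalOptimizer * m_VnlOptimizer = nullptr; // initialized in-class instead of via a flag
};
```

Calling `SetCostFunction` twice leaves exactly one live internal optimizer, which is the behavior the flag was apparently meant to guarantee.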

itkSPSAOptimizer:

Why is AdvanceOneStep() a public method?

Proposed hierarchy:

The main difference is that the explicit references to vnl have been removed. ITK should provide a consistent interface. The underlying implementation, be it native ITK or an optimization library, is not exposed to the user.