VTK/Time Support: Difference between revisions

(Reverted vandalism.) |

|||

| (25 intermediate revisions by 6 users not shown) | |||

| Line 1: | Line 1: | ||

Time support is now implemented in the VTK pipeline. It is documented in the following paper, which can be downloaded here. | |||

:[[Media:TimeVis-IEEE2007.pdf|John Biddiscombe, Berk Geveci, Ken Martin, Kenneth Moreland, and David Thompson. "Time Dependent Processing in a Parallel Pipeline Architecture." IEEE Transactions on Visualization and Computer Graphics, Volume 13, Number 6, pg. 1376–1383, November/December, 2007. DOI=10.1109/TVCG.2007.70600.]] | |||

The rest of this document provides the original design discussion of the time support. It is a bit out of date and left here for historical purposes. | |||

== Overview == | |||

Many visualization datasets and analysis codes deal directly with temporal data. Historically this has been a weak point in VTK with it having effectively no support for temporal data. Researchers from Sandia National Labs have provided a number of typical use cases that have been used to drive the requirements and design. This has led to a new framework for time in VTK along with it supporting classes and new filters. | |||

Below is a list of use cases | == Requirements == | ||

Historically VTK and ParaView supported 'time', in that a couple readers support setting a timestep ivar when reading data files that have a notion of time (in particular, timesteps). And ParaView supports animations that show time sequences. However, there are many improvements, large and small, that need to be made. Below is a list of use cases sorted in terms of how significant they are for the user community right now. | |||

=== Must Have === | === Must Have === | ||

| Line 45: | Line 47: | ||

Playback of an animation means varying ''time'', instead of varying ''timesteps''. By sampling time at regular intervals, animations can play-back at the correct rate, even if the timesteps in a dataset don't represent regular intervals. When dealing with multiple datasets, the correct data can automatically be displayed, as long as the dataset timesteps share a common reference (if they don't, simple offset and scale transformations are trivial to implement and use). In the case of a single dataset, matching the animation sample points to the timesteps in the dataset can provide backwards-compatible behavior. | Playback of an animation means varying ''time'', instead of varying ''timesteps''. By sampling time at regular intervals, animations can play-back at the correct rate, even if the timesteps in a dataset don't represent regular intervals. When dealing with multiple datasets, the correct data can automatically be displayed, as long as the dataset timesteps share a common reference (if they don't, simple offset and scale transformations are trivial to implement and use). In the case of a single dataset, matching the animation sample points to the timesteps in the dataset can provide backwards-compatible behavior. | ||

== | == Prior Workarounds == | ||

* Plotting time-varying elements - the Exodus reader has functionality that allows single node or cell values to be requested, but this can only be used to plot time-varying data if the data resides in an Exodus file on the client (client-server won't work), and it limits plotting to the source data (the data cannot be filtered). Effectively this is an "out-of-band" request where the consumer of time-varying data must be hard-coded to seek-out and query the Exodus reader directly. | * Plotting time-varying elements - the Exodus reader has functionality that allows single node or cell values to be requested, but this can only be used to plot time-varying data if the data resides in an Exodus file on the client (client-server won't work), and it limits plotting to the source data (the data cannot be filtered). Effectively this is an "out-of-band" request where the consumer of time-varying data must be hard-coded to seek-out and query the Exodus reader directly. | ||

| Line 51: | Line 53: | ||

* Using multiple inputs - DSP-like filters that perform operations across multiple timesteps (e.g: averaging) could be coded with multiple inputs, with each input connected to a different source, each displaying the same file, but at a different timestep. Again, this approach does not scale well due to the memory requirements and management issues associated with multiple otherwise-identical pipelines. It also introduces complexity in the coding of filters. | * Using multiple inputs - DSP-like filters that perform operations across multiple timesteps (e.g: averaging) could be coded with multiple inputs, with each input connected to a different source, each displaying the same file, but at a different timestep. Again, this approach does not scale well due to the memory requirements and management issues associated with multiple otherwise-identical pipelines. It also introduces complexity in the coding of filters. | ||

== | == Design == | ||

Previously, VTK assumed that the contents of the pipeline represent a single timestep. VTK's time support consisted of four main keys. TIME_STEPS is a vector of doubles that lists all the time steps that are available (in increasing order) from a source/filter. This is how a reader reports downstream what timesteps are available. Currently only a couple readers actually set this key. UPDATE_TIME_INDEX was a key that was used to request the data for a specific time index. The index corresponds to a index in the TIME_STEPS vector. DATA_TIME_INDEX and DATA_TIME are keys that indicate for a DataObject what index (int) and time (double) that data corresponds to. | |||

This mechanism is limited in that it can only handle discrete time steps. But some analytical sources may be able to produce data for any time requested. To address this need a new information key will be created called TIME_RANGE that will define a T1 to T2 range of time that the source can provide. Likewise the old mechanism only allowed for requesting a single time step by index. This has the problem that some file formats are more efficient at returning multiple times steps at once, requesting by index creates confusion wrt branching filters | This mechanism is limited in that it can only handle discrete time steps. But some analytical sources may be able to produce data for any time requested. To address this need a new information key will be created called TIME_RANGE that will define a T1 to T2 range of time that the source can provide. Likewise the old mechanism only allowed for requesting a single time step by index. This has the problem that some file formats are more efficient at returning multiple times steps at once, requesting by index creates confusion wrt branching filters. | ||

The result is that a temporal request will return a multiblock dataset with each time step being an independent dataobject. This will also support returning multiple time steps of multiblock data (multi-multi-block | To support these more flexible time requests involves first removing the old request mechanism to reduce complexity. To this end UPDATE_TIME_INDEX was removed as was DATA_TIME_INDEX. A new information key UPDATE_TIME_STEPS (a vector of doubles) was created that allows for requesting temporal data by time, as well as supporting requests for multiple time-steps at once. DATA_TIME_STEPS was added as a key to represent what time steps are in a temporal dataset. The result is that a temporal request will return a multiblock dataset with each time step being an independent dataobject. This will also support returning multiple time steps of multiblock data (multi-multi-block) To this end time support requires using the composite data pipeline executive. This executive has been reworked and extended to include time support. | ||

To support continuous temporal sources a new key TIME_RANGE was added which indicates the range of times which a source can produce. Even discrete sources should set TIME_RANGE. A discrete source can be indentified by the TIME_STEPS key as continuous sources do not provide this key. | |||

Interpolation of time-varying data will be provided by filters written for that purpose; this would minimize the coding impact on sources (which can stay simple), and allows for different types of interpolation to suit user requirements / resources. Interpolation filters would be an example of a filter that expands the temporaral extents of a data request, just as a convolution image filter expands a request's geometric extents. Likewise a shift scale filter will be created that passes the data through but shifts and scales the time. This is useful for visualizing multiple datasets that while intended to be time aligned, are not so due to simple temporal origin and or scale issues. | Interpolation of time-varying data will be provided by filters written for that purpose; this would minimize the coding impact on sources (which can stay simple), and allows for different types of interpolation to suit user requirements / resources. Interpolation filters would be an example of a filter that expands the temporaral extents of a data request, just as a convolution image filter expands a request's geometric extents. Likewise a shift scale filter will be created that passes the data through but shifts and scales the time. This is useful for visualizing multiple datasets that while intended to be time aligned, are not so due to simple temporal origin and or scale issues. | ||

One side effect of the above is that requesting the data for one cell across many timesteps would still be very slow compared to what it could be for a more optimized path. To address this need | One side effect of the above is that requesting the data for one cell across many timesteps would still be very slow compared to what it could be for a more optimized path. To address this need in the future we plan on creating a fast-path for such requests. This fast path will be implemented for key readers and the array calculator initially. It is still unclear exactly how this will be implemented. But it will effectively be a separate pipeline possibly connecting to a different output port. Another option is to have a special iformation request that rturns the data as meta-information as opposed to first class data. | ||

With the above design in-place most operations on time agnostic filters will be handled by the executive which will loop the time steps through each filter. Time aware filters will be passed the multiblock dataset if desired and can then operate on mutliple time steps at once. A time aware consumer is responsible for making reasonable requests upstream. The executive will not crop a temporal request to fit in memory. If a temporal consumer asks for a million timesteps, each a gigabyte in size, then the executive will pass the request upstream, so as with geometric extents, try to request what is needed balanced with efficiency. | |||

== New Classes Added To VTK == | |||

* '''vtkTemporalDataSet''' - this subclass of vtkMultiGroupDataSet is the class to hold all temporal data. Each time step is stored as a seperate datsa object (aka group in composite dataset terminology). Many changes were made to the composite data pipeline to support composite datasets withing composite datasets, basically allows many levels of nesting. This class also introduces the notion of a TEMPORAL_EXTENT type. That is update requests previously were either piece based or structured extent based. With the addition of time there must be a notion of a temproal request type and this dataset defines that. (this is different from the actual update keys such as UPDATE_TIME_STEPS). | |||

* '''vtkTemporalDataSetAlgorithm''' - this class is a parent class for temporal algorithms. It defaults to taking and producint a temporal dataset. | |||

* '''vtkTemporalFractal''' - this class acts as a temporal source and is useful for tesing different aspects of the temporal pipeline. Key features are that it produces composite datasets for each time step, can be switched between acting as a continuous temporal source or discrete, can produce adaptive or non-adaptive subdivision for its hierarchies. | |||

* '''vtkTemporalShiftScale''' - this filter performs no actual changes on the data, but instead modifies the meta information about what time range and steps are available. This is useful for cases where the time in a source or file needs to be shifted and or scaled. | |||

* '''vtkTemporalInterpolator''' - this filter uses linear interpolation to interpolate between discrete time steps of a discrete source. This filter can be used to convert a discrete time series into a continuous one. It will pass data whenever possible and it only requests the time steps it requires to fullfill a request. It also verifies that the structures and types of the arrays are compatible before interpolating. It properly handles nested datasets. | |||

* '''vtkTemporalDataSetCache''' - this filter caches timesteps so that if a recently requested timestep is requested again it may be found in the cache, saving the effort of reloading or recomputing it. If a request is made for multiple timesteps only the timesteps that are not cached will be requested. It properly handles nested datasets. | |||

== Test Cases == | |||

Three test cases were checked into VTK. The first test case can be found in VTK/Parallel/Testing/Cxx/TestTemporalFractal.cxx. It uses the TemporalFractal class, followed by a TemporalShiftScale, TemporalInterpolator, a Threshold, then a MultiGroupGeometryFilter. Thsi pipeline is driven in a loop to create an animation. This test is run on the dashboards for VTK and has a valid image checked in for it. In this test the first three filters in the pipeline are temporal filters, the next is a regular DataSet Filter (threshold), while the fourth is a composite dataset filter but not temporal. | |||

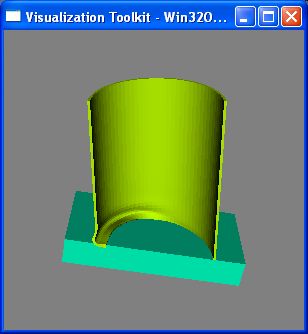

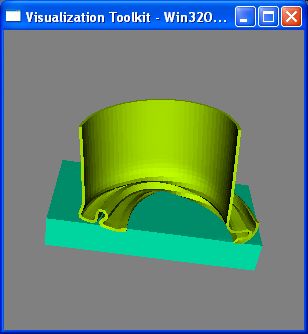

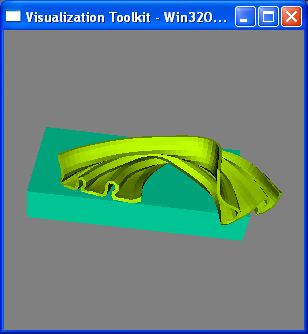

The second test case can be found in VTK/Parallel/Testing/Cxx/TestExodusTime.cxx. It reads in the crushed can datatset which has 43 time steps. A TemporalShiftScale is used to normalize the actual time range to 0.0 to 1.0. Then a TemporalInterpolator is put into the pipeline so that the dataset can be treated as if it were continuous. Finally a threshold filter and MultiGroupGeometryFilter are used prior to connecting to the mapper. A loop then drives the animation in even steps that do not match the original datasets timesteps. The linearly interpolated results are rendered. In this case the first filter in the pipeline was not a composite filter at all. In this case the CompositeDataExecutive loops the simple source over the requested timesteps to generate a TemporalDataSet. This required significant modifications to the CompositeDataPipeline as previously it had no mechanism to loop a source (only filters with composite inputs were looped) Below three frames (interpolated) are shown from this example. | |||

The third test case verifies that the caching functionality of vtkTemporalDataSetCache works properly. It can be found in VTK/Parallel/Testing/Cxx/TestTemporalCache.cxx. | |||

[[Image:Exo1.jpg]][[Image:Exo2.jpg]][[Image:Exo3.jpg]] | |||

{{VTK/Template/Footer}} | |||

Latest revision as of 23:38, 13 January 2011

Time support is now implemented in the VTK pipeline. It is documented in the following paper, which can be downloaded here.

The rest of this document provides the original design discussion of the time support. It is a bit out of date and left here for historical purposes.

Overview

Many visualization datasets and analysis codes deal directly with temporal data. Historically this has been a weak point in VTK, which had effectively no support for temporal data. Researchers from Sandia National Labs have provided a number of typical use cases that have been used to drive the requirements and design. This has led to a new framework for time in VTK, along with supporting classes and new filters.

Requirements

Historically, VTK and ParaView supported 'time' only in a limited sense: a couple of readers support setting a timestep ivar when reading data files that have a notion of time (in particular, timesteps), and ParaView supports animations that show time sequences. However, many improvements, large and small, still need to be made. Below is a list of use cases sorted by how significant they are for the user community right now.

Must Have

- Plot the values of one-to-many elements over a specified range of time (client side graphing).

- Animations of time-series data that handle simulations with nonuniform time steps. This includes optional interpolation of node (cell) variables to intermediate times to avoid choppy or nonuniform playback (i.e., playback speed that varies as the integration step size varies).

- Get a window of data (all elements between times T1 and T2).

Very Desirable

- Take time series data for one-to-many elements, run that through a filter (such as calculator), and then plot that (client side graphing).

- Ghosting (e.g. see the path of a bouncing ball).

- Plot the values of nodal (or cell-centered) variables taken on over a space curve and over a specified range of time. The space curve may be a line or a circle.

- Having an optional "snap to nodes" feature for the spacetime plots above (Need 3) would be very useful.

- Having an optional "geometry inference" feature for defining circular curves for the spacetime plots above (Need 3) would be very useful.

- Min/max and other statistical operations. The operation can be for a single element over time or for all elements over time. Want to know the time at which a variable meets or exceeds a given value (either at specified node(s), or for any node in the entire mesh). An example is thermal situations where we need to know whether or when a particular temperature-sensitive component fails.

Desirable

- Provide time series data to a filter that requires multiple time steps as input (DSP), e.g., for time step N, average the data for N-1, N, and N+1.

- Support alternatives to streamlines. A streamline plots the path through a field at an instant in time; streaklines and pathlines plot the position of a particle for every instant in time.

- Calculate the integral of a cut plane over time, i.e., the integral of a plane (or something similar) at each time step.

- Would like time interpolation (as required for animations in Need 1) to be nonlinear (e.g., parabolic) so that it matches the interpolation assumed by the integration technique used by the solver. Note that different solvers have different integration schemes. Predictor-corrector methods do not necessarily specify a unique interpolant, but other methods do.

- Per-timestep field data, that is, field data of a dataset that changes over time. We should also be able to represent field data that does not change over time.

- Calculate envelopes (convex hull) over time. Calculate unions and intersections over time, i.e. "what volume was ever occupied by an object", and "what volume was always occupied by an object".

- Retrieve non-contiguous ranges of time-varying data.

- Readers report temporal bounds. Time is part of the extents.

Timesteps

Timesteps are point-samples of continuous time that are defined for a specific data set. They need not be contiguous or uniformly spaced, and in fact often aren't, since many simulations adjust their sample rate dynamically based on the simulation content. When more-than-one data set is present in a visualization, the timesteps in the data sets may represent completely different ranges and rates of time. Thus, it is important to have a single time "reference" that is uniform across all data sets. This document assumes that "time" is a continuous, absolute quantity represented as a real number, and that a "timestep" is an individual sample at a particular point in time.

Because time can vary continuously, it is possible to display a time for which no dataset timestep matches. Behavior in this case can range from displaying nothing, to displaying the nearest timestep, to interpolating the timestep data.
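A minimal sketch of how a consumer might resolve a requested continuous time against a dataset's sorted timesteps, supporting the nearest-timestep and interpolation behaviors just described. `Bracket`, `bracketTime`, and `nearestStep` are hypothetical helpers, not VTK API, and times outside the dataset's range are assumed to clamp to the first or last timestep.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result of resolving a time: the indices of the timesteps on
// either side of t and how far t lies between them (0 = at lo, 1 = at hi).
// lo == hi when t matches a timestep exactly or lies outside the range.
struct Bracket {
  std::size_t lo;
  std::size_t hi;
  double weight;
};

// Resolve a continuous time t against sorted (ascending) dataset timesteps.
// Times outside the range are clamped to the first or last timestep.
Bracket bracketTime(const std::vector<double>& steps, double t) {
  assert(!steps.empty());
  if (t <= steps.front()) return {0, 0, 0.0};
  if (t >= steps.back()) return {steps.size() - 1, steps.size() - 1, 0.0};
  // First timestep strictly greater than t; t lies in [steps[hi - 1], steps[hi]).
  auto it = std::upper_bound(steps.begin(), steps.end(), t);
  const std::size_t hi = static_cast<std::size_t>(it - steps.begin());
  const std::size_t lo = hi - 1;
  if (steps[lo] == t) return {lo, lo, 0.0};
  return {lo, hi, (t - steps[lo]) / (steps[hi] - steps[lo])};
}

// "Nearest timestep" policy: pick the closer neighbor (ties go to the earlier step).
std::size_t nearestStep(const std::vector<double>& steps, double t) {
  const Bracket b = bracketTime(steps, t);
  return b.weight <= 0.5 ? b.lo : b.hi;
}
```

An interpolating consumer would instead blend the data at `lo` and `hi` using `weight`, and a "display nothing" policy would only accept results where `lo == hi` and the timestep equals `t`.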

Playback of an animation means varying time, instead of varying timesteps. By sampling time at regular intervals, animations can play back at the correct rate, even if the timesteps in a dataset don't represent regular intervals. When dealing with multiple datasets, the correct data can automatically be displayed, as long as the dataset timesteps share a common reference (if they don't, simple offset and scale transformations are trivial to implement and use). In the case of a single dataset, matching the animation sample points to the timesteps in the dataset can provide backwards-compatible behavior.
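The playback model above, with frames sampled on a regular clock and datasets aligned to that clock by an offset and a scale, might be sketched as follows. `animationTimes` and `TimeMapping` (with its `offset` and `scale` fields) are illustrative names assumed for this example, not part of VTK or ParaView.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sample the animation clock at regular intervals over [start, end], inclusive.
// Frame times are independent of how any dataset's own timesteps are spaced.
std::vector<double> animationTimes(double start, double end, std::size_t frames) {
  assert(frames >= 2);
  std::vector<double> times;
  times.reserve(frames);
  const double dt = (end - start) / static_cast<double>(frames - 1);
  for (std::size_t i = 0; i < frames; ++i) {
    times.push_back(start + dt * static_cast<double>(i));
  }
  return times;
}

// Hypothetical offset-and-scale mapping between a dataset's own time and the
// shared reference clock: reference = local * scale + offset.
struct TimeMapping {
  double offset;
  double scale;  // assumed non-zero so the mapping can be inverted

  double toReference(double local) const { return local * scale + offset; }
  double toLocal(double reference) const { return (reference - offset) / scale; }
};
```

For instance, a dataset whose timesteps span 2.0 to 6.0 maps onto a shared [0, 1] clock with offset -0.5 and scale 0.25; `toLocal` then converts each frame time back into the dataset's own time before it is resolved to a timestep.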

Prior Workarounds

- Plotting time-varying elements - the Exodus reader has functionality that allows single node or cell values to be requested, but this can only be used to plot time-varying data if the data resides in an Exodus file on the client (client-server won't work), and it limits plotting to the source data (the data cannot be filtered). Effectively this is an "out-of-band" request where the consumer of time-varying data must be hard-coded to seek out and query the Exodus reader directly.

- Plotting multiple timesteps - in the case of displaying the path of a bouncing ball, multiple copies of a pipeline can be created, with each input displaying the same file, but at a different timestep. This solution cannot scale well, since there will be many (perhaps hundreds of) sources referencing the same dataset, with corresponding resource consumption. This approach also leads to additional complexity in managing multiple pipelines: does the user see and work with all of the pipelines, or is there a management layer that hides them, leaving the user to manage a single "virtual" pipeline instead?

- Using multiple inputs - DSP-like filters that perform operations across multiple timesteps (e.g., averaging) could be coded with multiple inputs, with each input connected to a different source, each displaying the same file but at a different timestep. Again, this approach does not scale well due to the memory requirements and management issues associated with multiple otherwise-identical pipelines. It also complicates the coding of filters.

Design

Previously, VTK assumed that the contents of the pipeline represent a single timestep. VTK's time support consisted of four main keys. TIME_STEPS is a vector of doubles that lists all the time steps that are available (in increasing order) from a source/filter. This is how a reader reports downstream what timesteps are available. Currently only a couple of readers actually set this key. UPDATE_TIME_INDEX was a key that was used to request the data for a specific time index; the index corresponds to an index in the TIME_STEPS vector. DATA_TIME_INDEX and DATA_TIME are keys that indicate for a DataObject what index (int) and time (double) that data corresponds to.

This mechanism is limited in that it can only handle discrete time steps, but some analytical sources may be able to produce data for any time requested. To address this need, a new information key called TIME_RANGE will be created that defines a T1 to T2 range of time that the source can provide. Likewise, the old mechanism only allowed for requesting a single time step by index. This is a problem because some file formats are more efficient at returning multiple time steps at once, and requesting by index creates confusion with respect to branching filters.

Supporting these more flexible time requests first involved removing the old request mechanism to reduce complexity. To this end, UPDATE_TIME_INDEX was removed, as was DATA_TIME_INDEX. A new information key, UPDATE_TIME_STEPS (a vector of doubles), was created that allows temporal data to be requested by time and also supports requests for multiple time steps at once. DATA_TIME_STEPS was added as a key to represent what time steps are in a temporal dataset. The result is that a temporal request will return a multiblock dataset with each time step being an independent data object. This will also support returning multiple time steps of multiblock data (multi-multi-block). For this reason, time support requires using the composite data pipeline executive, which has been reworked and extended to include time support.
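The move from index-based requests to time-based, multi-time requests can be illustrated with a toy model. The structs below only mirror the UPDATE_TIME_STEPS and DATA_TIME_STEPS keys by name; they are hypothetical stand-ins rather than the VTK information-key API, and the analytic source's `t * t` value is a placeholder for real data.

```cpp
#include <cassert>
#include <vector>

// Hypothetical request: times are given directly, not as indices into TIME_STEPS.
struct TimeRequest {
  std::vector<double> updateTimeSteps;  // mirrors UPDATE_TIME_STEPS
};

// Hypothetical stand-in for one independent data object in the result.
struct TimeBlock {
  double time;   // the time this data object represents
  double value;  // placeholder for the data object itself
};

// Hypothetical temporal result: one block per time, plus the times it holds.
struct TemporalResult {
  std::vector<double> dataTimeSteps;  // mirrors DATA_TIME_STEPS
  std::vector<TimeBlock> blocks;
};

// Hypothetical analytic source: it can evaluate any time, so it answers a
// multi-time request in a single pass, producing one block per requested time.
TemporalResult executeRequest(const TimeRequest& request) {
  TemporalResult result;
  for (double t : request.updateTimeSteps) {
    result.dataTimeSteps.push_back(t);
    result.blocks.push_back({t, t * t});  // placeholder "simulation" value
  }
  return result;
}
```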

To support continuous temporal sources, a new key TIME_RANGE was added, which indicates the range of times that a source can produce. Even discrete sources should set TIME_RANGE. A discrete source can be identified by the TIME_STEPS key, since continuous sources do not provide this key.
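A sketch of the discrete/continuous distinction, assuming a hypothetical `TemporalInfo` summary in which TIME_RANGE is always present and TIME_STEPS is optional. `canProvide` is an illustrative helper that reports whether a source can produce a time exactly, without an interpolation filter downstream.

```cpp
#include <cassert>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical summary of a source's temporal metadata: TIME_RANGE is always
// present, TIME_STEPS only for discrete sources.
struct TemporalInfo {
  std::pair<double, double> timeRange;           // mirrors TIME_RANGE
  std::optional<std::vector<double>> timeSteps;  // mirrors TIME_STEPS
};

// A source is discrete exactly when it advertises TIME_STEPS.
bool isDiscrete(const TemporalInfo& info) { return info.timeSteps.has_value(); }

// Can the source produce time t directly, with no interpolation downstream?
bool canProvide(const TemporalInfo& info, double t) {
  if (t < info.timeRange.first || t > info.timeRange.second) return false;
  if (!isDiscrete(info)) return true;  // continuous: any time inside TIME_RANGE
  for (double s : *info.timeSteps) {
    if (s == t) return true;  // discrete: only the advertised timesteps
  }
  return false;
}
```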

Interpolation of time-varying data will be provided by filters written for that purpose; this minimizes the coding impact on sources (which can stay simple) and allows for different types of interpolation to suit user requirements and resources. Interpolation filters are an example of a filter that expands the temporal extents of a data request (to produce a time that falls between two input timesteps, the filter must request both neighboring timesteps upstream), just as a convolution image filter expands a request's geometric extents. Likewise, a shift scale filter will be created that passes the data through but shifts and scales the time. This is useful for visualizing multiple datasets that are intended to be time-aligned but are not, due to simple temporal origin and/or scale differences.
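The way an interpolation filter might expand a temporal request, much as a convolution filter pads a geometric extent, is sketched below under the assumption that the input timesteps are sorted and that out-of-range times clamp to the nearest end. `upstreamTimes` is a hypothetical helper: exact matches pass straight through, and any other time pulls in both neighboring timesteps.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical: translate a downstream request into the set of upstream
// timesteps an interpolating filter needs. inputSteps must be sorted ascending.
std::vector<double> upstreamTimes(const std::vector<double>& inputSteps,
                                  const std::vector<double>& requested) {
  assert(!inputSteps.empty());
  std::vector<double> needed;
  for (double t : requested) {
    auto it = std::lower_bound(inputSteps.begin(), inputSteps.end(), t);
    if (it != inputSteps.end() && *it == t) {
      needed.push_back(t);                   // exact hit: no interpolation needed
    } else if (it == inputSteps.begin()) {
      needed.push_back(inputSteps.front());  // before the range: clamp
    } else if (it == inputSteps.end()) {
      needed.push_back(inputSteps.back());   // after the range: clamp
    } else {
      needed.push_back(*(it - 1));           // timestep just before t
      needed.push_back(*it);                 // timestep just after t
    }
  }
  // Several requested times may share neighbors; request each timestep once.
  std::sort(needed.begin(), needed.end());
  needed.erase(std::unique(needed.begin(), needed.end()), needed.end());
  return needed;
}
```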

One side effect of the above is that requesting the data for one cell across many timesteps would still be very slow compared to what a more optimized path could achieve. To address this need, we plan to create a fast path for such requests in the future. This fast path will initially be implemented for key readers and the array calculator. It is still unclear exactly how this will be implemented, but it will effectively be a separate pipeline, possibly connected to a different output port. Another option is a special information request that returns the data as meta-information rather than as first-class data.

With the above design in place, most operations on time-agnostic filters will be handled by the executive, which will loop the time steps through each filter. Time-aware filters will be passed the multiblock dataset if desired and can then operate on multiple time steps at once. A time-aware consumer is responsible for making reasonable requests upstream: the executive will not crop a temporal request to fit in memory. If a temporal consumer asks for a million timesteps, each a gigabyte in size, the executive will pass that request upstream unchanged. So, as with geometric extents, consumers should request what they need, balanced against efficiency.
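A minimal sketch of the executive's looping role for time-agnostic filters. `DataObject`, `TemporalData`, and `loopOverTime` are hypothetical stand-ins (a time step is reduced to a flat array of values); the point is only that a filter written for a single timestep is invoked once per requested time and the results are reassembled in order.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical single-timestep data object.
struct DataObject {
  std::vector<double> values;
};

// Hypothetical temporal collection: one independent data object per time.
struct TemporalData {
  std::vector<double> times;
  std::vector<DataObject> steps;
};

// A filter that knows nothing about time: one data object in, one out.
using TimeAgnosticFilter = std::function<DataObject(const DataObject&)>;

// Sketch of the executive's job: run the filter once per timestep and keep
// the results paired with the same times, in the same order.
TemporalData loopOverTime(const TemporalData& input, const TimeAgnosticFilter& filter) {
  assert(input.times.size() == input.steps.size());
  TemporalData output;
  output.times = input.times;
  output.steps.reserve(input.steps.size());
  for (const DataObject& step : input.steps) {
    output.steps.push_back(filter(step));
  }
  return output;
}
```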

New Classes Added To VTK

- vtkTemporalDataSet - this subclass of vtkMultiGroupDataSet is the class that holds all temporal data. Each time step is stored as a separate data object (a group, in composite dataset terminology). Many changes were made to the composite data pipeline to support composite datasets within composite datasets, allowing many levels of nesting. This class also introduces the notion of a TEMPORAL_EXTENT type. Previously, update requests were either piece-based or structured-extent-based; with the addition of time there must be a notion of a temporal request type, and this dataset defines it. (This is different from the actual update keys such as UPDATE_TIME_STEPS.)

- vtkTemporalDataSetAlgorithm - this class is a parent class for temporal algorithms. By default it takes and produces a temporal dataset.

- vtkTemporalFractal - this class acts as a temporal source and is useful for testing different aspects of the temporal pipeline. Key features are that it produces composite datasets for each time step, can be switched between acting as a continuous or a discrete temporal source, and can produce adaptive or non-adaptive subdivision for its hierarchies.

- vtkTemporalShiftScale - this filter makes no changes to the data itself, but instead modifies the meta-information about which time range and steps are available. This is useful for cases where the time in a source or file needs to be shifted and/or scaled.

- vtkTemporalInterpolator - this filter uses linear interpolation to interpolate between the discrete time steps of a discrete source, so it can be used to convert a discrete time series into a continuous one. It passes data through whenever possible and only requests the time steps it requires to fulfill a request. It also verifies that the structures and types of the arrays are compatible before interpolating. It properly handles nested datasets.

- vtkTemporalDataSetCache - this filter caches timesteps so that if a recently requested timestep is requested again it may be found in the cache, saving the effort of reloading or recomputing it. If a request is made for multiple timesteps only the timesteps that are not cached will be requested. It properly handles nested datasets.
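The linear blending described for vtkTemporalInterpolator above, including its compatibility check and pass-through of exact time matches, could look roughly like the following. `Array` and `interpolateArray` are hypothetical simplifications (a name, a component count, and flat values), not VTK's data-array classes, and matching on name and layout is an assumed notion of "compatible".

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Hypothetical minimal data array: name, component count, flat values.
struct Array {
  std::string name;
  int components;
  std::vector<double> values;
};

// Assumed compatibility rule: same name, same component count, same length.
bool compatible(const Array& a, const Array& b) {
  return a.name == b.name && a.components == b.components &&
         a.values.size() == b.values.size();
}

// Linearly interpolate between array a (at time t0) and b (at time t1) at time t.
// Exact time matches pass the input through unchanged; incompatible inputs
// (or a degenerate interval) yield std::nullopt.
std::optional<Array> interpolateArray(const Array& a, double t0,
                                      const Array& b, double t1, double t) {
  if (t == t0) return a;
  if (t == t1) return b;
  if (!compatible(a, b) || t0 == t1) return std::nullopt;
  const double w = (t - t0) / (t1 - t0);  // 0 at t0, 1 at t1
  Array out{a.name, a.components, {}};
  out.values.reserve(a.values.size());
  for (std::size_t i = 0; i < a.values.size(); ++i) {
    out.values.push_back((1.0 - w) * a.values[i] + w * b.values[i]);
  }
  return out;
}
```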

Test Cases

Three test cases were checked into VTK. The first test case can be found in VTK/Parallel/Testing/Cxx/TestTemporalFractal.cxx. It uses the TemporalFractal class, followed by a TemporalShiftScale, a TemporalInterpolator, a Threshold, and then a MultiGroupGeometryFilter. This pipeline is driven in a loop to create an animation. This test is run on the VTK dashboards and has a valid image checked in for it. In this test the first three algorithms in the pipeline are temporal, the fourth is a regular dataset filter (Threshold), and the fifth (MultiGroupGeometryFilter) is a composite dataset filter but not a temporal one.

The second test case can be found in VTK/Parallel/Testing/Cxx/TestExodusTime.cxx. It reads in the crushed can dataset, which has 43 time steps. A TemporalShiftScale is used to normalize the actual time range to 0.0 to 1.0. Then a TemporalInterpolator is put into the pipeline so that the dataset can be treated as if it were continuous. Finally, a threshold filter and a MultiGroupGeometryFilter are used before connecting to the mapper. A loop then drives the animation in even steps that do not match the original dataset's timesteps, and the linearly interpolated results are rendered. In this case the source at the head of the pipeline is not a composite algorithm at all, so the composite data executive loops the simple source over the requested timesteps to generate a TemporalDataSet. This required significant modifications to the CompositeDataPipeline, since previously it had no mechanism to loop a source (only filters with composite inputs were looped). Below, three (interpolated) frames from this example are shown.

[Figure: three interpolated frames from TestExodusTime (Exo1.jpg, Exo2.jpg, Exo3.jpg)]

The third test case verifies that the caching functionality of vtkTemporalDataSetCache works properly. It can be found in VTK/Parallel/Testing/Cxx/TestTemporalCache.cxx.
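The behavior that the cache test exercises, serving recently requested timesteps from memory and forwarding only the uncached times upstream, might be sketched as follows. `TimestepCache` is hypothetical; its fixed capacity, oldest-first eviction, and exact matching of time values are assumptions for illustration, not a description of vtkTemporalDataSetCache's internals.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <map>
#include <vector>

// Hypothetical timestep cache: fixed capacity, oldest-first eviction,
// exact matching on time values.
template <typename Data>
class TimestepCache {
 public:
  explicit TimestepCache(std::size_t capacity) : capacity_(capacity) {}

  // Split a request: return only the times that must still be fetched upstream.
  std::vector<double> missing(const std::vector<double>& requested) const {
    std::vector<double> out;
    for (double t : requested) {
      if (entries_.count(t) == 0) out.push_back(t);
    }
    return out;
  }

  // Store (or refresh) the data for time t, evicting the oldest entry if full.
  void insert(double t, const Data& data) {
    if (capacity_ == 0) return;
    if (entries_.count(t) == 0) {
      if (entries_.size() == capacity_) {
        entries_.erase(order_.front());
        order_.pop_front();
      }
      order_.push_back(t);
    }
    entries_.insert_or_assign(t, data);
  }

  // Return the cached data for time t, or nullptr if it is not cached.
  const Data* find(double t) const {
    auto it = entries_.find(t);
    return it == entries_.end() ? nullptr : &it->second;
  }

 private:
  std::size_t capacity_;
  std::map<double, Data> entries_;
  std::deque<double> order_;  // insertion order, oldest first
};
```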