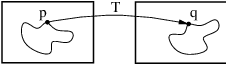

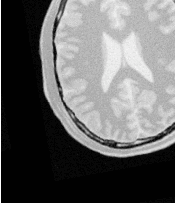

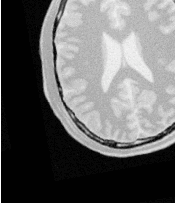

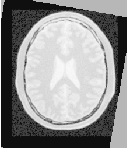

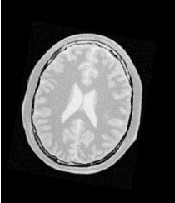

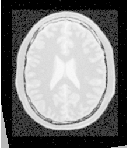

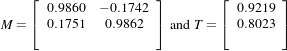

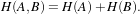

Figure 3.1: Image registration is the task of finding a spatial transform mapping one image into another.

Figure 3.1: Image registration is the task of finding a

spatial transform mapping one image into another.

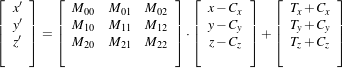

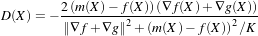

This chapter introduces ITK’s capabilities for performing image registration. Image registration is the process of determining the spatial transform that maps points from one image to homologous points on a object in the second image. This concept is schematically represented in Figure 3.1. In ITK, registration is performed within a framework of pluggable components that can easily be interchanged. This flexibility means that a combinatorial variety of registration methods can be created, allowing users to pick and choose the right tools for their specific application.

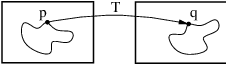

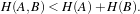

Let’s begin with a simplified typical registration framework where its components and their interconnections are shown in Figure 3.2. The basic input data to the registration process are two images: one is defined as the fixed image f(X) and the other as the moving image m(X), where X represents a position in N-dimensional space. Registration is treated as an optimization problem with the goal of finding the spatial mapping that will bring the moving image into alignment with the fixed image.

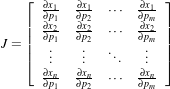

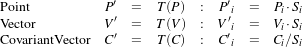

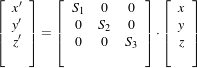

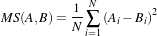

The transform component T (X) represents the spatial mapping of points from the fixed image space to points in the moving image space. The interpolator is used to evaluate moving image intensities at non-grid positions. The metric component S(f,m∘T ) provides a measure of how well the fixed image is matched by the transformed moving image. This measure forms a quantitative criterion to be optimized by the optimizer over the search space defined by the parameters of the transform.

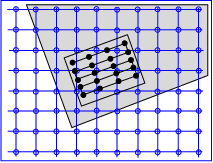

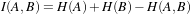

ITKv4 registration framework provides more flexibility to the above traditional registration concept. In this new framework, the registration computations can happen on a physical grid completely different than the fixed image domain having different sampling density. This “sampling domain” is considered as a new component in the registration framework known as virtual image that can be an arbitrary set of physical points, not necessarily a uniform grid of points.

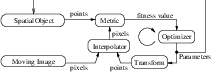

Various ITKv4 registration components are illustrated in Figure 3.3. Boxes with dashed borders show data objects, while those with solid borders show process objects.

The matching Metric class is a key component that controls most parts of the registration process since it handles fixed, moving and virtual images as well as fixed and moving transforms and interpolators.

Fixed and moving transforms and interpolators are used by the metric to evaluate the intensity values of the fixed and moving images at each physical point of the virtual space. Those intensity values are then used by the metric cost function to evaluate the fitness value and derivatives, which are passed to the optimizer that asks the moving transform to update its parameters based on the outputs of the cost function. Since the moving transform is shared between metric and optimizer, the above process will be repeated till the convergence criteria are met.

Later in section 3.3 you will get a better understanding of the behind-the-scenes processes of ITKv4 registration framework. First, we begin with some simple registration examples.

The source code for this section can be found in the file

ImageRegistration1.cxx.

This example illustrates the use of the image registration framework in Insight. It should be read as a “Hello World” for ITK registration. Instead of means to an end, this example should be read as a basic introduction to the elements typically involved when solving a problem of image registration.

A registration method requires the following set of components: two input images, a transform, a metric and an optimizer. Some of these components are parameterized by the image type for which the registration is intended. The following header files provide declarations of common types used for these components.

The type of each registration component should be instantiated first. We start by selecting the image dimension and the types to be used for representing image pixels.

The types of the input images are instantiated by the following lines.

The transform that will map the fixed image space into the moving image space is defined below.

An optimizer is required to explore the parameter space of the transform in search of optimal values of the metric.

The metric will compare how well the two images match each other. Metric types are usually templated over the image types as seen in the following type declaration.

The registration method type is instantiated using the types of the fixed and moving images as well as the output transform type. This class is responsible for interconnecting all the components that we have described so far.

Each one of the registration components is created using its New() method and is assigned to its respective itk::SmartPointer.

Each component is now connected to the instance of the registration method.

In this example the transform object does not need to be created and passed to the registration method like above since the registration filter will instantiate an internal transform object using the transform type that is passed to it as a template parameter.

Metric needs an interpolator to evaluate the intensities of the fixed and moving images at non-grid positions. The types of fixed and moving interpolators are declared here.

Then, fixed and moving interpolators are created and passed to the metric. Since linear interpolators are used as default, we could skip the following step in this example.

In this example, the fixed and moving images are read from files. This requires the itk::ImageRegistrationMethodv4 to acquire its inputs from the output of the readers.

Now the registration process should be initialized. ITKv4 registration framework provides initial transforms for both fixed and moving images. These transforms can be used to setup an initial known correction of the misalignment between the virtual domain and fixed/moving image spaces. In this particular case, a translation transform is being used for initialization of the moving image space. The array of parameters for the initial moving transform is simply composed of the translation values along each dimension. Setting the values of the parameters to zero initializes the transform to an Identity transform. Note that the array constructor requires the number of elements to be passed as an argument.

TransformType::ParametersType initialParameters(

movingInitialTransform->GetNumberOfParameters() );

initialParameters[0] = 0.0; // Initial offset in mm along X

initialParameters[1] = 0.0; // Initial offset in mm along Y

movingInitialTransform->SetParameters( initialParameters );

registration->SetMovingInitialTransform( movingInitialTransform );

In the registration filter this moving initial transform will be added to a composite transform that already includes an instantiation of the output optimizable transform; then, the resultant composite transform will be used by the optimizer to evaluate the metric values at each iteration.

Despite this, the fixed initial transform does not contribute to the optimization process. It is only used to access the fixed image from the virtual image space where the metric evaluation happens.

Virtual images are a new concept added to the ITKv4 registration framework, which potentially lets us to do the registration process in a physical domain totally different from the fixed and moving image domains. In fact, the region over which metric evaluation is performed is called virtual image domain. This domain defines the resolution at which the evaluation is performed, as well as the physical coordinate system.

The virtual reference domain is taken from the “virtual image” buffered region, and the input images should be accessed from this reference space using the fixed and moving initial transforms.

The legacy intuitive registration framework can be considered as a special case where the virtual domain is the same as the fixed image domain. As this case practically happens in most of the real life applications, the virtual image is set to be the same as the fixed image by default. However, the user can define the virtual domain differently than the fixed image domain by calling either SetVirtualDomain or SetVirtualDomainFromImage.

In this example, like the most examples of this chapter, the virtual image is considered the same as the fixed image. Since the registration process happens in the fixed image physical domain, the fixed initial transform maintains its default value of identity and does not need to be set.

However, a “Hello World!” example should show all the basics, so all the registration components are explicity set here.

In the next section of this chapter, you will get a better understanding from behind the scenes of the registration process when the initial fixed transform is not identity.

Note that the above process shows only one way of initializing the registration configuration. Another option is to initialize the output optimizable transform directly. In this approach, a transform object is created, initialized, and then passed to the registration method via SetInitialTransform(). This approach is shown in section 3.6.1.

At this point the registration method is ready for execution. The optimizer is the component that drives the execution of the registration. However, the ImageRegistrationMethodv4 class orchestrates the ensemble to make sure that everything is in place before control is passed to the optimizer.

It is usually desirable to fine tune the parameters of the optimizer. Each optimizer has particular parameters that must be interpreted in the context of the optimization strategy it implements. The optimizer used in this example is a variant of gradient descent that attempts to prevent it from taking steps that are too large. At each iteration, this optimizer will take a step along the direction of the itk::ImageToImageMetricv4 derivative. Each time the direction of the derivative abruptly changes, the optimizer assumes that a local extrema has been passed and reacts by reducing the step length by a relaxation factor. The reducing factor should have a value between 0 and 1. This factor is set to 0.5 by default, and it can be changed to a different value via SetRelaxationFactor(). Also, the default value for the initial step length is 1, and this value can be changed manually with the method SetLearningRate().

In addition to manual settings, the initial step size can also be estimated automatically, either at each iteration or only at the first iteration, by assigning a ScalesEstimator (as will be seen in later examples).

After several reductions of the step length, the optimizer may be moving in a very restricted area of the transform parameter space. By the method SetMinimumStepLength(), the user can define how small the step length should be to consider convergence to have been reached. This is equivalent to defining the precision with which the final transform should be known. User can also set some other stop criteria manually like maximum number of iterations.

In other gradient descent-based optimizers of the ITKv4 framework, such as itk::GradientDescentLineSearchOptimizerv4 and itk::ConjugateGradientLineSearchOptimizerv4, the convergence criteria are set via SetMinimumConvergenceValue() which is computed based on the results of the last few iterations. The number of iterations involved in computations are defined by the convergence window size via SetConvergenceWindowSize() which is shown in later examples of this chapter.

Also note that unlike the previous versions, ITKv4 optimizers do not have a “maximize/minimize” option to modify the effect of the metric derivatives. Each assigned metric is assumed to return a parameter derivative result that ”improves” the optimization.

In case the optimizer never succeeds reaching the desired precision tolerance, it is prudent to establish a limit on the number of iterations to be performed. This maximum number is defined with the method SetNumberOfIterations().

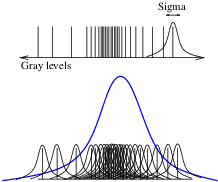

ITKv4 facilitates a multi-level registration framework whereby each stage is different in the resolution of its virtual space and the smoothness of the fixed and moving images. These criteria need to be defined before registration starts. Otherwise, the default values will be used. In this example, we run a simple registration in one level with no space shrinking or smoothing on the input data.

RegistrationType::ShrinkFactorsArrayType shrinkFactorsPerLevel;

shrinkFactorsPerLevel.SetSize( 1 );

shrinkFactorsPerLevel[0] = 1;

RegistrationType::SmoothingSigmasArrayType smoothingSigmasPerLevel;

smoothingSigmasPerLevel.SetSize( 1 );

smoothingSigmasPerLevel[0] = 0;

registration->SetNumberOfLevels ( numberOfLevels );

registration->SetSmoothingSigmasPerLevel( smoothingSigmasPerLevel );

registration->SetShrinkFactorsPerLevel( shrinkFactorsPerLevel );

The registration process is triggered by an invocation of the Update() method. If something goes wrong during the initialization or execution of the registration an exception will be thrown. We should therefore place the Update() method inside a try/catch block as illustrated in the following lines.

In a real life application, you may attempt to recover from the error by taking more effective actions in the catch block. Here we are simply printing out a message and then terminating the execution of the program.

The result of the registration process is obtained using the GetTransform() method that returns a constant pointer to the output transform.

In the case of the itk::TranslationTransform, there is a straightforward interpretation of the parameters. Each element of the array corresponds to a translation along one spatial dimension.

The optimizer can be queried for the actual number of iterations performed to reach convergence. The GetCurrentIteration() method returns this value. A large number of iterations may be an indication that the learning rate has been set too small, which is undesirable since it results in long computational times.

The value of the image metric corresponding to the last set of parameters can be obtained with the GetValue() method of the optimizer.

Let’s execute this example over two of the images provided in Examples/Data:

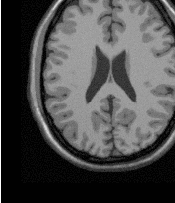

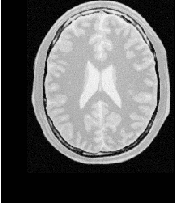

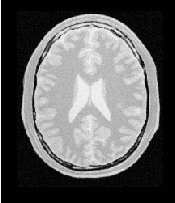

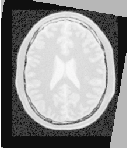

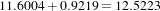

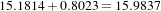

The second image is the result of intentionally translating the first image by (13,17) millimeters. Both images have unit-spacing and are shown in Figure 3.4. The registration takes 20 iterations and the resulting transform parameters are:

As expected, these values match quite well the misalignment that we intentionally introduced in the moving image.

It is common, as the last step of a registration task, to use the resulting transform to map the moving image into the fixed image space.

Before the mapping process, notice that we have not used the direct initialization of the output transform in this example, so the parameters of the moving initial transform are not reflected in the output parameters of the registration filter. Hence, a composite transform is needed to concatenate both initial and output transforms together.

double,

Dimension >;

CompositeTransformType::Pointer outputCompositeTransform =

CompositeTransformType::New();

outputCompositeTransform->AddTransform( movingInitialTransform );

outputCompositeTransform->AddTransform(

registration->GetModifiableTransform() );

Now the mapping process is easily done with the itk::ResampleImageFilter. Please refer to Section 2.9.4 for details on the use of this filter. First, a ResampleImageFilter type is instantiated using the image types. It is convenient to use the fixed image type as the output type since it is likely that the transformed moving image will be compared with the fixed image.

A resampling filter is created and the moving image is connected as its input.

The created output composite transform is also passed as input to the resampling filter.

As described in Section 2.9.4, the ResampleImageFilter requires additional parameters to be specified, in particular, the spacing, origin and size of the output image. The default pixel value is also set to a distinct gray level in order to highlight the regions that are mapped outside of the moving image.

resampler->SetSize( fixedImage->GetLargestPossibleRegion().GetSize() );

resampler->SetOutputOrigin( fixedImage->GetOrigin() );

resampler->SetOutputSpacing( fixedImage->GetSpacing() );

resampler->SetOutputDirection( fixedImage->GetDirection() );

resampler->SetDefaultPixelValue( 100 );

The output of the filter is passed to a writer that will store the image in a file. An itk::CastImageFilter is used to convert the pixel type of the resampled image to the final type used by the writer. The cast and writer filters are instantiated below.

The filters are created by invoking their New() method.

The filters are connected together and the Update() method of the writer is invoked in order to trigger the execution of the pipeline.

The fixed image and the transformed moving image can easily be compared using the itk::SubtractImageFilter. This pixel-wise filter computes the difference between homologous pixels of its two input images.

Note that the use of subtraction as a method for comparing the images is appropriate here because we chose to represent the images using a pixel type float. A different filter would have been used if the pixel type of the images were any of the unsigned integer types.

Since the differences between the two images may correspond to very low values of intensity, we rescale those intensities with a itk::RescaleIntensityImageFilter in order to make them more visible. This rescaling will also make it possible to visualize the negative values even if we save the difference image in a file format that only supports unsigned pixel values1 . We also reduce the DefaultPixelValue to “1” in order to prevent that value from absorbing the dynamic range of the differences between the two images.

FixedImageType,

OutputImageType >;

RescalerType::Pointer intensityRescaler = RescalerType::New();

intensityRescaler->SetInput( difference->GetOutput() );

intensityRescaler->SetOutputMinimum( 0 );

intensityRescaler->SetOutputMaximum( 255 );

resampler->SetDefaultPixelValue( 1 );

Its output can be passed to another writer.

For the purpose of comparison, the difference between the fixed image and the moving image before registration can also be computed by simply setting the transform to an identity transform. Note that the resampling is still necessary because the moving image does not necessarily have the same spacing, origin and number of pixels as the fixed image. Therefore a pixel-by-pixel operation cannot in general be performed. The resampling process with an identity transform will ensure that we have a representation of the moving image in the grid of the fixed image.

The complete pipeline structure of the current example is presented in Figure 3.6. The components of the registration method are depicted as well. Figure 3.5 (left) shows the result of resampling the moving image in order to map it onto the fixed image space. The top and right borders of the image appear in the gray level selected with the SetDefaultPixelValue() in the ResampleImageFilter. The center image shows the difference between the fixed image and the original moving image (i.e. the difference before the registration is performed). The right image shows the difference between the fixed image and the transformed moving image (i.e. after the registration has been performed). Both difference images have been rescaled in intensity in order to highlight those pixels where differences exist. Note that the final registration is still off by a fraction of a pixel, which causes bands around edges of anatomical structures to appear in the difference image. A perfect registration would have produced a null difference image.

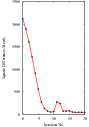

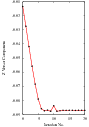

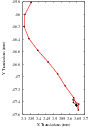

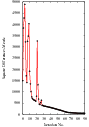

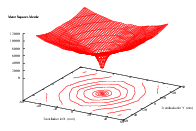

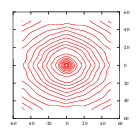

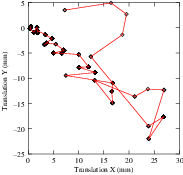

It is always useful to keep in mind that registration is essentially an optimization problem. Figure 3.7 helps to reinforce this notion by showing the trace of translations and values of the image metric at each iteration of the optimizer. It can be seen from the top figure that the step length is reduced progressively as the optimizer gets closer to the metric extrema. The bottom plot clearly shows how the metric value decreases as the optimization advances. The log plot helps to highlight the normal oscillations of the optimizer around the extrema value.

In this section, we used a very simple example to introduce the basic components of a registration process in ITKv4. However, studying this example alone is not enough to start using the itk::ImageRegistrationMethodv4. In order to choose the best registration practice for a specific application, knowledge of other registration method instantiations and their capabilities are required. For example, direct initialization of the output optimizable transform is shown in section 3.6.1. This method can simplify the registration process in many cases. Also, multi-resolution and multistage registration approaches are illustrated in sections 3.7 and 3.8. These examples illustrate the flexibility in the usage of ITKv4 registration method framework that can help to provide faster and more reliable registration processes.

This section presents internals of the registration process in ITKv4. Understanding what actually happens is necessary to have a correct interpretation of the results of a registration filter. It also helps to understand the most common difficulties that users encounter when they start using the ITKv4 registration framework:

These two topics tend to create confusion because they are implemented in different ways in other systems, and community members tend to have different expectations regarding how registration should work in ITKv4. The situation is further complicated by the way most people describe image operations, as if they were manually performed on a continuous picture on a piece of paper.

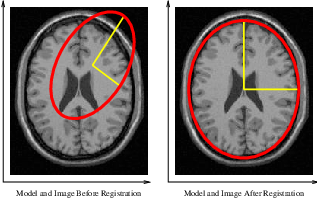

These concepts are discussed in this section through a general example shown in Figure 3.8.

Recall that ITKv4 does the registration in “physical” space where fixed, moving and virtual images are placed. Also, note that the term of virtual image is deceptive here since it does not refer to any actual image. In fact, the virtual image defines the origin, direction and the spacing of a space lattice that holds the output resampled image of the registration process. The virtual pixel lattice is illustrated in green at the top left side of Figure 3.8.

As shown in this figure, generally there are two transforms involved in the registration process even though only one of them is being optimized. T vm maps points from physical virtual space onto the physical space of the moving image, and in the same way T vf finds homologous points between physical virtual space and the physical space of the fixed image. Note that only T vm is optimized during the registration process. T vf cannot be optimized. The fixed transform usually is an identity transform since the virtual image lattice is commonly defined as the fixed image lattice.

When the registration starts, the algorithm goes through each grid point of the virtual lattice in a raster sweep. At each point the fixed and moving transforms find coordinates of the homologous points in the fixed and moving image physical spaces, and interpolators are used to find the pixel intensities if mapped points are in non-grid positions. These intensity values are passed to a cost function to find the current metric value.

Note the direction of the mapping transforms here. For example, if you consider the T vm transform, confusion often occurs since the transform shifts a virtual lattice point on the positive X direction. The visual effect of this mapping, once the moving image is resampled, is equivalent to manually shifting the moving image along the negative X direction. In the same way, when the T vm transform applies a clock-wise rotation to the virtual space points, the visual effect of this mapping, once the moving image has been resampled, is equivalent to manually rotating the moving image counter-clock-wise. The same relationships also occur with the T vf transform between the virtual space and the fixed image space.

This mapping direction is chosen because the moving image is resampled on the grid of the virtual image. In the resampling process, an algorithm iterates through every pixel of the output image and computes the intensity assigned to this pixel by mapping to its location in the moving image.

Instead, if we were to use the transform mapping coordinates from the moving image physical space into the virtual image physical space, then the resampling process would not guarantee that every pixel in the grid of the virtual image would receive one and only one value. In other words, the resampling would result in an image with holes and redundant or overlapping pixel values.

As seen in the previous examples, and as corroborated in the remaining examples in this chapter, the transform computed by the registration framework can be used directly in the resampling filter in order to map the moving image onto the discrete grid of the virtual image.

There are exceptional cases in which the transform desired is actually the inverse transform of the one computed by the ITK registration framework. Only those cases may require invoking the GetInverse() method that most transforms offer. Before attempting this, read the examples on resampling illustrated in section 2.9 in order to familiarize yourself with the correct interpretation of the transforms.

Now we come back to the situation illustrated in Figure 3.8. This figure shows the flexibility of the ITKv4 registration framework. We can register two images with different scales, sizes and resolutions. Also, we can create the output warped image with any desired size and resolution.

Nevertheless, note that the spatial transform computed during the registration process does not need to be concerned about a different number of pixels and different pixel sizes between fixed, moving and output images because the conversion from index space to the physical space implicitly takes care of the required scaling factor between the involved images.

One important consequence of this fact is that having the correct image origin, image pixel size, and image direction is fundamental for the success of the registration process in ITK, since we need this information to compute the exact location of each pixel lattice in the physical space; we must make sure that the correct values for the origin, spacing, and direction of all fixed, moving and virtual images are provided.

In this example, the spatial transform computed will physically map the brain from the moving image onto the virtual space and minimize its difference with the resampled brain from the fixed image into the virtual space. Fortunately in practice there is no need to resample the fixed image since the virtual image physical domain is often assumed to be the same as physical domain of the fixed image.

The source code for this section can be found in the file

ImageRegistration3.cxx.

Given the numerous parameters involved in tuning a registration method for a particular application, it is not uncommon for a registration process to run for several minutes and still produce a useless result. To avoid this situation it is quite helpful to track the evolution of the registration as it progresses. The following section illustrates the mechanisms provided in ITK for monitoring the activity of the ImageRegistrationMethodv4 class.

Insight implements the Observer/Command design pattern [20]. The classes involved in this implementation are the itk::Object, itk::Command and itk::EventObject classes. The Object is the base class of most ITK objects. This class maintains a linked list of pointers to event observers. The role of observers is played by the Command class. Observers register themselves with an Object, declaring that they are interested in receiving notification when a particular event happens. A set of events is represented by the hierarchy of the Event class. Typical events are Start, End, Progress and Iteration.

Registration is controlled by an itk::Optimizer, which generally executes an iterative process. Most Optimizer classes invoke an itk::IterationEvent at the end of each iteration. When an event is invoked by an object, this object goes through its list of registered observers (Commands) and checks whether any one of them has expressed interest in the current event type. Whenever such an observer is found, its corresponding Execute() method is invoked. In this context, Execute() methods should be considered callbacks. As such, some of the common sense rules of callbacks should be respected. For example, Execute() methods should not perform heavy computational tasks. They are expected to execute rapidly, for example, printing out a message or updating a value in a GUI.

The following code illustrates a simple way of creating a Observer/Command to monitor a registration process. This new class derives from the Command class and provides a specific implementation of the Execute() method. First, the header file of the Command class must be included.

Our custom command class is called CommandIterationUpdate. It derives from the Command class and declares for convenience the types Self and Superclass. This facilitates the use of standard macros later in the class implementation.

The following type alias declares the type of the SmartPointer capable of holding a reference to this object.

The itkNewMacro takes care of defining all the necessary code for the New() method. Those with curious minds are invited to see the details of the macro in the file itkMacro.h in the Insight/Code/Common directory.

In order to ensure that the New() method is used to instantiate the class (and not the C++ new operator), the constructor is declared protected.

Since this Command object will be observing the optimizer, the following type alias are useful for converting pointers when the Execute() method is invoked. Note the use of const on the declaration of OptimizerPointer. This is relevant since, in this case, the observer is not intending to modify the optimizer in any way. A const interface ensures that all operations invoked on the optimizer are read-only.

ITK enforces const-correctness. There is hence a distinction between the Execute() method that can be invoked from a const object and the one that can be invoked from a non-const object. In this particular example the non-const version simply invoke the const version. In a more elaborate situation the implementation of both Execute() methods could be quite different. For example, you could imagine a non-const interaction in which the observer decides to stop the optimizer in response to a divergent behavior. A similar case could happen when a user is controlling the registration process from a GUI.

Finally we get to the heart of the observer, the Execute() method. Two arguments are passed to this method. The first argument is the pointer to the object that invoked the event. The second argument is the event that was invoked.

Note that the first argument is a pointer to an Object even though the actual object invoking the event is probably a subclass of Object. In our case we know that the actual object is an optimizer. Thus we can perform a dynamic_cast to the real type of the object.

The next step is to verify that the event invoked is actually the one in which we are interested. This is checked using the RTTI2 support. The CheckEvent() method allows us to compare the actual type of two events. In this case we compare the type of the received event with an IterationEvent. The comparison will return true if event is of type IterationEvent or derives from IterationEvent. If we find that the event is not of the expected type then the Execute() method of this command observer should return without any further action.

If the event matches the type we are looking for, we are ready to query data from the optimizer. Here, for example, we get the current number of iterations, the current value of the cost function and the current position on the parameter space. All of these values are printed to the standard output. You could imagine more elaborate actions like updating a GUI or refreshing a visualization pipeline.

This concludes our implementation of a minimal Command class capable of observing our registration method. We can now move on to configuring the registration process.

Once all the registration components are in place we can create one instance of our observer. This is done with the standard New() method and assigned to a SmartPointer.

The newly created command is registered as observer on the optimizer, using the AddObserver() method. Note that the event type is provided as the first argument to this method. In order for the RTTI mechanism to work correctly, a newly created event of the desired type must be passed as the first argument. The second argument is simply the smart pointer to the observer. Figure 3.9 illustrates the interaction between the Command/Observer class and the registration method.

At this point, we are ready to execute the registration. The typical call to Update() will do it. Note again the use of the try/catch block around the Update() method in case an exception is thrown.

The registration process is applied to the following images in Examples/Data:

It produces the following output.

You can verify from the code in the Execute() method that the first column is the iteration number, the second column is the metric value and the third and fourth columns are the parameters of the transform, which is a 2D translation transform in this case. By tracking these values as the registration progresses, you will be able to determine whether the optimizer is advancing in the right direction and whether the step-length is reasonable or not. That will allow you to interrupt the registration process and fine-tune parameters without having to wait until the optimizer stops by itself.

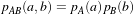

Some of the most challenging cases of image registration arise when images of different modalities are involved. In such cases, metrics based on direct comparison of gray levels are not applicable. It has been extensively shown that metrics based on the evaluation of mutual information are well suited for overcoming the difficulties of multi-modality registration.

The concept of Mutual Information is derived from Information Theory and its application to image registration has been proposed in different forms by different groups [12, 37, 63]; a more detailed review can be found in [23, 46]. The Insight Toolkit currently provides two different implementations of Mutual Information metrics (see section 3.11 for details). The following example illustrates the practical use of one of these metrics.

The source code for this section can be found in the file

ImageRegistration4.cxx.

In this example, we will solve a simple multi-modality problem using an implementation of mutual information. This implementation was published by Mattes et. al [40].

First, we include the header files of the components used in this example.

In this example the image types and all registration components, except the metric, are declared as in Section 3.2. The Mattes mutual information metric type is instantiated using the image types.

The metric is created using the New() method and then connected to the registration object.

The metric requires the user to specify the number of bins used to compute the entropy. In a typical application, 50 histogram bins are sufficient. Note however, that the number of bins may have dramatic effects on the optimizer’s behavior.

To calculate the image gradients, an image gradient calculator based on ImageFunction is used instead of image gradient filters. Image gradient methods are defined in the superclass ImageToImageMetricv4.

Notice that in the ITKv4 registration framework, optimizers always try to minimize the cost function, and the metrics always return a parameter and derivative result that improves the optimization, so this metric computes the negative mutual information. The optimization parameters are tuned for this example, so they are not exactly the same as the parameters used in Section 3.2.

Note that large values of the learning rate will make the optimizer unstable. Small values, on the other hand, may result in the optimizer needing too many iterations in order to walk to the extrema of the cost function. The easy way of fine tuning this parameter is to start with small values, probably in the range of {1.0,5.0}. Once the other registration parameters have been tuned for producing convergence, you may want to revisit the learning rate and start increasing its value until you observe that the optimization becomes unstable. The ideal value for this parameter is the one that results in a minimum number of iterations while still keeping a stable path on the parametric space of the optimization. Keep in mind that this parameter is a multiplicative factor applied on the gradient of the metric. Therefore, its effect on the optimizer step length is proportional to the metric values themselves. Metrics with large values will require you to use smaller values for the learning rate in order to maintain a similar optimizer behavior.

Whenever the regular step gradient descent optimizer encounters change in the direction of movement in the parametric space, it reduces the size of the step length. The rate at which the step length is reduced is controlled by a relaxation factor. The default value of the factor is 0.5. This value, however may prove to be inadequate for noisy metrics since they tend to induce erratic movements on the optimizers and therefore result in many directional changes. In those conditions, the optimizer will rapidly shrink the step length while it is still too far from the location of the extrema in the cost function. In this example we set the relaxation factor to a number higher than the default in order to prevent the premature shrinkage of the step length.

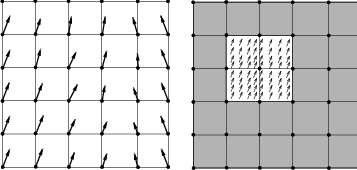

Instead of using the whole virtual domain (usually fixed image domain) for the registration, we can use a spatial sampled point set by supplying an arbitrary point list over which to evaluate the metric. The point list is expected to be in the fixed image domain, and the points are transformed into the virtual domain internally as needed. The user can define the point set via SetFixedSampledPointSet(), and the point set is used by calling SetUsedFixedSampledPointSet().

Also, instead of dealing with the metric directly, the user may define the sampling percentage and sampling strategy for the registration framework at each level. In this case, the registration filter manages the sampling operation over the fixed image space based on the input strategy (REGULAR, RANDOM) and passes the sampled point set to the metric internally.

The number of spatial samples to be used depends on the content of the image. If the images are smooth and do not contain many details, the number of spatial samples can usually be as low as 1% of the total number of pixels in the fixed image. On the other hand, if the images are detailed, it may be necessary to use a much higher proportion, such as 20% to 50%. Increasing the number of samples improves the smoothness of the metric, and therefore helps when this metric is used in conjunction with optimizers that rely of the continuity of the metric values. The trade-off, of course, is that a larger number of samples results in longer computation times per every evaluation of the metric.

One mechanism for bringing the metric to its limit is to disable the sampling and use all the pixels present in the FixedImageRegion. This can be done with the SetUseSampledPointSet( false ) method. You may want to try this option only while you are fine tuning all other parameters of your registration. We don’t use this method in this current example though.

It has been demonstrated empirically that the number of samples is not a critical parameter for the registration process. When you start fine tuning your own registration process, you should start using high values of number of samples, for example in the range of 20% to 50% of the number of pixels in the fixed image. Once you have succeeded to register your images you can then reduce the number of samples progressively until you find a good compromise on the time it takes to compute one evaluation of the metric. Note that it is not useful to have very fast evaluations of the metric if the noise in their values results in more iterations being required by the optimizer to converge. You must then study the behavior of the metric values as the iterations progress, just as illustrated in section 3.4.

In ITKv4, a single virtual domain or spatial sample point set is used for the all iterations of the registration process. The use of a single sample set results in a smooth cost function that can improve the functionality of the optimizer.

The spatial point set is pseudo randomly generated. For reproducible results an integer seed should set.

Let’s execute this example over two of the images provided in Examples/Data:

The second image is the result of intentionally translating the image BrainProtonDensitySliceBorder20.png by (13,17) millimeters. Both images have unit-spacing and are shown in Figure 3.10. The registration process converges after 46 iterations and produces the following results:

These values are a very close match to the true misalignment introduced in the moving image.

The result of resampling the moving image is presented on the left of Figure 3.11. The center and right parts of the figure present a checkerboard composite of the fixed and moving images before and after registration respectively.

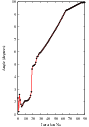

Figure 3.12 (upper-left) shows the sequence of translations followed by the optimizer as it searched the parameter space. The upper-right figure presents a closer look at the convergence basin for the last iterations of the optimizer. The bottom of the same figure shows the sequence of metric values computed as the optimizer searched the parameter space.

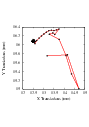

You must note however that there are a number of non-trivial issues involved in the fine tuning of parameters for the optimization. For example, the number of bins used in the estimation of Mutual Information has a dramatic effect on the performance of the optimizer. In order to illustrate this effect, the same example has been executed using a range of different values for the number of bins, from 10 to 30. If you repeat this experiment, you will notice that depending on the number of bins used, the optimizer’s path may get trapped early on in local minima. Figure 3.13 shows the multiple paths that the optimizer took in the parametric space of the transform as a result of different selections on the number of bins used by the Mattes Mutual Information metric. Note that many of the paths die in local minima instead of reaching the extrema value on the upper right corner.

Effects such as the one illustrated here highlight how useless is to compare different algorithms based on a non-exhaustive search of their parameter setting. It is quite difficult to be able to claim that a particular selection of parameters represent the best combination for running a particular algorithm. Therefore, when comparing the performance of two or more different algorithms, we are faced with the challenge of proving that none of the algorithms involved in the comparison are being run with a sub-optimal set of parameters.

The plots in Figures 3.12 and 3.13 were generated using Gnuplot3 . The scripts used for this purpose are available in the ITKSoftwareGuide Git repository under the directory

ITKSoftwareGuide/SoftwareGuide/Art.

Data for the plots were taken directly from the output that the Command/Observer in this example prints out to the console. The output was processed with the UNIX editor sed4 in order to remove commas and brackets that were confusing for Gnuplot’s parser. Both the shell script for running sed and for running Gnuplot are available in the directory indicated above. You may find useful to run them in order to verify the results presented here, and to eventually modify them for profiling your own registrations.

Open Science is not just an abstract concept. Open Science is something to be practiced every day with the simple gesture of sharing information with your peers, and by providing all the tools that they need for replicating the results that you are reporting. In Open Science, the only bad results are those that can not be replicated5 . Science is dead when people blindly trust authorities 6 instead of verifying their statements by performing their own experiments [47, 48].

The ITK image coordinate origin is typically located in one of the image corners (see the Defining Origin and Spacing section of Book 1 for details). This results in counter-intuitive transform behavior when rotations and scaling are involved. Users tend to assume that rotations and scaling are performed around a fixed point at the center of the image. In order to compensate for this difference in expected interpretation, the concept of center of transform has been introduced into the toolkit. This parameter is generally a fixed parameter that is not optimized during registration, so initialization is crucial to get efficient and accurate results. The following sections describe the main characteristics and effects of initializing the center of a transform.

The source code for this section can be found in the file

ImageRegistration5.cxx.

This example illustrates the use of the itk::Euler2DTransform for performing rigid registration in 2D. The example code is for the most part identical to that presented in Section 3.2. The main difference is the use of the Euler2DTransform here instead of the itk::TranslationTransform.

In addition to the headers included in previous examples, the following header must also be included.

The transform type is instantiated using the code below. The only template parameter for this class is the representation type of the space coordinates.

In the Hello World! example, we used Fixed/Moving initial transforms to initialize the registration configuration. That approach was good to get an intuition of the registration method, specifically when we aim to run a multistage registration process, from which the output of each stage can be used to initialize the next registration stage.

To get a better underestanding of the registration process in such situations, consider an example of 3 stages registration process that is started using an initial moving transform (Γmi). Multiple stages are handled by linking multiple instantiations of the itk::ImageRegistrationMethodv4 class. Inside the registration filter of the first stage, the initial moving transform is added to an internal composite transform along with an updatable identity transform (Γu). Although the whole composite transform is used for metric evaluation, only the Γu is set to be updated by the optimizer at each iteration. The Γu will be considered as the output transform of the current stage when the optimization process is converged. This implies that the output of this stage does not include the initialization parameters, so we need to concatenate the output and the initialization transform into a composite transform to be considered as the final transform of the first registration stage.

T 1(x) = Γmi(Γstage1(x))

Consider that, as explained in section 3.3, the above transform is a mapping from the vitual domain (i.e. fixed image space, when no fixed initial transform) to the moving image space.

Then, the result transform of the first stage will be used as the initial moving transform for the second stage of the registration process, and this approach goes on until the last stage of the registration process.

At the end of the registration process, the Γmi and the outputs of each stage can be concatenated into a final composite transform that is considered to be the final output of the whole registration process.

I′m(x) = Im(Γmi(Γstage1(Γstage2(Γstage3(x)))))

The above approach is especially useful if individual stages are characterized by different types of transforms, e.g. when we run a rigid registration process that is proceeded by an affine registration which is completed by a BSpline registration at the end.

In addition to the above method, there is also a direct initialization method in which the initial transform will be optimized directly. In this way the initial transform will be modified during the registration process, so it can be used as the final transform when the registration process is completed. This direct approach is conceptually close to what was happening in ITKv3 registration.

Using this method is very simple and efficient when we have only one level of registration, which is the case in this example. Also, a good application of this initialization method in a multi-stage scenario is when two consequent stages have the same transform types, or at least the initial parameters can easily be inferred from the result of the previous stage, such as when a translation transform is followed by a rigid transform.

The direct initialization approach is shown by the current example in which we try to initialize the parameters of the optimizable transform (Γu) directly.

For this purpose, first, the initial transform object is constructed below. This transform will be initialized, and its initial parameters will be used when the registration process starts.

In this example, the input images are taken from readers. The code below updates the readers in order to ensure that the image parameters (size, origin and spacing) are valid when used to initialize the transform. We intend to use the center of the fixed image as the rotation center and then use the vector between the fixed image center and the moving image center as the initial translation to be applied after the rotation.

The center of rotation is computed using the origin, size and spacing of the fixed image.

const SpacingType fixedSpacing = fixedImage->GetSpacing();

const OriginType fixedOrigin = fixedImage->GetOrigin();

const RegionType fixedRegion = fixedImage->GetLargestPossibleRegion();

const SizeType fixedSize = fixedRegion.GetSize();

TransformType::InputPointType centerFixed;

centerFixed[0] = fixedOrigin[0] + fixedSpacing[0] ⋆ fixedSize[0] / 2.0;

centerFixed[1] = fixedOrigin[1] + fixedSpacing[1] ⋆ fixedSize[1] / 2.0;

The center of the moving image is computed in a similar way.

const SpacingType movingSpacing = movingImage->GetSpacing();

const OriginType movingOrigin = movingImage->GetOrigin();

const RegionType movingRegion = movingImage->GetLargestPossibleRegion();

const SizeType movingSize = movingRegion.GetSize();

TransformType::InputPointType centerMoving;

centerMoving[0] = movingOrigin[0] + movingSpacing[0] ⋆ movingSize[0] / 2.0;

centerMoving[1] = movingOrigin[1] + movingSpacing[1] ⋆ movingSize[1] / 2.0;

Then, we initialize the transform by passing the center of the fixed image as the rotation center with the SetCenter() method. Also, the translation is set as the vector relating the center of the moving image to the center of the fixed image. This last vector is passed with the method SetTranslation().

Let’s finally initialize the rotation with a zero angle.

Now the current parameters of the initial transform will be set to a registration method, so they can be assigned to the Γu directly. Note that you should not confuse the following function with the SetMoving(Fixed)InitialTransform() methods that were used in Hello World! example.

Keep in mind that the scale of units in rotation and translation is quite different. For example, here we know that the first element of the parameters array corresponds to the angle that is measured in radians, while the other parameters correspond to the translations that are measured in millimeters, so a naive application of gradient descent optimizer will not produce a smooth change of parameters, because a similar change of δ to each parameter will produce a different magnitude of impact on the transform. As the result, we need “parameter scales” to customize the learning rate for each parameter. We can take advantage of the scaling functionality provided by the optimizers.

In this example we use small factors in the scales associated with translations. However, for the transforms with larger parameters sets, it is not intuitive for a user to set the scales. Fortunately, a framework for automated estimation of parameter scales is provided by ITKv4 that will be discussed later in the example of section 3.8.

OptimizerScalesType optimizerScales(

initialTransform->GetNumberOfParameters() );

const double translationScale = 1.0 / 1000.0;

optimizerScales[0] = 1.0;

optimizerScales[1] = translationScale;

optimizerScales[2] = translationScale;

optimizer->SetScales( optimizerScales );

Next we set the normal parameters of the optimization method. In this case we are using an itk::RegularStepGradientDescentOptimizerv4. Below, we define the optimization parameters like the relaxation factor, learning rate (initial step length), minimal step length and number of iterations. These last two act as stopping criteria for the optimization.

Let’s execute this example over two of the images provided in Examples/Data:

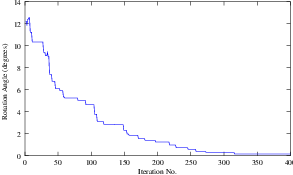

The second image is the result of intentionally rotating the first image by 10 degrees around the geometrical center of the image. Both images have unit-spacing and are shown in Figure 3.14. The registration takes 17 iterations and produces the results:

These results are interpreted as

As expected, these values match the misalignment intentionally introduced into the moving image quite well, since 10 degrees is about 0.174532 radians.

Figure 3.15 shows from left to right the resampled moving image after registration, the difference between the fixed and moving images before registration, and the difference between the fixed and resampled moving image after registration. It can be seen from the last difference image that the rotational component has been solved but that a small centering misalignment persists.

Figure 3.16 shows plots of the main output parameters produced from the registration process. This includes the metric values at every iteration, the angle values at every iteration, and the translation components of the transform as the registration progresses.

Let’s now consider the case in which rotations and translations are present in the initial registration, as in the following pair of images:

The second image is the result of intentionally rotating the first image by 10 degrees and then translating it 13mm in X and 17mm in Y . Both images have unit-spacing and are shown in Figure 3.17. In order to accelerate convergence it is convenient to use a larger step length as shown here.

optimizer->SetMaximumStepLength( 1.3 );

The registration now takes 37 iterations and produces the following results:

These parameters are interpreted as

These values approximately match the initial misalignment intentionally introduced into the moving image, since 10 degrees is about 0.174532 radians. The horizontal translation is well resolved while the vertical translation ends up being off by about one millimeter.

Figure 3.18 shows the output of the registration. The rightmost image of this figure shows the difference between the fixed image and the resampled moving image after registration.

Figure 3.19 shows plots of the main output registration parameters when the rotation and translations are combined. These results include the metric values at every iteration, the angle values at every iteration, and the translation components of the registration as the registration converges. It can be seen from the smoothness of these plots that a larger step length could have been supported easily by the optimizer. You may want to modify this value in order to get a better idea of how to tune the parameters.

The source code for this section can be found in the file

ImageRegistration6.cxx.

This example illustrates the use of the itk::Euler2DTransform for performing registration. The example code is for the most part identical to the one presented in Section 3.6.1. Even though this current example is done in 2D, the class itk::CenteredTransformInitializer is quite generic and could be used in other dimensions. The objective of the initializer class is to simplify the computation of the center of rotation and the translation required to initialize certain transforms such as the Euler2DTransform. The initializer accepts two images and a transform as inputs. The images are considered to be the fixed and moving images of the registration problem, while the transform is the one used to register the images.

The CenteredTransformInitializer supports two modes of operation. In the first mode, the centers of the images are computed as space coordinates using the image origin, size and spacing. The center of the fixed image is assigned as the rotational center of the transform while the vector going from the fixed image center to the moving image center is passed as the initial translation of the transform. In the second mode, the image centers are not computed geometrically but by using the moments of the intensity gray levels. The center of mass of each image is computed using the helper class itk::ImageMomentsCalculator. The center of mass of the fixed image is passed as the rotational center of the transform while the vector going from the fixed image center of mass to the moving image center of mass is passed as the initial translation of the transform. This second mode of operation is quite convenient when the anatomical structures of interest are not centered in the image. In such cases the alignment of the centers of mass provides a better rough initial registration than the simple use of the geometrical centers. The validity of the initial registration should be questioned when the two images are acquired in different imaging modalities. In those cases, the center of mass of intensities in one modality does not necessarily match the center of mass of intensities in the other imaging modality.

The following are the most relevant headers in this example.

The transform type is instantiated using the code below. The only template parameter of this class is the representation type of the space coordinates.

Like the previous section, a direct initialization method is used here. The transform object is constructed below. This transform will be initialized, and its initial parameters will be considered as the parameters to be used when the registration process begins.

The input images are taken from readers. It is not necessary to explicitly call Update() on the readers since the CenteredTransformInitializer class will do it as part of its initialization. The following code instantiates the initializer. This class is templated over the fixed and moving images type as well as the transform type. An initializer is then constructed by calling the New() method and assigning the result to a itk::SmartPointer.

The initializer is now connected to the transform and to the fixed and moving images.

The use of the geometrical centers is selected by calling GeometryOn() while the use of center of mass is selected by calling MomentsOn(). Below we select the center of mass mode.

Finally, the computation of the center and translation is triggered by the InitializeTransform() method. The resulting values will be passed directly to the transform.

The remaining parameters of the transform are initialized as before.

Now the initialized transform object will be set to the registration method, and the starting point of the registration is defined by its initial parameters.

If the InPlaceOn() method is called, this initialized transform will be the output transform object or “grafted” to the output. Otherwise, this “InitialTransform” will be deep-copied or “cloned” to the output.

Since the registration filter has InPlace set, the transform object is grafted to the output and is updated by the registration method.

Let’s execute this example over some of the images provided in Examples/Data, for example:

The second image is the result of intentionally rotating the first image by 10 degrees around the geometric center and shifting it 13mm in X and 17mm in Y . Both images have unit-spacing and are shown in Figure 3.14. The registration takes 21 iterations and produces:

These parameters are interpreted as

Note that the reported translation is not the translation of (13,17) that might be expected. The reason is that we used the center of mass (111.204,131.591) for the fixed center, while the input was rotated about the geometric center (110.5,128.5). It is more illustrative in this case to take a look at the actual rotation matrix and offset resulting from the five parameters.

Which produces the following output.

This output illustrates how counter-intuitive the mix of center of rotation and translations can be. Figure 3.20 will clarify this situation. The figure shows the original image on the left. A rotation of 10∘ around the center of the image is shown in the middle. The same rotation performed around the origin of coordinates is shown on the right. It can be seen here that changing the center of rotation introduces additional translations.

Let’s analyze what happens to the center of the image that we just registered. Under the point of view of rotating 10∘ around the center and then applying a translation of (13mm,17mm). The image has a size of (221×257) pixels and unit spacing. Hence its center has coordinates (110.5,128.5). Since the rotation is done around this point, the center behaves as the fixed point of the transformation and remains unchanged. Then with the (13mm,17mm) translation it is mapped to (123.5,145.5) which becomes its final position.

The matrix and offset that we obtained at the end of the registration indicate that this should be equivalent to a rotation of 10∘ around the origin, followed by a translation of (36.99,-1.23). Let’s compute this in detail. First the rotation of the image center by 10∘ around the origin will move the point (110.5,128.5) to (86.51,145.74). Now, applying a translation of (36.99,-1.23) maps this point to (123.50,144.50), which is very close to the result of our previous computation.

It is unlikely that we could have chosen these translations as the initial guess, since we tend to think about images in a coordinate system whose origin is in the center of the image.

This underscores the importance of using good initialization for the center for a transform fixed parameter. By using either the center of geometry or center of mass for initialization the rotation and translation parameters may have a more intuitive interpretation than if only the optimization parameters of translation and rotation are initialized.

Figure 3.22 shows the output of the registration. The image on the right of this figure shows the differences between the fixed image and the resampled moving image after registration.

Figure 3.23 plots the output parameters of the registration process. It includes the metric values at every iteration, the angle values at every iteration, and the values of the translation components as the registration progresses. Note that this is the complementary translation as used in the transform, not the actual total translation that is used in the transform offset. We could modify the observer to print the total offset instead of printing the array of parameters. Let’s call that an exercise for the reader!

The source code for this section can be found in the file

ImageRegistration7.cxx.

This example illustrates the use of the itk::Simularity2DTransform class for performing registration in 2D. The example code is for the most part identical to the code presented in Section 3.6.2. The main difference is the use of itk::Simularity2DTransform here rather than the itk::Euler2DTransform class.

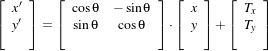

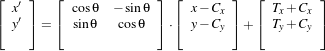

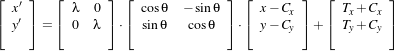

A similarity transform can be seen as a composition of rotations, translations and uniform  scaling. It preserves angles and maps lines into lines. This transform is implemented in the toolkit as

deriving from a rigid 2D transform and with a scale parameter added.

scaling. It preserves angles and maps lines into lines. This transform is implemented in the toolkit as

deriving from a rigid 2D transform and with a scale parameter added.

When using this transform, attention should be paid to the fact that scaling and translations are not independent. In the same way that rotations can locally be seen as translations, scaling also results in local displacements. Scaling is performed in general with respect to the origin of coordinates. However, we already saw how ambiguous that could be in the case of rotations. For this reason, this transform also allows users to setup a specific center. This center is used both for rotation and scaling.

In addition to the headers included in previous examples, here the following header must be included.

The Transform class is instantiated using the code below. The only template parameter of this class is the representation type of the space coordinates.

As before, the transform object is constructed and initialized before it is passed to the registration filter.

In this example, we again use the helper class itk::CenteredTransformInitializer to compute a reasonable value for the initial center of rotation and scaling along with an initial translation.

TransformType,

FixedImageType,

MovingImageType >;

TransformInitializerType::Pointer initializer

= TransformInitializerType::New();

initializer->SetTransform( transform );

initializer->SetFixedImage( fixedImageReader->GetOutput() );

initializer->SetMovingImage( movingImageReader->GetOutput() );

initializer->MomentsOn();

initializer->InitializeTransform();

The remaining parameters of the transform are initialized below.

Now the initialized transform object will be set to the registration method, and its initial parameters are used to initialize the registration process.

Also, by calling the InPlaceOn() method, this initialized transform will be the output transform object or “grafted” to the output of the registration process.

Keeping in mind that the scale of units in scaling, rotation and translation are quite different, we take advantage of the scaling functionality provided by the optimizers. We know that the first element of the parameters array corresponds to the scale factor, the second corresponds to the angle, third and fourth are the remaining translation. We use henceforth small factors in the scales associated with translations.

OptimizerScalesType optimizerScales( transform->GetNumberOfParameters() );

const double translationScale = 1.0 / 100.0;

optimizerScales[0] = 10.0;

optimizerScales[1] = 1.0;

optimizerScales[2] = translationScale;

optimizerScales[3] = translationScale;

optimizer->SetScales( optimizerScales );

We also set the ordinary parameters of the optimization method. In this case we are using a itk::RegularStepGradientDescentOptimizerv4. Below we define the optimization parameters, i.e. initial learning rate (step length), minimal step length and number of iterations. The last two act as stopping criteria for the optimization.

Let’s execute this example over some of the images provided in Examples/Data, for example:

The second image is the result of intentionally rotating the first image by 10 degrees, scaling by 1∕1.2 and then translating by (-13,-17). Both images have unit-spacing and are shown in Figure 3.24. The registration takes 53 iterations and produces:

That are interpreted as

These values approximate the misalignment intentionally introduced into the moving image. Since 10 degrees is about 0.174532 radians.

Figure 3.25 shows the output of the registration. The right image shows the squared magnitude of pixel differences between the fixed image and the resampled moving image.

Figure 3.26 shows the plots of the main output parameters of the registration process. The metric values at every iteration are shown on the left. The rotation angle and scale factor values are shown in the two center plots while the translation components of the registration are presented in the plot on the right.

The source code for this section can be found in the file

ImageRegistration8.cxx.

This example illustrates the use of the itk::VersorRigid3DTransform class for performing registration of two 3D images. The class itk::CenteredTransformInitializer is used to initialize the center and translation of the transform. The case of rigid registration of 3D images is probably one of the most common uses of image registration.

The following are the most relevant headers of this example.

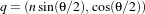

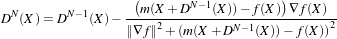

The parameter space of the VersorRigid3DTransform is not a vector space, because addition is not a closed operation in the space of versor components. Hence, we need to use Versor composition operation to update the first three components of the parameter array (rotation parameters), and Vector addition for updating the last three components of the parameters array (translation parameters) [24, 27].

In the previous version of ITK, a special optimizer, itk::VersorRigid3DTransformOptimizer was needed for registration to deal with versor computations. Fortunately in ITKv4, the itk::RegularStepGradientDescentOptimizerv4 can be used for both vector and versor transform optimizations because, in the new registration framework, the task of updating parameters is delegated to the moving transform itself. The UpdateTransformParameters method is implemented in the itk::Transform class as a virtual function, and all the derived transform classes can have their own implementations of this function. Due to this fact, the updating function is re-implemented for versor transforms so it can handle versor composition of the rotation parameters.

The Transform class is instantiated using the code below. The only template parameter to this class is the representation type of the space coordinates.

The initial transform object is constructed below. This transform will be initialized, and its initial parameters will be used when the registration process starts.

The input images are taken from readers. It is not necessary here to explicitly call Update() on the readers since the itk::CenteredTransformInitializer will do it as part of its computations. The following code instantiates the type of the initializer. This class is templated over the fixed and moving image types as well as the transform type. An initializer is then constructed by calling the New() method and assigning the result to a smart pointer.

The initializer is now connected to the transform and to the fixed and moving images.

The use of the geometrical centers is selected by calling GeometryOn() while the use of center of mass is selected by calling MomentsOn(). Below we select the center of mass mode.

Finally, the computation of the center and translation is triggered by the InitializeTransform() method. The resulting values will be passed directly to the transform.

The rotation part of the transform is initialized using a itk::Versor which is simply a unit quaternion. The VersorType can be obtained from the transform traits. The versor itself defines the type of the vector used to indicate the rotation axis. This trait can be extracted as VectorType. The following lines create a versor object and initialize its parameters by passing a rotation axis and an angle.

Now the current initialized transform will be set to the registration method, so its initial parameters can be used to initialize the registration process.

Let’s execute this example over some of the images available in the following website

http://public.kitware.com/pub/itk/Data/BrainWeb.

Note that the images in this website are compressed in .tgz files. You should download these files and decompress them in your local system. After decompressing and extracting the files you could take a pair of volumes, for example the pair:

The second image is the result of intentionally rotating the first image by 10 degrees around the origin and shifting it 15mm in X.

Also, instead of doing the above steps manually, you can turn on the following flag in your build environment:

ITK_USE_BRAINWEB_DATA

Then, the above data will be loaded to your local ITK build directory.

The registration takes 21 iterations and produces:

That are interpreted as

This Versor is equivalent to a rotation of 9.98 degrees around the Z axis.

Note that the reported translation is not the translation of (15.0,0.0,0.0) that we may be naively expecting. The reason is that the VersorRigid3DTransform is applying the rotation around the center found by the CenteredTransformInitializer and then adding the translation vector shown above.

It is more illustrative in this case to take a look at the actual rotation matrix and offset resulting from the 6 parameters.

The output of this print statements is

From the rotation matrix it is possible to deduce that the rotation is happening in the X,Y plane and that the angle is on the order of arcsin(0.173769) which is very close to 10 degrees, as we expected.

Figure 3.28 shows the output of the registration. The center image in this figure shows the differences between the fixed image and the resampled moving image before the registration. The image on the right side presents the difference between the fixed image and the resampled moving image after the registration has been performed. Note that these images are individual slices extracted from the actual volumes. For details, look at the source code of this example, where the ExtractImageFilter is used to extract a slice from the the center of each one of the volumes. One of the main purposes of this example is to illustrate that the toolkit can perform registration on images of any dimension. The only limitations are, as usual, the amount of memory available for the images and the amount of computation time that it will take to complete the optimization process.

Figure 3.29 shows the plots of the main output parameters of the registration process. The Z component of the versor is plotted as an indication of how the rotation progresses. The X,Y translation components of the registration are plotted at every iteration too.

Shell and Gnuplot scripts for generating the diagrams in Figure 3.29 are available in the ITKSoftwareGuide Git repository under the directory

ITKSoftwareGuide/SoftwareGuide/Art.

You are strongly encouraged to run the example code, since only in this way can you gain first-hand experience with the behavior of the registration process. Once again, this is a simple reflection of the philosophy that we put forward in this book:

If you can not replicate it, then it does not exist!

We have seen enough published papers with pretty pictures, presenting results that in practice are impossible to replicate. That is vanity, not science.

The source code for this section can be found in the file

ImageRegistration9.cxx.

This example illustrates the use of the itk::AffineTransform for performing registration in 2D. The example code is, for the most part, identical to that in 3.6.2. The main difference is the use of the AffineTransform here instead of the itk::Euler2DTransform. We will focus on the most relevant changes in the current code and skip the basic elements already explained in previous examples.

Let’s start by including the header file of the AffineTransform.

We then define the types of the images to be registered.

The transform type is instantiated using the code below. The template parameters of this class are the representation type of the space coordinates and the space dimension.

The transform object is constructed below and is initialized before the registration process starts.

In this example, we again use the itk::CenteredTransformInitializer helper class in order to compute reasonable values for the initial center of rotation and the translations. The initializer is set to use the center of mass of each image as the initial correspondence correction.

TransformType,

FixedImageType,

MovingImageType >;

TransformInitializerType::Pointer initializer

= TransformInitializerType::New();

initializer->SetTransform( transform );

initializer->SetFixedImage( fixedImageReader->GetOutput() );

initializer->SetMovingImage( movingImageReader->GetOutput() );

initializer->MomentsOn();

initializer->InitializeTransform();

Now we pass the transform object to the registration filter, and it will be grafted to the output transform of the registration filter by updating its parameters during the the registration process.

Keeping in mind that the scale of units in scaling, rotation and translation are quite different, we take advantage of the scaling functionality provided by the optimizers. We know that the first N ×N elements of the parameters array correspond to the rotation matrix factor, and the last N are the components of the translation to be applied after multiplication with the matrix is performed.

OptimizerScalesType optimizerScales( transform->GetNumberOfParameters() );

optimizerScales[0] = 1.0;

optimizerScales[1] = 1.0;

optimizerScales[2] = 1.0;

optimizerScales[3] = 1.0;

optimizerScales[4] = translationScale;

optimizerScales[5] = translationScale;

optimizer->SetScales( optimizerScales );