This chapter introduces the most commonly used filters found in the toolkit. Most of these filters are intended to process images. They will accept one or more images as input and will produce one or more images as output. ITK is based on a data pipeline architecture in which the output of one filter is passed as input to another filter. (See the Data Processing Pipeline section in Book 1 for more information.)

The thresholding operation is used to change or identify pixel values based on one or more specified values (called threshold values). The following sections describe how to perform thresholding operations using ITK.

The source code for this section can be found in the file BinaryThresholdImageFilter.cxx.

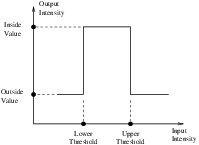

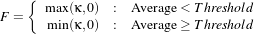

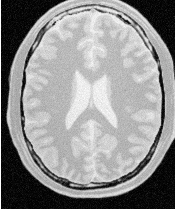

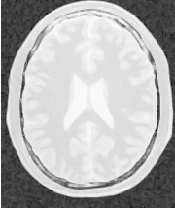

This example illustrates the use of the binary threshold image filter. This filter transforms an image into a binary image by changing the pixel values according to the rule illustrated in Figure 2.1. The user defines two thresholds, Upper and Lower, and two intensity values, Inside and Outside. For each pixel in the input image, the value of the pixel is compared with the lower and upper thresholds. If the pixel value lies inside the range [Lower, Upper], the output pixel is assigned the InsideValue; otherwise, it is assigned the OutsideValue. Thresholding is commonly applied as the last operation of a segmentation pipeline.
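The rule of Figure 2.1 can be sketched as a plain C++ function over a flat `std::vector` of pixels. This is a minimal illustration, not ITK's implementation; the helper name `BinaryThreshold` is hypothetical. As in the filter, the thresholds share the input pixel type, while the inside and outside values share the output pixel type.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: applies the binary threshold rule pixel by pixel.
// Values in the closed interval [lower, upper] become insideValue;
// every other value becomes outsideValue.
template <typename TIn, typename TOut>
std::vector<TOut> BinaryThreshold(const std::vector<TIn> &input, TIn lower,
                                  TIn upper, TOut insideValue,
                                  TOut outsideValue) {
  assert(lower <= upper);
  std::vector<TOut> output;
  output.reserve(input.size());
  for (const TIn value : input) {
    const bool inside = (lower <= value) && (value <= upper);
    output.push_back(inside ? insideValue : outsideValue);
  }
  return output;
}
```

For example, thresholding the unsigned char values {0, 50, 100, 150, 200} with [50, 150], an inside value of 255, and an outside value of 0 yields {0, 255, 255, 255, 0}.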

The first step required to use the itk::BinaryThresholdImageFilter is to include its header file.

The next step is to decide which pixel types to use for the input and output images.

The input and output image types are now defined using their respective pixel types and dimensions.

The filter type can be instantiated using the input and output image types defined above.

An itk::ImageFileReader class is also instantiated in order to read image data from a file. (See Section 1 on page 3 for more information about reading and writing data.)

An itk::ImageFileWriter is instantiated in order to write the output image to a file.

Both the filter and the reader are created by invoking their New() methods and assigning the result to itk::SmartPointers.

The image obtained with the reader is passed as input to the BinaryThresholdImageFilter.

The method SetOutsideValue() defines the intensity value to be assigned to those pixels whose intensities are outside the range defined by the lower and upper thresholds. The method SetInsideValue() defines the intensity value to be assigned to pixels with intensities falling inside the threshold range.

The methods SetLowerThreshold() and SetUpperThreshold() define the range of the input image intensities that will be transformed into the InsideValue. Note that the lower and upper thresholds are values of the type of the input image pixels, while the inside and outside values are of the type of the output image pixels.

The execution of the filter is triggered by invoking the Update() method. If the filter’s output has been passed as input to subsequent filters, the Update() call on any downstream filters in the pipeline will indirectly trigger the update of this filter.
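The demand-driven update can be illustrated with a toy model. The `Stage` struct below is hypothetical and is not ITK code: ITK tracks changes with modification time stamps rather than a boolean flag. The effect sketched here is similar, though: calling `Update()` on the last stage re-executes only the stages whose inputs or parameters have changed.

```cpp
#include <cassert>

// Toy pipeline stage (hypothetical, not ITK's classes).
struct Stage {
  Stage *upstream = nullptr;
  bool modified = true;  // set whenever a parameter or the input changes
  int executions = 0;    // how many times GenerateData() actually ran

  void SetInput(Stage *source) {
    assert(source != this);
    upstream = source;
    Modified();
  }

  void Modified() { modified = true; }

  // Updates upstream stages first; re-executes this stage only if it was
  // modified or if its input was regenerated. Returns true if it ran.
  bool Update() {
    const bool upstreamRan = (upstream != nullptr) && upstream->Update();
    if (modified || upstreamRan) {
      GenerateData();
      modified = false;
      return true;
    }
    return false;
  }

  void GenerateData() { ++executions; }
};
```

With a reader, a filter, and a writer chained this way, a first `writer.Update()` runs all three stages, a second call runs none, and changing only the filter's parameters re-runs the filter and the writer but not the reader.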

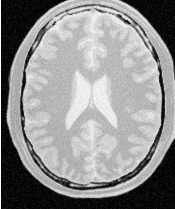

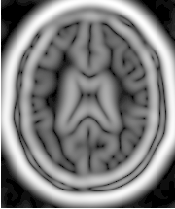

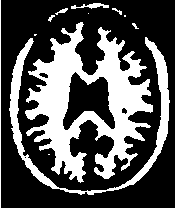

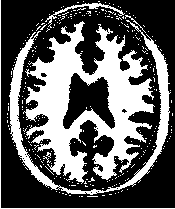

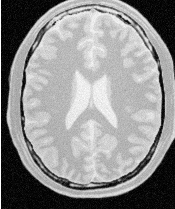

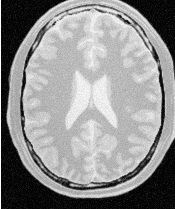

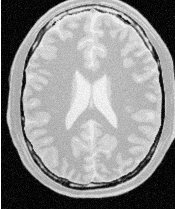

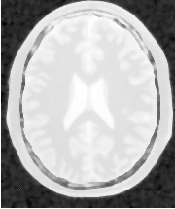

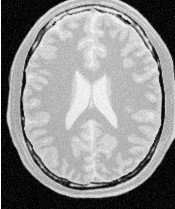

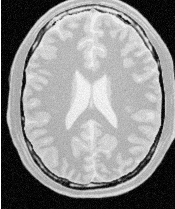

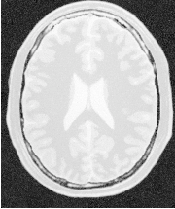

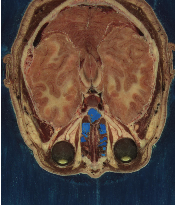

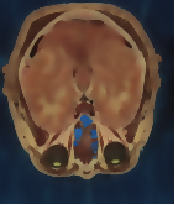

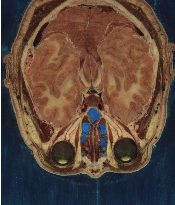

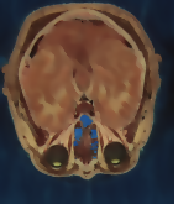

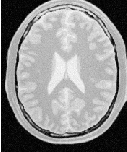

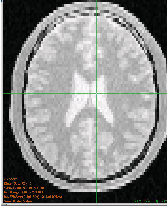

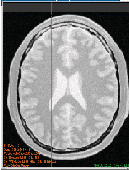

Figure 2.2 illustrates the effect of this filter on an MRI proton density image of the brain. This figure shows the limitations of the filter for performing segmentation by itself. These limitations are particularly noticeable in noisy images and in images lacking spatial uniformity, as is the case with MRI due to field bias.

The following classes provide similar functionality:

The source code for this section can be found in the file ThresholdImageFilter.cxx.

This example illustrates the use of the itk::ThresholdImageFilter. This filter can be used to transform the intensity levels of an image in three different ways.

The methods ThresholdBelow(), ThresholdAbove(), and ThresholdOutside() choose among the three operating modes of the filter.
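The three modes can be summarized in a short sketch over a `std::vector` of pixels. The `ThresholdMode` enum and `Threshold` function are hypothetical names used only for illustration. Only pixels in the rejected region are replaced with the outside value; all others pass through unchanged, which is why a single pixel type suffices.

```cpp
#include <cassert>
#include <vector>

// Hypothetical names for the three modes described in the text.
enum class ThresholdMode { Below, Above, Outside };

// In-place sketch: Below uses only `lower`, Above uses only `upper`,
// Outside uses both. Rejected pixels become outsideValue.
template <typename TPixel>
void Threshold(std::vector<TPixel> &image, ThresholdMode mode, TPixel lower,
               TPixel upper, TPixel outsideValue) {
  assert(mode != ThresholdMode::Outside || lower <= upper);
  for (TPixel &value : image) {
    bool replace = false;
    switch (mode) {
    case ThresholdMode::Below:
      replace = value < lower;
      break;
    case ThresholdMode::Above:
      replace = value > upper;
      break;
    case ThresholdMode::Outside:
      replace = value < lower || value > upper;
      break;
    }
    if (replace) {
      value = outsideValue;
    }
  }
}
```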

The first step required to use this filter is to include its header file.

Then we must decide what pixel type to use for the image. This filter is templated over a single image type because the algorithm only modifies pixel values outside the specified range, passing the rest through unchanged.

The image is defined using the pixel type and the dimension.

The filter can be instantiated using the image type defined above.

An itk::ImageFileReader class is also instantiated in order to read image data from a file.

An itk::ImageFileWriter is instantiated in order to write the output image to a file.

Both the filter and the reader are created by invoking their New() methods and assigning the result to SmartPointers.

The image obtained with the reader is passed as input to the itk::ThresholdImageFilter.

The method SetOutsideValue() defines the intensity value to be assigned to those pixels whose intensities are outside the range defined by the lower and upper thresholds.

The method ThresholdBelow() defines the intensity value below which pixels of the input image will be changed to the OutsideValue.

The filter is executed by invoking the Update() method. If the filter is part of a larger image processing pipeline, calling Update() on a downstream filter will also trigger update of this filter.

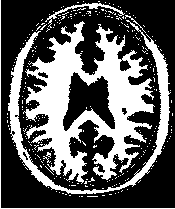

The output of this example is shown in Figure 2.3. The second operating mode of the filter is now enabled by calling the method ThresholdAbove().

Updating the filter with this new setting produces the output shown in Figure 2.4. The third operating mode of the filter is enabled by calling ThresholdOutside().

The output of this third, “band-pass” thresholding mode is shown in Figure 2.5.

The examples in this section also illustrate the limitations of the thresholding filter for performing segmentation by itself. These limitations are particularly noticeable in noisy images and in images lacking spatial uniformity, as is the case with MRI due to field bias.

The following classes provide similar functionality:

The source code for this section can be found in the file CannyEdgeDetectionImageFilter.cxx.

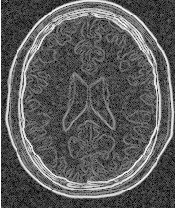

This example introduces the use of the itk::CannyEdgeDetectionImageFilter. Canny edge detection is widely used because it is derived as an optimal detector under the constraints of good sensitivity, localization, and noise robustness. To achieve this, Canny edge detection is implemented internally as a multi-stage algorithm: Gaussian smoothing to remove noise, calculation of gradient magnitudes to localize edge features, non-maximum suppression to remove spurious features, and finally thresholding to yield a binary image. Although the specifics of this internal pipeline are largely hidden from the user of the class, a general understanding of these components helps in adjusting the parameters appropriately.

The first step required for using this filter is to include its header file.

In this example, images are read and written with unsigned char pixel type. However, Canny edge detection requires floating point pixel types in order to avoid numerical errors. For this reason, a separate internal image type with pixel type double is defined for edge detection.

The CharImageType image is cast to and from RealImageType using itk::CastImageFilter and RescaleIntensityImageFilter, respectively; both the input and output of CannyEdgeDetectionImageFilter are RealImageType.

In this example, three parameters of the Canny edge detection filter may be set via the SetVariance(), SetUpperThreshold(), and SetLowerThreshold() methods. Based on the previous discussion of the steps in the internal pipeline, we understand that variance adjusts the amount of Gaussian smoothing and upperThreshold and lowerThreshold control which edges are selected in the final step.
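In Canny's method, the two thresholds are typically applied as hysteresis. Samples at or above the upper threshold are accepted as edges, and samples between the two thresholds are kept only if they connect to an accepted edge through other samples that are at or above the lower threshold. The 1D sketch below assumes this standard scheme and uses a hypothetical function name. It is not the ITK implementation, which works on N-dimensional images after non-maximum suppression.

```cpp
#include <cassert>
#include <cstddef>
#include <initializer_list>
#include <vector>

// 1D hysteresis thresholding of a row of gradient magnitudes.
std::vector<bool> Hysteresis1D(const std::vector<double> &magnitude,
                               double lower, double upper) {
  assert(lower <= upper);
  const std::size_t n = magnitude.size();
  std::vector<bool> edge(n, false);
  std::vector<std::size_t> stack;
  // Seed with strong edges.
  for (std::size_t i = 0; i < n; ++i) {
    if (magnitude[i] >= upper) {
      edge[i] = true;
      stack.push_back(i);
    }
  }
  // Grow from strong edges into connected weak samples.
  while (!stack.empty()) {
    const std::size_t i = stack.back();
    stack.pop_back();
    // For i == 0, i - 1 wraps around and fails the j < n test.
    for (std::size_t j : {i - 1, i + 1}) {
      if (j < n && !edge[j] && magnitude[j] >= lower) {
        edge[j] = true;
        stack.push_back(j);
      }
    }
  }
  return edge;
}
```

With thresholds 5 and 10, the row {0, 3, 6, 12, 6, 0, 6, 3} keeps the strong sample 12 and its two neighbors of value 6. The isolated 6 near the end is discarded because it is not connected to any strong edge.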

Finally, Update() is called on the writer to trigger execution of the pipeline. As usual, the call is wrapped in a try/catch block.

The filters discussed in this section perform pixel-wise intensity mappings. Casting is used to convert one pixel type to another, while intensity mappings also take into account the different intensity ranges of the pixel types.

The source code for this section can be found in the file CastingImageFilters.cxx.

Due to the use of Generic Programming in the toolkit, most types are resolved at compile-time. Few decisions regarding type conversion are left to run-time. It is up to the user to anticipate the pixel type-conversions required in the data pipeline. In medical imaging applications it is usually not desirable to use a general pixel type since this may result in the loss of valuable information.

This section introduces the mechanisms for explicit casting of images that flow through the pipeline. The following four filters are treated in this section: itk::CastImageFilter, itk::RescaleIntensityImageFilter, itk::ShiftScaleImageFilter and itk::NormalizeImageFilter. These filters are not directly related to each other except that they all modify pixel values. They are presented together here for the purpose of comparing their individual features.

The CastImageFilter is a very simple filter that acts pixel-wise on an input image, casting every pixel to the type of the output image. Note that this filter does not perform any arithmetic operation on the intensities. Applying CastImageFilter is equivalent to performing a C-Style cast on every pixel.

outputPixel = static_cast<OutputPixelType>( inputPixel )
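The absence of any arithmetic can be made concrete with a per-pixel cast over a `std::vector`. The `CastPixels` name is hypothetical. Converting a floating-point value to an integer type truncates toward zero, and the value must be representable in the target type, because out-of-range floating-point-to-integer conversions are undefined behavior in C++.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: static_cast every pixel, with no rescaling.
template <typename TOut, typename TIn>
std::vector<TOut> CastPixels(const std::vector<TIn> &input) {
  std::vector<TOut> output;
  output.reserve(input.size());
  for (const TIn value : input) {
    output.push_back(static_cast<TOut>(value));
  }
  return output;
}
```

For instance, casting the float values {0.0, 3.7, 254.9} to unsigned char gives {0, 3, 254}. The fractional parts are simply dropped.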

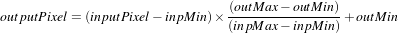

The RescaleIntensityImageFilter linearly scales the pixel values in such a way that the minimum and maximum values of the input are mapped to minimum and maximum values provided by the user. This is a typical process for forcing the dynamic range of the image to fit within a particular scale and is common for image display. The linear transformation applied by this filter can be expressed as

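$$\text{outputPixel} = (\text{inputPixel} - \text{inpMin}) \times \frac{\text{outMax} - \text{outMin}}{\text{inpMax} - \text{inpMin}} + \text{outMin}$$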
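A sketch of the linear rescaling rule on a `std::vector<double>` follows. `RescaleIntensity` is a hypothetical name. Mapping a constant input image to the output minimum is a choice made for this sketch only and is not a statement about how ITK treats that case.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Maps the input minimum to outMin and the input maximum to outMax.
std::vector<double> RescaleIntensity(const std::vector<double> &input,
                                     double outMin, double outMax) {
  assert(!input.empty());
  const auto range = std::minmax_element(input.begin(), input.end());
  const double inMin = *range.first;
  const double inMax = *range.second;
  std::vector<double> output(input.size(), outMin);
  if (inMax == inMin) {
    return output;  // constant image: sketch maps everything to outMin
  }
  const double scale = (outMax - outMin) / (inMax - inMin);
  for (std::size_t i = 0; i < input.size(); ++i) {
    output[i] = (input[i] - inMin) * scale + outMin;
  }
  return output;
}
```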

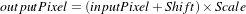

The ShiftScaleImageFilter also applies a linear transformation to the intensities of the input image, but the transformation is specified by the user in the form of a multiplying factor and a value to be added. This can be expressed as

$$\text{outputPixel} = (\text{inputPixel} + \text{Shift}) \times \text{Scale}$$
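The sketch below adds the shift before applying the scale. This matches the class documentation for itk::ShiftScaleImageFilter, which shifts each pixel and then scales it. The stand-alone `ShiftScale` helper itself is hypothetical.

```cpp
#include <cassert>
#include <vector>

// out = (in + shift) * scale, applied pixel by pixel.
std::vector<double> ShiftScale(const std::vector<double> &input, double shift,
                               double scale) {
  std::vector<double> output;
  output.reserve(input.size());
  for (const double value : input) {
    output.push_back((value + shift) * scale);  // shift first, then scale
  }
  return output;
}
```

Because the shift is applied first, a shift of -10 with a scale of 2 maps 0 to -20, not to -10.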

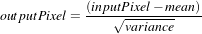

The parameters of the linear transformation applied by the NormalizeImageFilter are computed internally such that the statistical distribution of gray levels in the output image has zero mean and a variance of one. This intensity correction is particularly useful in registration applications as a preprocessing step to the evaluation of mutual information metrics. The linear transformation of NormalizeImageFilter is given as

$$\text{outputPixel} = \frac{\text{inputPixel} - \text{mean}}{\sqrt{\text{variance}}}$$
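A minimal normalization sketch subtracts the mean and divides by the standard deviation. The `Normalize` name is hypothetical. Using the population variance (dividing by N) is an assumption of this sketch and is not a statement about ITK's internals.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Zero-mean, unit-variance normalization of a set of pixel values.
std::vector<double> Normalize(const std::vector<double> &input) {
  assert(!input.empty());
  const double n = static_cast<double>(input.size());
  double mean = 0.0;
  for (const double v : input) mean += v;
  mean /= n;
  double variance = 0.0;  // population variance (divide by N)
  for (const double v : input) variance += (v - mean) * (v - mean);
  variance /= n;
  assert(variance > 0.0);
  const double sigma = std::sqrt(variance);
  std::vector<double> output;
  output.reserve(input.size());
  for (const double v : input) output.push_back((v - mean) / sigma);
  return output;
}
```

For the values {2, 4, 4, 4, 5, 5, 7, 9} the mean is 5 and the standard deviation is 2, so the output is {-1.5, -0.5, -0.5, -0.5, 0, 0, 1, 2}.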

As usual, the first step required to use these filters is to include their header files.

Let’s define pixel types for the input and output images.

Then, the input and output image types are defined.

The filters are instantiated using the defined image types.

    using CastFilterType = itk::CastImageFilter<
                               InputImageType, OutputImageType >;

    using RescaleFilterType = itk::RescaleIntensityImageFilter<
                               InputImageType, OutputImageType >;

    using ShiftScaleFilterType = itk::ShiftScaleImageFilter<
                               InputImageType, OutputImageType >;

    using NormalizeFilterType = itk::NormalizeImageFilter<
                               InputImageType, OutputImageType >;

The filter objects are created by invoking their New() methods and assigning the results to itk::SmartPointers.

The output of a reader filter (whose creation is not shown here) is now connected as input to the various casting filters.

Next we proceed to setup the parameters required by each filter. The CastImageFilter and the NormalizeImageFilter do not require any parameters. The RescaleIntensityImageFilter, on the other hand, requires the user to provide the desired minimum and maximum pixel values of the output image. This is done by using the SetOutputMinimum() and SetOutputMaximum() methods as illustrated below.

The ShiftScaleImageFilter requires a multiplication factor (scale) and an additive value (shift). The shift is added to each input pixel before the result is multiplied by the scale. The methods SetScale() and SetShift() are used, respectively, to set these values.

Finally, the filters are executed by invoking the Update() method.

The following filter can be seen as a variant of the casting filters. Its main difference is the use of a smooth and continuous transition function of non-linear form.

The source code for this section can be found in the file SigmoidImageFilter.cxx.

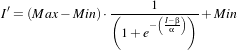

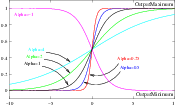

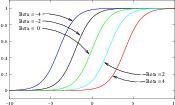

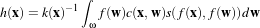

The itk::SigmoidImageFilter is commonly used as an intensity transform. It maps a specific range of intensity values into a new intensity range by making a very smooth and continuous transition at the borders of the range. Sigmoids are widely used as a mechanism for focusing attention on a particular set of values and progressively attenuating the values outside that range. To extend the flexibility of the Sigmoid filter, its implementation in ITK includes four parameters that can be tuned to select its input and output intensity ranges. The following equation represents the Sigmoid intensity transformation, applied pixel-wise.

$$I' = (\text{Max} - \text{Min}) \cdot \frac{1}{1 + e^{-\frac{I - \beta}{\alpha}}} + \text{Min} \tag{2.1}$$

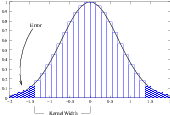

In the equation above, I is the intensity of the input pixel, I′ the intensity of the output pixel, Min and Max are the minimum and maximum values of the output image, α defines the width of the input intensity range, and β defines the intensity around which the range is centered. Figure 2.6 illustrates the significance of each parameter.
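The sigmoid transformation I′ = (Max − Min) / (1 + exp(−(I − β)/α)) + Min can be evaluated per pixel as in the sketch below. The function name and parameter order are hypothetical. The sketch assumes α ≠ 0.

```cpp
#include <cassert>
#include <cmath>

// Pixel-wise sigmoid intensity transform.
double Sigmoid(double I, double outMin, double outMax, double alpha,
               double beta) {
  assert(alpha != 0.0);
  return (outMax - outMin) / (1.0 + std::exp(-(I - beta) / alpha)) + outMin;
}
```

With Min = 0, Max = 100, α = 5, and β = 50, an input of 50 maps exactly to 50. An input of 65 (β + 3α) maps above 95, and 35 (β − 3α) maps below 5, which illustrates the soft window [β − 3α, β + 3α]. Flipping the sign of α mirrors the curve and negates the image.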

This filter will work on images of any dimension and will take advantage of multiple processors when available.

The header file corresponding to this filter should be included first.

Then pixel and image types for the filter input and output must be defined.

Using the image types, we instantiate the filter type and create the filter object.

The minimum and maximum values desired in the output are defined using the methods SetOutputMinimum() and SetOutputMaximum().

The coefficients α and β are set with the methods SetAlpha() and SetBeta(). Note that α is proportional to the width of the input intensity window. As a rule of thumb, we may say that the window is the interval [β−3α, β+3α]. The boundaries of the intensity window are not sharp: the sigmoid curve approaches its extrema smoothly, as shown in Figure 2.6. You may want to think about this in the same terms as when taking a range in a population of measures by defining an interval of [−3σ, +3σ] around the population mean.

The input to the SigmoidImageFilter can be taken from any other filter, such as an image file reader, for example. The output can be passed down the pipeline to other filters, like an image file writer. An Update() call on any downstream filter will trigger the execution of the Sigmoid filter.

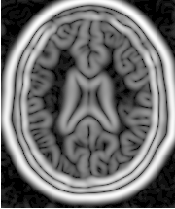

Figure 2.7 illustrates the effect of this filter on a slice of an MRI brain image using the following parameters.

As can be seen from the figure, the intensities of the white matter were expanded in their dynamic range, while intensity values lower than β-3α and higher than β+3α became progressively mapped to the minimum and maximum output values. This is the way in which a Sigmoid can be used for performing smooth intensity windowing.

Note that both α and β can be positive or negative. A negative α has the effect of negating the image. This is illustrated on the left side of Figure 2.6. An application of the Sigmoid filter as preprocessing for segmentation is presented in Section 4.3.1.

Sigmoid curves are common in the natural world. They represent the plot of sensitivity to a stimulus. They also closely resemble the integral of the Gaussian (the error function) and, therefore, appear naturally as the response to signals whose distribution is Gaussian.

Computation of gradients is a fairly common operation in image processing. The term “gradient” may refer in some contexts to the gradient vectors and in others to the magnitude of the gradient vectors. ITK filters attempt to reduce this ambiguity by including the magnitude term when appropriate. ITK provides filters for computing both the image of gradient vectors and the image of magnitudes.

The source code for this section can be found in the file GradientMagnitudeImageFilter.cxx.

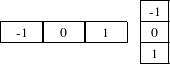

The magnitude of the image gradient is extensively used in image analysis, mainly to help in the determination of object contours and the separation of homogeneous regions. The itk::GradientMagnitudeImageFilter computes the magnitude of the image gradient at each pixel location using a simple finite differences approach. For example, in the case of 2D the computation is equivalent to convolving the image with central-difference masks of the form

$$\begin{bmatrix} -\tfrac{1}{2} & 0 & \tfrac{1}{2} \end{bmatrix} \qquad \text{and} \qquad \begin{bmatrix} -\tfrac{1}{2} \\ 0 \\ \tfrac{1}{2} \end{bmatrix},$$

then summing the squares of the two results and taking the square root of the sum.
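The computation can be sketched on a row-major 2D image. The `Image2D` type and `GradientMagnitude` function are hypothetical. The sketch assumes unit pixel spacing and leaves border pixels at zero, whereas a full implementation would apply a boundary condition there and account for the image spacing.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal row-major 2D image (hypothetical type for this sketch).
struct Image2D {
  std::size_t width, height;
  std::vector<double> pixels;
  double at(std::size_t x, std::size_t y) const {
    return pixels[y * width + x];
  }
};

// Central-difference gradient magnitude on interior pixels.
std::vector<double> GradientMagnitude(const Image2D &img) {
  assert(img.pixels.size() == img.width * img.height);
  std::vector<double> out(img.pixels.size(), 0.0);
  for (std::size_t y = 1; y + 1 < img.height; ++y) {
    for (std::size_t x = 1; x + 1 < img.width; ++x) {
      const double gx = 0.5 * (img.at(x + 1, y) - img.at(x - 1, y));
      const double gy = 0.5 * (img.at(x, y + 1) - img.at(x, y - 1));
      out[y * img.width + x] = std::sqrt(gx * gx + gy * gy);
    }
  }
  return out;
}
```

On the ramp f(x, y) = 3x + 4y, every interior pixel has a gradient magnitude of 5.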

This filter will work on images of any dimension thanks to the internal use of itk::NeighborhoodIterator and itk::NeighborhoodOperator.

The first step required to use this filter is to include its header file.

Types should be chosen for the pixels of the input and output images.

The input and output image types can be defined using the pixel types.

The type of the gradient magnitude filter is defined by the input image and the output image types.

A filter object is created by invoking the New() method and assigning the result to an itk::SmartPointer.

The input image can be obtained from the output of another filter. Here, the source is an image reader.

Finally, the filter is executed by invoking the Update() method.

If the output of this filter has been connected to other filters in a pipeline, updating any of the downstream filters will also trigger an update of this filter. For example, the gradient magnitude filter may be connected to an image writer.

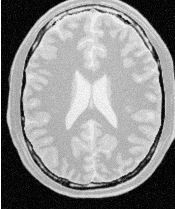

Figure 2.8 illustrates the effect of the gradient magnitude filter on an MRI proton density image of the brain. The figure shows the sensitivity of this filter to noisy data.

Attention should be paid to the image type chosen to represent the output image since the dynamic range of the gradient magnitude image is usually smaller than the dynamic range of the input image. As always, there are exceptions to this rule, for example, synthetic images that contain high contrast objects.

This filter does not apply any smoothing to the image before computing the gradients. The results can therefore be very sensitive to noise and may not be the best choice for scale-space analysis.

The source code for this section can be found in the file GradientMagnitudeRecursiveGaussianImageFilter.cxx.

Differentiation is an ill-defined operation over digital data. In practice it is convenient to define a scale in which the differentiation should be performed. This is usually done by preprocessing the data with a smoothing filter. It has been shown that a Gaussian kernel is the most convenient choice for performing such smoothing. By choosing a particular value for the standard deviation (σ) of the Gaussian, an associated scale is selected that ignores high frequency content, commonly considered image noise.

The itk::GradientMagnitudeRecursiveGaussianImageFilter computes the magnitude of the image gradient at each pixel location. The computational process is equivalent to first smoothing the image by convolving it with a Gaussian kernel and then applying a differential operator. The user selects the value of σ.

Internally this is done by applying an IIR (infinite impulse response) filter that approximates a convolution with the derivative of the Gaussian kernel. Traditional convolution will produce a more accurate result, but the IIR approach is much faster, especially for large values of σ [15, 16].
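The operation that the IIR filter approximates can be sketched in 1D by swapping in a direct (FIR) convolution with a sampled first-derivative-of-Gaussian kernel. This technique is slower but conceptually simpler. The kernel radius of 4σ, the normalization that makes the kernel return the exact slope of a linear ramp, the clamp-to-edge border handling, and the function names are all assumptions of this sketch, not ITK's recursive implementation.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Sampled derivative-of-Gaussian kernel; index k + radius holds tap k.
std::vector<double> GaussianDerivativeKernel(double sigma, int radius) {
  assert(sigma > 0.0 && radius > 0);
  std::vector<double> d(2 * radius + 1);
  double secondMoment = 0.0;
  for (int k = -radius; k <= radius; ++k) {
    const double w = std::exp(-(k * k) / (2.0 * sigma * sigma));
    d[k + radius] = -k * w;
    secondMoment += k * k * w;
  }
  // Normalize so that convolving a unit-slope ramp yields exactly 1.
  for (double &v : d) v /= secondMoment;
  return d;
}

// out[i] = sum_k signal[i - k] * d[k], clamping indices at the borders.
std::vector<double> SmoothedDerivative(const std::vector<double> &signal,
                                       double sigma) {
  const int radius = static_cast<int>(std::ceil(4.0 * sigma));
  const std::vector<double> d = GaussianDerivativeKernel(sigma, radius);
  const int n = static_cast<int>(signal.size());
  std::vector<double> out(signal.size(), 0.0);
  for (int i = 0; i < n; ++i) {
    double acc = 0.0;
    for (int k = -radius; k <= radius; ++k) {
      int j = i - k;
      if (j < 0) j = 0;
      if (j >= n) j = n - 1;
      acc += signal[j] * d[k + radius];
    }
    out[i] = acc;
  }
  return out;
}
```

Away from the borders, this sketch returns 1 for the ramp f(i) = i and 2i for the parabola f(i) = i², so smoothing does not bias the slope of polynomials up to second order.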

GradientMagnitudeRecursiveGaussianImageFilter will work on images of any dimension by taking advantage of the natural separability of the Gaussian kernel and its derivatives.

The first step required to use this filter is to include its header file.

Types should be selected for the pixels of the input and output images.

With them, the input and output image types can be instantiated.

The filter type is now instantiated using both the input image and the output image types.

A filter object is created by invoking the New() method and assigning the result to an itk::SmartPointer.

The input image can be obtained from the output of another filter. Here, an image reader is used as source.

The standard deviation of the Gaussian smoothing kernel is now set.

Finally the filter is executed by invoking the Update() method.

If connected to other filters in a pipeline, this filter will automatically update when any downstream filters are updated. For example, we may connect this gradient magnitude filter to an image file writer and then update the writer.

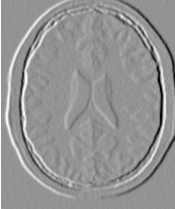

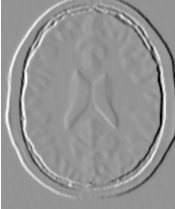

Figure 2.9 illustrates the effect of this filter on an MRI proton density image of the brain using σ values of 3 (left) and 5 (right). The figure shows how the sensitivity to noise can be regulated by selecting an appropriate σ. This type of scale-tunable filter is suitable for performing scale-space analysis.

Attention should be paid to the image type chosen to represent the output image since the dynamic range of the gradient magnitude image is usually smaller than the dynamic range of the input image.

The source code for this section can be found in the file DerivativeImageFilter.cxx.

The itk::DerivativeImageFilter is used for computing the partial derivative of an image, that is, the derivative of an image along a particular axial direction.

The header file corresponding to this filter should be included first.

Next, the pixel types for the input and output images must be defined and, with them, the image types can be instantiated. Note that it is important to select a signed type for the image, since the values of the derivatives will be positive as well as negative.

Using the image types, it is now possible to define the filter type and create the filter object.

The order of the derivative is selected with the SetOrder() method. The direction along which the derivative will be computed is selected with the SetDirection() method.
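The roles of SetOrder() and SetDirection() can be sketched with plain finite differences on a row-major 2D image. The `Derivative` function and its stencils (the central difference for order 1 and the three-point stencil for order 2) are assumptions of this sketch. ITK builds its stencils with itk::DerivativeOperator, so its coefficients and border handling may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// direction 0 = x (columns), 1 = y (rows); order 1 or 2.
// Samples on the first/last position along that axis are left at zero.
std::vector<double> Derivative(const std::vector<double> &img,
                               std::size_t width, std::size_t height,
                               unsigned direction, unsigned order) {
  assert(img.size() == width * height);
  assert(direction < 2 && (order == 1 || order == 2));
  const std::size_t stride = (direction == 0) ? 1 : width;
  const std::size_t extent = (direction == 0) ? width : height;
  std::vector<double> out(img.size(), 0.0);
  for (std::size_t y = 0; y < height; ++y) {
    for (std::size_t x = 0; x < width; ++x) {
      const std::size_t pos = (direction == 0) ? x : y;
      if (pos == 0 || pos + 1 >= extent) continue;
      const std::size_t i = y * width + x;
      const double prev = img[i - stride];
      const double next = img[i + stride];
      out[i] = (order == 1) ? 0.5 * (next - prev)     // central difference
                            : next - 2.0 * img[i] + prev;  // 3-point stencil
    }
  }
  return out;
}
```

For f(x, y) = x², the first derivative along x at x = 2 is 4, the second derivative along x is 2, and the second derivative along y is 0. Values can be negative, which is why a signed output pixel type is required.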

The input to the filter can be taken from any other filter, for example a reader. The output can be passed down the pipeline to other filters, for example, a writer. An Update() call on any downstream filter will trigger the execution of the derivative filter.

Figure 2.10 illustrates the effect of the DerivativeImageFilter on a slice of an MRI brain image. The derivative is taken along the x direction. The sensitivity to noise in the image is evident from this result.

The source code for this section can be found in the file SecondDerivativeRecursiveGaussianImageFilter.cxx.

This example illustrates how to compute second derivatives of a 3D image using the itk::RecursiveGaussianImageFilter.

It’s good to be able to compute the raw derivative without any smoothing, but this can be problematic in a medical imaging scenario, when images will often have a certain amount of noise. It’s almost always more desirable to include a smoothing step first, where an image is convolved with a Gaussian kernel in whichever directions the user desires a derivative. The nature of the Gaussian kernel makes it easy to combine these two steps into one, using an infinite impulse response (IIR) filter. In this example, all the second derivatives are computed independently in the same way, as if they were intended to be used for building the Hessian matrix of the image (a square matrix of second-order derivatives of an image, which is useful in many image processing techniques).

First, we will include the relevant header files: the itkRecursiveGaussianImageFilter, the image reader, writer, and duplicator.

Next, we declare our pixel type and output pixel type to be floats, and our image dimension to be 3.

Using these definitions, define the image types, reader and writer types, and duplicator types, which are templated over the pixel types and dimension. Then, instantiate the reader, writer, and duplicator with the New() method.

    using ImageType = itk::Image< PixelType, Dimension >;
    using OutputImageType = itk::Image< OutputPixelType, Dimension >;

    using ReaderType = itk::ImageFileReader< ImageType >;
    using WriterType = itk::ImageFileWriter< OutputImageType >;

    using DuplicatorType = itk::ImageDuplicator< OutputImageType >;

    using FilterType = itk::RecursiveGaussianImageFilter<
                                ImageType,
                                ImageType >;

    ReaderType::Pointer reader = ReaderType::New();
    WriterType::Pointer writer = WriterType::New();

    DuplicatorType::Pointer duplicator = DuplicatorType::New();

Here we create three new filters. For each derivative we take, we want to smooth in that direction first. So after the filters are created, each is assigned a direction and set to (in this example) the same sigma. Note that here σ represents the standard deviation, whereas the itk::DiscreteGaussianImageFilter exposes the SetVariance() method.

    FilterType::Pointer ga = FilterType::New();
    FilterType::Pointer gb = FilterType::New();
    FilterType::Pointer gc = FilterType::New();

    ga->SetDirection( 0 );
    gb->SetDirection( 1 );
    gc->SetDirection( 2 );

    if( argc > 3 )
      {
      const float sigma = std::stod( argv[3] );
      ga->SetSigma( sigma );
      gb->SetSigma( sigma );
      gc->SetSigma( sigma );
      }

First we will compute the second derivative along the z-direction. To do this, we smooth in the x- and y-directions, and finally smooth and compute the derivative in the z-direction. Taking the zero-order derivative is equivalent to simply smoothing in that direction. This result is commonly notated Izz.

    ga->SetZeroOrder();
    gb->SetZeroOrder();
    gc->SetSecondOrder();

    ImageType::Pointer inputImage = reader->GetOutput();

    ga->SetInput( inputImage );
    gb->SetInput( ga->GetOutput() );
    gc->SetInput( gb->GetOutput() );

    duplicator->SetInputImage( gc->GetOutput() );

    gc->Update();
    duplicator->Update();

    ImageType::Pointer Izz = duplicator->GetOutput();

Recall that gc is the filter responsible for taking the second derivative. We can now take advantage of the pipeline architecture and, without much hassle, switch the direction of gc and gb, so that gc now takes the derivatives in the y-direction. Now we only need to call Update() on gc to re-run the entire pipeline from ga to gc, obtaining the second-order derivative in the y-direction, which is commonly notated Iyy.

Now we switch the directions of gc with that of ga in order to take the derivatives in the x-direction. This will give us Ixx.

Now we can reset the directions to their original values, and compute first derivatives in different directions. Since we set both gb and gc to compute first derivatives, and ga to zero-order (which is only smoothing) we will obtain Iyz.
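The chaining of ga, gb, and gc can be mimicked without ITK. This sketch makes several stand-in assumptions. It replaces the recursive Gaussian with 3-tap kernels: binomial [1 2 1]/4 smoothing for order 0, a central difference for order 1, and [1 −2 1] for order 2. The `Volume`, `Pass`, and `Chain` names are hypothetical. The sketch shows that each Hessian entry, including mixed terms such as Ixy, follows from one 1D pass per axis with the appropriate order.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal 3D volume stored x-fastest (hypothetical type for this sketch).
struct Volume {
  std::array<std::size_t, 3> size;
  std::vector<double> data;
};

// One 1D pass along `axis` with a 3-tap kernel selected by `order`.
// Border samples along `axis` are copied for order 0 and zeroed otherwise.
Volume Pass(const Volume &in, std::size_t axis, int order) {
  assert(axis < 3 && order >= 0 && order <= 2);
  const double kernels[3][3] = {
      {0.25, 0.5, 0.25}, {-0.5, 0.0, 0.5}, {1.0, -2.0, 1.0}};
  const double *k = kernels[order];
  std::size_t stride = 1;
  for (std::size_t a = 0; a < axis; ++a) stride *= in.size[a];
  Volume out = in;
  for (std::size_t i = 0; i < in.data.size(); ++i) {
    const std::size_t pos = (i / stride) % in.size[axis];
    if (pos == 0 || pos + 1 == in.size[axis]) {
      if (order > 0) out.data[i] = 0.0;
      continue;
    }
    out.data[i] = k[0] * in.data[i - stride] + k[1] * in.data[i] +
                  k[2] * in.data[i + stride];
  }
  return out;
}

// Chains one pass per axis, like ga -> gb -> gc in the text.
Volume Chain(const Volume &in, const std::array<int, 3> &orderPerAxis) {
  Volume v = in;
  for (std::size_t axis = 0; axis < 3; ++axis) {
    v = Pass(v, axis, orderPerAxis[axis]);
  }
  return v;
}
```

For f(x, y, z) = xy + z², the orders {1, 1, 0} give Ixy = 1, {0, 0, 2} give Izz = 2, and {2, 0, 0} give Ixx = 0 at interior voxels.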

Here is how you may easily obtain Ixz.

For the sake of completeness, here is how you may write Ixz to a file and then compute and write Ixy.

    outputFileName = outputPrefix + "-Ixz.mhd";
    writer->SetFileName( outputFileName.c_str() );
    writer->Update();

    ga->SetDirection( 2 );
    gb->SetDirection( 0 );
    gc->SetDirection( 1 );

    ga->SetZeroOrder();
    gb->SetFirstOrder();
    gc->SetFirstOrder();

    gc->Update();
    duplicator->Update();

    ImageType::Pointer Ixy = duplicator->GetOutput();

    writer->SetInput( Ixy );
    outputFileName = outputPrefix + "-Ixy.mhd";
    writer->SetFileName( outputFileName.c_str() );
    writer->Update();

The source code for this section can be found in the file LaplacianRecursiveGaussianImageFilter1.cxx.

This example illustrates how to use the itk::RecursiveGaussianImageFilter for computing the Laplacian of a 2D image.

The first step required to use this filter is to include its header file.

Types should be selected for the desired input and output pixels.

The input and output image types are instantiated using the pixel types.

The filter type is now instantiated using both the input image and the output image types.

This filter applies the approximation of the convolution along a single dimension. It is therefore necessary to concatenate several of these filters to produce smoothing in all directions. Since we are processing a 2D image, each component requires a pair of filters, one per direction. The filters are created by invoking the New() method and assigning the result to an itk::SmartPointer.

We need two filters for computing the X component of the Laplacian and two other filters for computing the Y component.

Since each one of the newly created filters has the potential to perform filtering along any dimension, we have to restrict each one to a particular direction. This is done with the SetDirection() method.

The itk::RecursiveGaussianImageFilter can approximate the convolution with the Gaussian or with its first and second derivatives. We select one of these options by using the SetOrder() method. Note that the argument is an enum whose values can be ZeroOrder, FirstOrder and SecondOrder. For example, to compute the x partial derivative we should select FirstOrder for x and ZeroOrder for y. Here we want the second derivatives that make up the Laplacian, so for the X component we select SecondOrder along x and ZeroOrder along y, and for the Y component we select ZeroOrder along x and SecondOrder along y.

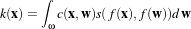

There are two typical ways of normalizing Gaussians depending on their application. For scale-space analysis it is desirable to use a normalization that will preserve the maximum value of the input. This normalization is represented by the following equation.

| (2.2) |

In applications that use the Gaussian as a solution of the diffusion equation it is desirable to use a normalization that preserves the integral of the signal. This last approach can be seen as a conservation of mass principle. This is represented by the following equation.

| (2.3) |

The itk::RecursiveGaussianImageFilter has a boolean flag that allows users to select between these two normalization options. Selection is done with the method SetNormalizeAcrossScale(). Enable this flag when analyzing an image across scale-space. In the current example, this setting has no impact because we are actually renormalizing the output to the dynamic range of the reader, so we simply disable the flag.

The input image can be obtained from the output of another filter. Here, an image reader is used as the source. For each component, the image is passed to the x filter and then to the y filter. The reason for keeping these two filters separate is that it is usual in scale-space applications to compute not only the smoothing but also combinations of derivatives at different orders and smoothing. Some factorization is possible when separate filters are used to generate the intermediate results. Here, the separation lets each component combine a second-order pass along one axis with a zero-order pass along the other.

It is now time to select the σ of the Gaussian used to smooth the data. Note that σ must be passed to all four filters and that σ is considered to be in millimeters. That is, at the moment of applying the smoothing process, the filter will take into account the spacing values defined in the image.

Finally the two components of the Laplacian should be added together. The itk::AddImageFilter is used for this purpose.

The filters are triggered by invoking Update() on the Add filter at the end of the pipeline.

The resulting image could be saved to a file using the itk::ImageFileWriter class.

The source code for this section can be found in the file LaplacianRecursiveGaussianImageFilter2.cxx.

The previous example showed how to use the itk::RecursiveGaussianImageFilter for computing the equivalent of a Laplacian of an image after smoothing with a Gaussian. The elements used in this previous example have been packaged together in the itk::LaplacianRecursiveGaussianImageFilter in order to simplify its usage. This current example shows how to use this convenience filter for achieving the same results as the previous example.

The first step required to use this filter is to include its header file.

Types should be selected for the desired input and output pixels.

The input and output image types are instantiated using the pixel types.

The filter type is now instantiated using both the input image and the output image types.

This filter packages all the components illustrated in the previous example. The filter is created by invoking the New() method and assigning the result to an itk::SmartPointer.

The option for normalizing across scale space can also be selected in this filter.

The input image can be obtained from the output of another filter. Here, an image reader is used as the source.

It is now time to select the σ of the Gaussian used to smooth the data. Note that σ is expressed in millimeters. That is, when the smoothing is applied, the filter takes into account the spacing values defined in the image.

Finally the pipeline is executed by invoking the Update() method.

The concept of locality is frequently encountered in image processing in the form of filters that compute every output pixel using information from a small region in the neighborhood of the input pixel. The classical form of these filters is the 3×3 filter in 2D images. Convolution masks based on these neighborhoods can perform diverse tasks ranging from noise reduction to differential operations to mathematical morphology.

The Insight Toolkit implements an elegant approach to neighborhood-based image filtering. The input image is processed using a special iterator called the itk::NeighborhoodIterator. This iterator is capable of moving over all the pixels in an image and, for each position, it can address the pixels in a local neighborhood. Operators are defined that apply an algorithmic operation in the neighborhood of the input pixel to produce a value for the output pixel. The following section describes some of the more commonly used filters that take advantage of this construction. (See the Iterators chapter in Book 1 for more information.)

The source code for this section can be found in the file

MeanImageFilter.cxx.

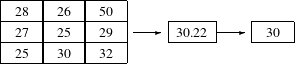

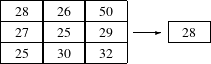

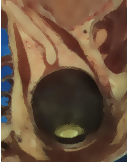

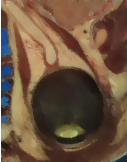

The itk::MeanImageFilter is commonly used for noise reduction. The filter computes the value of each output pixel by finding the statistical mean of the neighborhood of the corresponding input pixel. The following figure illustrates the local effect of the MeanImageFilter in a 2D case. The statistical mean of the neighborhood on the left is passed as the output value associated with the pixel at the center of the neighborhood.

Note that this algorithm is sensitive to the presence of outliers in the neighborhood. This filter will work on images of any dimension thanks to the internal use of itk::SmartNeighborhoodIterator and itk::NeighborhoodOperator. The size of the neighborhood over which the mean is computed can be set by the user.
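The averaging rule itself fits in a few lines. The sketch below is plain C++ with a hypothetical meanFilter() function rather than the ITK API. It averages over a (2rx + 1) × (2ry + 1) box and replicates edge pixels at the image border, a border policy chosen for this sketch.

```cpp
#include <cstddef>
#include <vector>

// Clamp an index into [0, size - 1] so edge pixels are replicated
// (the border policy chosen for this sketch).
static int clampIndex(int i, int size) { return i < 0 ? 0 : (i >= size ? size - 1 : i); }

// Mean filter on a row-major 2-D image. The radius is given per dimension,
// so radius {rx, ry} averages over a (2*rx + 1) x (2*ry + 1) box.
std::vector<double> meanFilter(const std::vector<double>& in, int width, int height,
                               int rx, int ry) {
  std::vector<double> out(in.size());
  const double boxSize = static_cast<double>((2 * rx + 1) * (2 * ry + 1));
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      double sum = 0.0;
      for (int dy = -ry; dy <= ry; ++dy) {
        for (int dx = -rx; dx <= rx; ++dx) {
          const int xx = clampIndex(x + dx, width);
          const int yy = clampIndex(y + dy, height);
          sum += in[static_cast<std::size_t>(yy * width + xx)];
        }
      }
      out[static_cast<std::size_t>(y * width + x)] = sum / boxSize;
    }
  }
  return out;
}
```

A single bright outlier raises the output of every pixel whose box contains it, which is the outlier sensitivity noted above.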

The header file corresponding to this filter should be included first.

Then the pixel types for input and output image must be defined and, with them, the image types can be instantiated.

Using the image types it is now possible to instantiate the filter type and create the filter object.

The size of the neighborhood is defined along every dimension by passing a SizeType object with the corresponding values. The value on each dimension is used as the semi-size (radius) of a rectangular box. For example, in 2D a size of {1, 2} will result in a 3×5 neighborhood.

The input to the filter can be taken from any other filter, for example a reader. The output can be passed down the pipeline to other filters, for example, a writer. An update call on any downstream filter will trigger the execution of the mean filter.

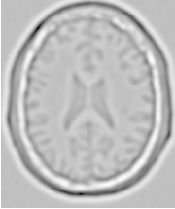

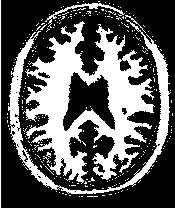

Figure 2.12 illustrates the effect of this filter on a slice of MRI brain image using neighborhood radii of {1, 1}, which corresponds to a 3×3 classical neighborhood. It can be seen from this picture that edges are rapidly degraded by the diffusion of intensity values among neighbors.

The source code for this section can be found in the file

MedianImageFilter.cxx.

The itk::MedianImageFilter is commonly used as a robust approach for noise reduction. This filter is particularly effective against salt-and-pepper noise. In other words, it is robust to the presence of gray-level outliers. MedianImageFilter computes the value of each output pixel as the statistical median of the neighborhood of values around the corresponding input pixel. The following figure illustrates the local effect of this filter in a 2D case. The statistical median of the neighborhood on the left is passed as the output value associated with the pixel at the center of the neighborhood.

This filter will work on images of any dimension thanks to the internal use of itk::NeighborhoodIterator and itk::NeighborhoodOperator. The size of the neighborhood over which the median is computed can be set by the user.
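A minimal sketch of the median rule shows why an isolated outlier is removed rather than spread. It is plain C++ with a hypothetical medianFilter() function and edge replication at the border, both assumptions of this sketch rather than the ITK implementation.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Clamp an index into [0, size - 1] so edge pixels are replicated.
static int clampIndex(int i, int size) { return i < 0 ? 0 : (i >= size ? size - 1 : i); }

// Median filter on a row-major 2-D image over a (2*rx + 1) x (2*ry + 1) box.
std::vector<int> medianFilter(const std::vector<int>& in, int width, int height,
                              int rx, int ry) {
  std::vector<int> out(in.size());
  std::vector<int> window;
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      window.clear();
      for (int dy = -ry; dy <= ry; ++dy) {
        for (int dx = -rx; dx <= rx; ++dx) {
          window.push_back(in[static_cast<std::size_t>(
              clampIndex(y + dy, height) * width + clampIndex(x + dx, width))]);
        }
      }
      // The box holds an odd number of samples, so the median is the middle one;
      // nth_element finds it without fully sorting the window.
      auto middle = window.begin() + static_cast<std::ptrdiff_t>(window.size() / 2);
      std::nth_element(window.begin(), middle, window.end());
      out[static_cast<std::size_t>(y * width + x)] = *middle;
    }
  }
  return out;
}
```

With a 3×3 window, a single 255 among 10s is always an extreme value of the window and never reaches the middle position, so every output pixel is 10.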

The header file corresponding to this filter should be included first.

Then the pixel and image types of the input and output must be defined.

Using the image types, it is now possible to define the filter type and create the filter object.

The size of the neighborhood is defined along every dimension by passing a SizeType object with the corresponding values. The value on each dimension is used as the semi-size (radius) of a rectangular box. For example, in 2D a size of {1, 2} will result in a 3×5 neighborhood.

The input to the filter can be taken from any other filter, for example a reader. The output can be passed down the pipeline to other filters, for example, a writer. An update call on any downstream filter will trigger the execution of the median filter.

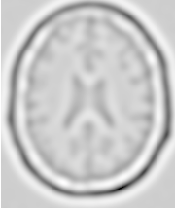

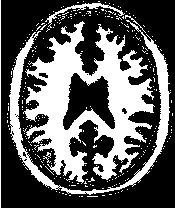

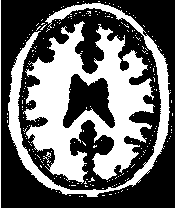

Figure 2.13 illustrates the effect of the MedianImageFilter on a slice of MRI brain image using a neighborhood radius of {1, 1}, which corresponds to a 3×3 classical neighborhood. The filtered image demonstrates the moderate tendency of the median filter to preserve edges.

Mathematical morphology has proved to be a powerful resource for image processing and analysis [55]. ITK implements mathematical morphology filters using NeighborhoodIterators and itk::NeighborhoodOperators. The toolkit contains two types of image morphology algorithms: filters that operate on binary images and filters that operate on grayscale images.

The source code for this section can be found in the file

MathematicalMorphologyBinaryFilters.cxx.

The following section illustrates the use of filters that perform basic mathematical morphology operations on binary images. The itk::BinaryErodeImageFilter and itk::BinaryDilateImageFilter are described here. The filter names clearly specify the type of image on which they operate. The header files required to construct a simple example of the use of the mathematical morphology filters are included below.

The following code defines the input and output pixel types and their associated image types.

Mathematical morphology operations are implemented by applying an operator over the neighborhood of each input pixel. The combination of the rule and the neighborhood is known as a structuring element. Although some rules have become de facto standards for image processing, there is a good deal of freedom as to what kind of algorithmic rule should be applied to the neighborhood. The implementation in ITK follows the typical rule of minimum for erosion and maximum for dilation.

The structuring element is implemented as a NeighborhoodOperator. In particular, the default structuring element is the itk::BinaryBallStructuringElement class. This class is instantiated using the pixel type and dimension of the input image.

The structuring element type is then used along with the input and output image types for instantiating the type of the filters.

The filters can now be created by invoking the New() method and assigning the result to itk::SmartPointers.

The structuring element is not a reference counted class. Thus it is created as a C++ stack object instead of using New() and SmartPointers. The radius of the neighborhood associated with the structuring element is defined with the SetRadius() method and the CreateStructuringElement() method is invoked in order to initialize the operator. The resulting structuring element is passed to the mathematical morphology filter through the SetKernel() method, as illustrated below.

A binary image is provided as input to the filters. This image might be, for example, the output of a binary threshold image filter.

The values that correspond to “objects” in the binary image are specified with the methods SetErodeValue() and SetDilateValue(). The value passed to these methods will be considered the value over which the dilation and erosion rules will apply.
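The erosion and dilation rules on a binary image can be sketched as follows. This is plain C++, not the ITK filters: binaryMorphology() and makeBall() are hypothetical, the disk rasterization may differ from the toolkit's ball structuring element, and neighbors outside the image are simply ignored. Only pixels equal to objectValue are treated as the object, mirroring the role of the erode and dilate values.

```cpp
#include <cstddef>
#include <vector>

struct Offset { int dx; int dy; };

// Offsets of a 2-D disk: all integer offsets with dx*dx + dy*dy <= radius^2.
// A simplified stand-in for a ball structuring element.
std::vector<Offset> makeBall(int radius) {
  std::vector<Offset> ball;
  for (int dy = -radius; dy <= radius; ++dy)
    for (int dx = -radius; dx <= radius; ++dx)
      if (dx * dx + dy * dy <= radius * radius) ball.push_back({dx, dy});
  return ball;
}

// Binary erosion (dilate == false) or dilation (dilate == true).
// Erosion: an object pixel becomes backgroundValue if any in-image pixel under
// the element is not objectValue. Dilation: a pixel becomes objectValue if any
// in-image pixel under the (symmetric) element is objectValue.
std::vector<int> binaryMorphology(const std::vector<int>& in, int width, int height,
                                  const std::vector<Offset>& element, int objectValue,
                                  int backgroundValue, bool dilate) {
  std::vector<int> out(in);
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      const std::size_t index = static_cast<std::size_t>(y * width + x);
      if (!dilate && in[index] != objectValue) continue;  // erosion only shrinks objects
      bool anyObject = false;
      bool allObject = true;
      for (const Offset& o : element) {
        const int xx = x + o.dx;
        const int yy = y + o.dy;
        if (xx < 0 || yy < 0 || xx >= width || yy >= height) continue;
        const bool isObject = in[static_cast<std::size_t>(yy * width + xx)] == objectValue;
        anyObject = anyObject || isObject;
        allObject = allObject && isObject;
      }
      if (dilate && anyObject) out[index] = objectValue;
      if (!dilate && !allObject) out[index] = backgroundValue;
    }
  }
  return out;
}
```

With a radius-1 disk (a five-pixel cross), eroding a 3×3 square leaves only its center pixel, while dilating it extends each side by one pixel, giving 21 object pixels.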

The filter is executed by invoking its Update() method, or by updating any downstream filter, such as an image writer.

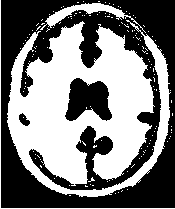

Figure 2.14 illustrates the effect of the erosion and dilation filters on a binary image from an MRI brain slice. The figure shows how these operations can be used to remove spurious details from segmented images.

The source code for this section can be found in the file

MathematicalMorphologyGrayscaleFilters.cxx.

The following section illustrates the use of filters for performing basic mathematical morphology operations on grayscale images. The itk::GrayscaleErodeImageFilter and itk::GrayscaleDilateImageFilter are covered in this example. The filter names clearly specify the type of image on which they operate. The header files required for a simple example of the use of grayscale mathematical morphology filters are presented below.

The following code defines the input and output pixel types and their associated image types.

Mathematical morphology operations are based on the application of an operator over a neighborhood of each input pixel. The combination of the rule and the neighborhood is known as a structuring element. Although some rules have become the de facto standard in image processing, there is a good deal of freedom as to what kind of algorithmic rule should be applied to the neighborhood. The implementation in ITK follows the typical rule of minimum for erosion and maximum for dilation.
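For grayscale images the same rules become a minimum and a maximum over the structuring element. The sketch below is plain C++ with a hypothetical grayscaleMorphology() function. It uses a flat square box instead of a ball and ignores neighbors outside the image, both simplifications chosen for this sketch.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Flat grayscale erosion (dilate == false) or dilation (dilate == true):
// each output pixel is the minimum (erosion) or maximum (dilation) of the
// in-image input pixels within a (2r + 1) x (2r + 1) box.
std::vector<int> grayscaleMorphology(const std::vector<int>& in, int width, int height,
                                     int r, bool dilate) {
  std::vector<int> out(in.size());
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      int best = in[static_cast<std::size_t>(y * width + x)];
      for (int dy = -r; dy <= r; ++dy) {
        for (int dx = -r; dx <= r; ++dx) {
          const int xx = x + dx, yy = y + dy;
          if (xx < 0 || yy < 0 || xx >= width || yy >= height) continue;
          const int v = in[static_cast<std::size_t>(yy * width + xx)];
          best = dilate ? std::max(best, v) : std::min(best, v);
        }
      }
      out[static_cast<std::size_t>(y * width + x)] = best;
    }
  }
  return out;
}
```

On the single-row input {1, 5, 2, 8, 3} with r = 1, erosion yields {1, 1, 2, 2, 3} and dilation yields {5, 5, 8, 8, 8}.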

The structuring element is implemented as an itk::NeighborhoodOperator. In particular, the default structuring element is the itk::BinaryBallStructuringElement class. This class is instantiated using the pixel type and dimension of the input image.

The structuring element type is then used along with the input and output image types for instantiating the type of the filters.

The filters can now be created by invoking the New() method and assigning the result to SmartPointers.

The structuring element is not a reference counted class. Thus it is created as a C++ stack object instead of using New() and SmartPointers. The radius of the neighborhood associated with the structuring element is defined with the SetRadius() method and the CreateStructuringElement() method is invoked in order to initialize the operator. The resulting structuring element is passed to the mathematical morphology filter through the SetKernel() method, as illustrated below.

A grayscale image is provided as input to the filters. This image might be, for example, the output of a reader.

The filter is executed by invoking its Update() method, or by updating any downstream filter, such as an image writer.

Figure 2.15 illustrates the effect of the erosion and dilation filters on a grayscale MRI brain slice. The figure shows how these operations can be used to remove spurious details from images.

Voting filters are quite a generic family of filters. In fact, both the Dilate and Erode filters from Mathematical Morphology are very particular cases of the broader family of voting filters. In a voting filter, the outcome of a pixel is decided by counting the number of pixels in its neighborhood and applying a rule to the result of that counting. For example, the typical implementation of erosion in terms of a voting filter will be to label a foreground pixel as background if the number of background neighbors is greater than or equal to 1. In this context, you could imagine variations of erosion in which the count is changed, for example to require at least 3 background neighbors before a foreground pixel is relabeled as background.
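Erosion written as a counting rule makes the connection to voting filters explicit. The following plain C++ sketch uses a hypothetical votingErode() function, not a toolkit class. It considers the 8 neighbors of a 3×3 window on a 0/1 image, and neighbors outside the image do not vote, an assumption of this sketch.

```cpp
#include <cstddef>
#include <vector>

// Erosion as a voting filter on a 0/1 image with a 3x3 neighborhood: a
// foreground pixel (1) becomes background (0) when at least
// minBackgroundVotes of its in-image 8 neighbors are background.
// minBackgroundVotes == 1 is classical erosion; larger values are weaker.
std::vector<int> votingErode(const std::vector<int>& in, int width, int height,
                             int minBackgroundVotes) {
  std::vector<int> out(in);
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      const std::size_t index = static_cast<std::size_t>(y * width + x);
      if (in[index] != 1) continue;
      int backgroundVotes = 0;
      for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
          const int xx = x + dx, yy = y + dy;
          if ((dx == 0 && dy == 0) || xx < 0 || yy < 0 || xx >= width || yy >= height) continue;
          if (in[static_cast<std::size_t>(yy * width + xx)] == 0) ++backgroundVotes;
        }
      }
      if (backgroundVotes >= minBackgroundVotes) out[index] = 0;
    }
  }
  return out;
}
```

On a 3×3 foreground square inside a 5×5 image, a threshold of 1 keeps only the center pixel, as classical erosion would. A threshold of 4 also keeps the four edge-center pixels, which have only 3 background neighbors each.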

One case of a voting filter is the BinaryMedianImageFilter. This filter is equivalent to applying a Median filter over a binary image. Having a binary image as input makes it possible to optimize the execution of the filter since there is no real need for sorting the pixels according to their frequency in the neighborhood.

The source code for this section can be found in the file

BinaryMedianImageFilter.cxx.

The itk::BinaryMedianImageFilter is commonly used as a robust approach for noise reduction. BinaryMedianImageFilter computes the value of each output pixel as the statistical median of the neighborhood of values around the corresponding input pixel. When the input images are binary, the implementation can be optimized by simply counting the number of pixels ON/OFF around the current pixel.
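The counting shortcut can be sketched in plain C++ as follows. binaryMedian() is a hypothetical function, not the ITK filter, and it replicates edge pixels at the border.

```cpp
#include <cstddef>
#include <vector>

// Clamp an index into [0, size - 1] so edge pixels are replicated.
static int clampIndex(int i, int size) { return i < 0 ? 0 : (i >= size ? size - 1 : i); }

// Binary median by counting: in an odd-sized box of n pixels that are either
// foreground or background, the median is foreground exactly when more than
// n / 2 of them are foreground, so no sorting is required.
std::vector<int> binaryMedian(const std::vector<int>& in, int width, int height,
                              int rx, int ry, int foreground, int background) {
  std::vector<int> out(in.size());
  const int n = (2 * rx + 1) * (2 * ry + 1);  // always odd
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      int count = 0;
      for (int dy = -ry; dy <= ry; ++dy) {
        for (int dx = -rx; dx <= rx; ++dx) {
          const int xx = clampIndex(x + dx, width);
          const int yy = clampIndex(y + dy, height);
          if (in[static_cast<std::size_t>(yy * width + xx)] == foreground) ++count;
        }
      }
      out[static_cast<std::size_t>(y * width + x)] = 2 * count > n ? foreground : background;
    }
  }
  return out;
}
```

An isolated foreground pixel is removed and a single-pixel hole is filled, since in each case the odd pixel is a minority of 1 out of 9.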

This filter will work on images of any dimension thanks to the internal use of itk::NeighborhoodIterator and itk::NeighborhoodOperator. The size of the neighborhood over which the median is computed can be set by the user.

The header file corresponding to this filter should be included first.

Then the pixel and image types of the input and output must be defined.

Using the image types, it is now possible to define the filter type and create the filter object.

The size of the neighborhood is defined along every dimension by passing a SizeType object with the corresponding values. The value on each dimension is used as the semi-size (radius) of a rectangular box. For example, in 2D a size of {1, 2} will result in a 3×5 neighborhood.

The input to the filter can be taken from any other filter, for example a reader. The output can be passed down the pipeline to other filters, for example, a writer. An update call on any downstream filter will trigger the execution of the median filter.

Figure 2.16 illustrates the effect of the BinaryMedianImageFilter on a slice of MRI brain image using a neighborhood radius of {2, 2}, which corresponds to a 5×5 classical neighborhood. The filtered image demonstrates the capability of this filter for reducing noise both in the background and foreground of the image, as well as smoothing the contours of the regions.

The typical effect of median filtering on a noisy digital image is a dramatic reduction in impulse noise spikes. The filter also tends to preserve brightness differences across signal steps, resulting in reduced blurring of regional boundaries, and it tends to preserve the positions of boundaries in an image.

Figure 2.17 shows the effect of running the median filter with a 3×3 classical window 1, 10 and 50 times. There is a trade-off between noise reduction and image sharpness when the window size is increased.

Another variation of voting filters is the Hole Filling filter. This filter converts background pixels into foreground only when the number of foreground pixels is a majority of the neighbors. By selecting the size of the majority, this filter can be tuned to fill in holes of different sizes. To be more precise, the effect of the filter is actually related to the curvature of the edge in which the pixel is located.

The source code for this section can be found in the file

VotingBinaryHoleFillingImageFilter.cxx.

The itk::VotingBinaryHoleFillingImageFilter applies a voting operation in order to fill in cavities. This can be used for smoothing contours and for filling holes in binary images.

The header file corresponding to this filter should be included first.

Then the pixel and image types of the input and output must be defined.

Using the image types, it is now possible to define the filter type and create the filter object.

The size of the neighborhood is defined along every dimension by passing a SizeType object with the corresponding values. The value on each dimension is used as the semi-size (radius) of a rectangular box. For example, in 2D a size of {1, 2} will result in a 3×5 neighborhood.

Since the filter is expecting a binary image as input, we must specify the levels that are going to be considered background and foreground. This is done with the SetForegroundValue() and SetBackgroundValue() methods.

We must also specify the majority threshold that is going to be used as the decision criterion for converting a background pixel into a foreground pixel. The rule of conversion is that a background pixel will be converted into a foreground pixel if the number of foreground neighbors exceeds half the number of neighbors by at least the majority value. For example, in a 2D image with a neighborhood of radius 1, the neighborhood will have size 3×3. If we set the majority value to 2, then we require the number of foreground neighbors to be at least (3×3 - 1)/2 + 2 = 6. This is done with the SetMajorityThreshold() method.
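A single pass of this rule can be sketched in plain C++. The holeFillingPass() function is hypothetical and not the toolkit's implementation. It only counts neighbors that lie inside the image, which is an assumption of this sketch. With radius {1, 1} and a majority of 2, the birth threshold is (9 - 1)/2 + 2 = 6 foreground neighbors.

```cpp
#include <cstddef>
#include <vector>

// One pass of majority-vote hole filling on a row-major 2-D image. A
// background pixel becomes foreground when its foreground neighbors number
// at least (boxSize - 1) / 2 + majority. Votes are read from `in` only, so
// the result does not depend on scan order. Returns how many pixels changed.
int holeFillingPass(const std::vector<int>& in, std::vector<int>& out, int width,
                    int height, int rx, int ry, int foreground, int background,
                    int majority) {
  const int boxSize = (2 * rx + 1) * (2 * ry + 1);
  // Radius {1, 1} and majority 2 give (9 - 1) / 2 + 2 = 6.
  const int birthThreshold = (boxSize - 1) / 2 + majority;
  out = in;
  int changed = 0;
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      const std::size_t index = static_cast<std::size_t>(y * width + x);
      if (in[index] != background) continue;  // only background pixels can change
      int foregroundNeighbors = 0;
      for (int dy = -ry; dy <= ry; ++dy) {
        for (int dx = -rx; dx <= rx; ++dx) {
          const int xx = x + dx, yy = y + dy;
          if ((dx == 0 && dy == 0) || xx < 0 || yy < 0 || xx >= width || yy >= height) continue;
          if (in[static_cast<std::size_t>(yy * width + xx)] == foreground) ++foregroundNeighbors;
        }
      }
      if (foregroundNeighbors >= birthThreshold) {
        out[index] = foreground;
        ++changed;
      }
    }
  }
  return changed;
}
```

In a 3×3 image whose center is the only background pixel, the center has 8 foreground neighbors and is filled. If only 5 of its neighbors are foreground, it falls one short of the threshold and stays background.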

The input to the filter can be taken from any other filter, for example a reader. The output can be passed down the pipeline to other filters, for example, a writer. An update call on any downstream filter will trigger the execution of the hole filling filter.

Figure 2.18 illustrates the effect of the VotingBinaryHoleFillingImageFilter on a thresholded slice of MRI brain image using neighborhood radii of {1, 1}, {2, 2} and {3, 3} that correspond respectively to neighborhoods of size 3×3, 5×5, 7×7. The filtered image demonstrates the capability of this filter for reducing noise both in the background and foreground of the image, as well as smoothing the contours of the regions.

The Hole Filling filter can be used in an iterative way, by applying it repeatedly until no pixel changes. In this context, the filter can be seen as a binary variation of a Level Set filter.

The source code for this section can be found in the file

VotingBinaryIterativeHoleFillingImageFilter.cxx.

The itk::VotingBinaryIterativeHoleFillingImageFilter applies a voting operation in order to fill in cavities. This can be used for smoothing contours and for filling holes in binary images. This filter runs an itk::VotingBinaryHoleFillingImageFilter internally until no pixels change or the maximum number of iterations has been reached.

The header file corresponding to this filter should be included first.

Then the pixel and image types must be defined. Note that this filter requires the input and output images to be of the same type, therefore a single image type is required for the template instantiation.

Using the image type, it is now possible to define the filter type and create the filter object.

The size of the neighborhood is defined along every dimension by passing a SizeType object with the corresponding values. The value on each dimension is used as the semi-size (radius) of a rectangular box. For example, in 2D a size of {1, 2} will result in a 3×5 neighborhood.

Since the filter is expecting a binary image as input, we must specify the levels that are going to be considered background and foreground. This is done with the SetForegroundValue() and SetBackgroundValue() methods.

We must also specify the majority threshold that is going to be used as the decision criterion for converting a background pixel into a foreground pixel. The rule of conversion is that a background pixel will be converted into a foreground pixel if the number of foreground neighbors exceeds half the number of neighbors by at least the majority value. For example, in a 2D image with a neighborhood of radius 1, the neighborhood will have size 3×3. If we set the majority value to 2, then we require the number of foreground neighbors to be at least (3×3 - 1)/2 + 2 = 6. This is done with the SetMajorityThreshold() method.

Finally we specify the maximum number of iterations for which this filter should run. The number of iterations will determine the maximum size of holes and cavities that this filter will be able to fill. The more iterations you run, the larger the cavities that will be filled in.
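The stopping logic can be sketched independently of the voting rule itself. In the plain C++ below, runUntilStable() is a hypothetical helper. The pass argument stands for any single-pass filter that updates the image in place and reports how many pixels it changed, such as one hole-filling pass.

```cpp
#include <functional>
#include <vector>

// Hypothetical control loop: rerun `pass` until it changes no pixel or until
// maximumIterations passes have run. Returns the number of passes executed.
unsigned runUntilStable(std::vector<int>& image,
                        const std::function<unsigned(std::vector<int>&)>& pass,
                        unsigned maximumIterations) {
  unsigned iterations = 0;
  while (iterations < maximumIterations) {
    const unsigned changed = pass(image);
    ++iterations;
    if (changed == 0) break;  // converged: another pass would change nothing
  }
  return iterations;
}
```

The returned count is at most maximumIterations. A low cap stops the process before large cavities are completely filled, which is why the number of iterations bounds the size of the holes that can be closed.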

The input to the filter can be taken from any other filter, for example a reader. The output can be passed down the pipeline to other filters, for example, a writer. An update call on any downstream filter will trigger the execution of the iterative hole filling filter.

Figure 2.19 illustrates the effect of the VotingBinaryIterativeHoleFillingImageFilter on a thresholded slice of MRI brain image using neighborhood radii of {1, 1}, {2, 2} and {3, 3} that correspond respectively to neighborhoods of size 3×3, 5×5, 7×7. The filtered image demonstrates the capability of this filter for reducing noise both in the background and foreground of the image, as well as smoothing the contours of the regions.

Real image data has a level of uncertainty which is manifested in the variability of measures assigned to pixels. This uncertainty is usually interpreted as noise and considered an undesirable component of the image data. This section describes several methods that can be applied to reduce noise in images.

Blurring is the traditional approach for removing noise from images. It is usually implemented in the form of a convolution with a kernel. The effect of blurring on the image spectrum is to attenuate high spatial frequencies. Different kernels attenuate frequencies in different ways. One of the most commonly used kernels is the Gaussian. Two implementations of Gaussian smoothing are available in the toolkit. The first one is based on a traditional convolution while the other is based on the application of IIR filters that approximate the convolution with a Gaussian [15, 16].

The source code for this section can be found in the file

DiscreteGaussianImageFilter.cxx.

Figure 2.20: Discretized Gaussian.

The itk::DiscreteGaussianImageFilter computes the convolution of the input image with a Gaussian kernel. This is done in N dimensions by taking advantage of the separability of the Gaussian kernel. A one-dimensional Gaussian function is discretized on a convolution kernel. The size of the kernel is extended until there are enough discrete points in the Gaussian to ensure that a user-provided maximum error is not exceeded. Since the size of the kernel is unknown a priori, it is necessary to impose a limit to its growth. The user can thus provide a value to be the maximum admissible size of the kernel. Discretization error is defined as the difference between the area under the discrete Gaussian curve (which has finite support) and the area under the continuous Gaussian.
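The kernel-size logic described above can be sketched as follows. This plain C++ function, makeGaussianKernel(), is hypothetical. For simplicity it samples the continuous Gaussian instead of using the Lindeberg discrete kernel that the toolkit uses, so its values differ slightly from ITK's. It grows the kernel one tap per side until the captured area reaches 1 - maximumError or the width limit is hit, then renormalizes the truncated kernel to unit sum.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch: symmetric 1-D Gaussian kernel whose width is chosen from a
// maximum-error criterion and capped at maximumWidth taps.
// Assumption: plain sampling of the continuous Gaussian is used here.
std::vector<double> makeGaussianKernel(double variance, double maximumError,
                                       std::size_t maximumWidth) {
  const double pi = 3.14159265358979323846;
  auto g = [&](int n) {
    return std::exp(-(n * n) / (2.0 * variance)) / std::sqrt(2.0 * pi * variance);
  };
  std::vector<double> half{g(0)};  // half[k] holds the tap at offset k
  double area = half[0];
  // Grow one tap per side while the captured area is too small and the
  // resulting width (2 * radius + 1) stays within the cap.
  while (area < 1.0 - maximumError && 2 * half.size() + 1 <= maximumWidth) {
    const double v = g(static_cast<int>(half.size()));
    half.push_back(v);
    area += 2.0 * v;
  }
  // Assemble the full symmetric kernel and renormalize it to unit sum,
  // so truncation does not change the mean intensity of the image.
  std::vector<double> kernel(half.rbegin(), half.rend());
  kernel.insert(kernel.end(), half.begin() + 1, half.end());
  double sum = 0.0;
  for (double v : kernel) sum += v;
  for (double& v : kernel) v /= sum;
  return kernel;
}
```

For a variance of 1 and a maximum error of 0.01, the taps at offsets 0, ±1 and ±2 already capture about 0.991 of the area, so the kernel has 5 taps. With a variance of 16 and a width cap of 5, the cap is reached first and the Gaussian is truncated, as the text warns.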

Gaussian kernels in ITK are constructed according to the theory of Tony Lindeberg [34] so that smoothing and derivative operations commute before and after discretization. In other words, finite difference derivatives on an image I that has been smoothed by convolution with the Gaussian are equivalent to finite differences computed on I by convolving with a derivative of the Gaussian.

The first step required to use this filter is to include its header file. As with other examples, the includes here are truncated to those specific for this example.

Types should be chosen for the pixels of the input and output images. Image types can be instantiated using the pixel type and dimension.

The discrete Gaussian filter type is instantiated using the input and output image types. A corresponding filter object is created.

The input image can be obtained from the output of another filter. Here, an image reader is used as the source.

The filter requires the user to provide a value for the variance associated with the Gaussian kernel. The method SetVariance() is used for this purpose. The discrete Gaussian is constructed as a convolution kernel. The maximum kernel size can be set by the user. Note that the combination of variance and kernel-size values may result in a truncated Gaussian kernel.

Finally, the filter is executed by invoking the Update() method.

If the output of this filter has been connected to other filters down the pipeline, updating any of the downstream filters will trigger the execution of this one. For example, a writer could be used after the filter.

Figure 2.21 illustrates the effect of this filter on an MRI proton density image of the brain.

Note that large Gaussian variances will produce large convolution kernels and correspondingly longer computation times. Unless a high degree of accuracy is required, it may be more desirable to use the approximating itk::RecursiveGaussianImageFilter with large variances.

The source code for this section can be found in the file

BinomialBlurImageFilter.cxx.

The itk::BinomialBlurImageFilter computes a nearest neighbor average along each dimension. The process is repeated a number of times, as specified by the user. In principle, after a large number of iterations the result will approach the convolution with a Gaussian.

The first step required to use this filter is to include its header file.

Types should be chosen for the pixels of the input and output images. Image types can be instantiated using the pixel type and dimension.

The filter type is now instantiated using both the input image and the output image types. Then a filter object is created.

The input image can be obtained from the output of another filter. Here, an image reader is used as the source. The number of repetitions is set with the SetRepetitions() method. Computation time will increase linearly with the number of repetitions selected. Finally, the filter can be executed by calling the Update() method.
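One repetition can be sketched along a single dimension as follows. The binomialBlur1D() function is hypothetical plain C++. It assumes each repetition applies the nearest-neighbor weights 1/4, 1/2, 1/4 and replicates edge samples, which may not match every detail of the toolkit's filter. Because that kernel has a variance of 1/2 pixel², n repetitions give an equivalent Gaussian with σ = √(n/2) pixels.

```cpp
#include <cstddef>
#include <vector>

// Sketch of binomial blurring along one dimension: each repetition replaces
// every sample by the weighted nearest-neighbor average 1/4, 1/2, 1/4, with
// edge samples replicated (an assumption of this sketch).
std::vector<double> binomialBlur1D(std::vector<double> signal, unsigned repetitions) {
  const std::size_t n = signal.size();
  std::vector<double> next(n);
  for (unsigned r = 0; r < repetitions; ++r) {
    for (std::size_t i = 0; i < n; ++i) {
      const double left = signal[i == 0 ? 0 : i - 1];
      const double right = signal[i + 1 < n ? i + 1 : n - 1];
      next[i] = 0.25 * left + 0.5 * signal[i] + 0.25 * right;
    }
    signal.swap(next);  // the result of this repetition feeds the next one
  }
  return signal;
}
```

Applied twice to a unit impulse, the sketch produces the binomial weights 1, 4, 6, 4, 1 divided by 16. Each extra repetition costs one more pass over the data, consistent with the linear growth in computation time.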

Figure 2.22 illustrates the effect of this filter on an MRI proton density image of the brain.

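Note that the standard deviation σ of the equivalent Gaussian cannot be set directly: each repetition contributes a fixed amount of smoothing, so σ is controlled only through the number of repetitions. In the spatial spectrum, the effect of every iteration of this filter is like a multiplication with a cardinal sine (sinc) function.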

The source code for this section can be found in the file

SmoothingRecursiveGaussianImageFilter.cxx.

The classical method of smoothing an image by convolution with a Gaussian kernel has the drawback that it is slow when the standard deviation σ of the Gaussian is large. This is due to the larger size of the kernel, which results in a higher number of computations per pixel.

The itk::RecursiveGaussianImageFilter implements an approximation of convolution with the Gaussian and its derivatives by using IIR (infinite impulse response) filters. In practice this filter requires a constant number of operations for approximating the convolution, regardless of the σ value [15, 16].

The first step required to use this filter is to include its header file.

Types should be selected for the desired input and output pixels.

The input and output image types are instantiated using the pixel types.

The filter type is now instantiated using both the input image and the output image types.

This filter applies the approximation of the convolution along a single dimension. It is therefore necessary to concatenate several of these filters to produce smoothing in all directions. In this example, we create a pair of filters since we are processing a 2D image. The filters are created by invoking the New() method and assigning the result to an itk::SmartPointer.

Since each one of the newly created filters has the potential to perform filtering along any dimension, we have to restrict each one to a particular direction. This is done with the SetDirection() method.

The itk::RecursiveGaussianImageFilter can approximate the convolution with the Gaussian or with its first and second derivatives. We select one of these options by using the SetOrder() method. Note that the argument is an enum whose values can be ZeroOrder, FirstOrder and SecondOrder. For example, to compute the x partial derivative we should select FirstOrder for x and ZeroOrder for y. Here we want only to smooth in x and y, so we select ZeroOrder in both directions.

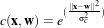

There are two typical ways of normalizing Gaussians depending on their application. For scale-space analysis it is desirable to use a normalization that will preserve the maximum value of the input. This normalization is represented by the following equation.

| (2.4) |

In applications that use the Gaussian as a solution of the diffusion equation it is desirable to use a normalization that preserves the integral of the signal. This last approach can be seen as a conservation of mass principle. This is represented by the following equation.

| (2.5) |

The itk::RecursiveGaussianImageFilter has a boolean flag that allows users to select between these two normalization options. Selection is done with the method SetNormalizeAcrossScale(). Enable this flag when analyzing an image across scale-space. In the current example, this setting has no impact because we are actually renormalizing the output to the dynamic range of the reader, so we simply disable the flag.

The input image can be obtained from the output of another filter. Here, an image reader is used as the source. The image is passed to the x filter and then to the y filter. The reason for keeping these two filters separate is that it is common in scale-space applications to compute not only the smoothing but also combinations of derivatives at different orders and smoothing. Some factorization is possible when separate filters are used to generate the intermediate results. Here this capability is less interesting, though, since we only want to smooth the image in all directions.
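The effect of chaining one filter per direction can be sketched in plain C++. Here gaussianKernel(), smoothAlong() and smooth2D() are hypothetical functions, and a truncated, sampled Gaussian convolution (radius ceil(3σ), σ in pixels) stands in for the recursive IIR approximation. The point is only that an x pass followed by a y pass smooths in both directions, because the 2D Gaussian is separable.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Sampled Gaussian truncated at radius ceil(3 sigma), normalized to unit sum.
std::vector<double> gaussianKernel(double sigma) {
  const int radius = static_cast<int>(std::ceil(3.0 * sigma));
  std::vector<double> k(static_cast<std::size_t>(2 * radius + 1));
  double sum = 0.0;
  for (int d = -radius; d <= radius; ++d) {
    const double v = std::exp(-(d * d) / (2.0 * sigma * sigma));
    k[static_cast<std::size_t>(d + radius)] = v;
    sum += v;
  }
  for (double& v : k) v /= sum;
  return k;
}

// Smooth a row-major image along one axis (0 = x, 1 = y), replicating edge
// pixels; the axis argument plays the role of the direction setting.
std::vector<double> smoothAlong(const std::vector<double>& in, int width, int height,
                                int axis, double sigma) {
  const std::vector<double> k = gaussianKernel(sigma);
  const int radius = static_cast<int>(k.size() / 2);
  std::vector<double> out(in.size());
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      double acc = 0.0;
      for (int d = -radius; d <= radius; ++d) {
        int xx = axis == 0 ? x + d : x;
        int yy = axis == 1 ? y + d : y;
        xx = xx < 0 ? 0 : (xx >= width ? width - 1 : xx);
        yy = yy < 0 ? 0 : (yy >= height ? height - 1 : yy);
        acc += k[static_cast<std::size_t>(d + radius)] *
               in[static_cast<std::size_t>(yy * width + xx)];
      }
      out[static_cast<std::size_t>(y * width + x)] = acc;
    }
  }
  return out;
}

// Smoothing in all directions: an x pass followed by a y pass.
std::vector<double> smooth2D(const std::vector<double>& in, int width, int height,
                             double sigma) {
  return smoothAlong(smoothAlong(in, width, height, 0, sigma), width, height, 1, sigma);
}
```

For a unit impulse at the center of the image, the result at each pixel is the product of the two 1-D kernel weights, so the smoothed impulse is symmetric in x and y.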

It is now time to select the σ of the Gaussian used to smooth the data. Note that σ must be passed to both filters and that σ is expressed in millimeters. That is, when the smoothing is applied, the filter takes into account the spacing values defined in the image.

Finally the pipeline is executed by invoking the Update() method.

Figure 2.23 illustrates the effect of this filter on an MRI proton density image of the brain using σ values of 3 (left) and 5 (right). The figure shows how the attenuation of noise can be regulated by selecting the appropriate standard deviation. This type of scale-tunable filter is suitable for performing scale-space analysis.

The RecursiveGaussianFilters can also be applied to multi-component images. For instance, the above filter could have been applied with RGBPixel as the pixel type. Each component is then filtered independently. However, the RescaleIntensityImageFilter will not work on RGBPixels since it does not mathematically make sense to rescale the output of multi-component images.

In some cases it is desirable to compute smoothing in restricted regions of the image, or to do it using different parameters that are computed locally. The following sections describe options for applying local smoothing in images.

The source code for this section can be found in the file

GaussianBlurImageFunction.cxx.

The drawback of image denoising (smoothing) is that it tends to blur away the sharp boundaries in the image that help to distinguish between the larger-scale anatomical structures that one is trying to characterize (which also limits the size of the smoothing kernels in most applications). Even in cases where smoothing does not obliterate boundaries, it tends to distort the fine structure of the image and thereby changes subtle aspects of the anatomical shapes in question.

Perona and Malik [45] introduced an alternative to linear filtering that they called anisotropic diffusion. Anisotropic diffusion is closely related to the earlier work of Grossberg [22], who used similar nonlinear diffusion processes to model human vision. The motivation for anisotropic diffusion (also called nonuniform or variable conductance diffusion) is that a Gaussian-smoothed image is a single time slice of the solution to the heat equation whose initial condition is the original image. Thus, the solution to

$$\frac{\partial g(x,y,t)}{\partial t} = \nabla \cdot \nabla g(x,y,t) \qquad (2.6)$$

where $g(x,y,0) = f(x,y)$ is the input image, is $g(x,y,t) = G(\sqrt{2t}) \otimes f(x,y)$, where $G(\sigma)$ is a Gaussian with standard deviation σ.
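The relation σ = √(2t) can be checked numerically. Below is an explicit scheme for the 1D heat equation $g_t = g_{xx}$, with unit spacing and forward Euler in time. Each step increases the variance of an initial impulse by exactly 2·dt, as long as the profile stays away from the grid ends. After a total time t the profile therefore has variance 2t, matching a Gaussian of standard deviation √(2t). The helper names are hypothetical, and the ends of the grid are simply held at zero.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Forward-Euler steps of g_t = g_xx on a 1D grid with unit spacing.
// The end samples are held at zero, so the profile must stay away from them.
std::vector<double> heatSteps(std::vector<double> g, double dt, int steps) {
  assert(dt > 0.0 && dt <= 0.5);  // stability limit of this explicit 1D scheme
  std::vector<double> next(g.size(), 0.0);
  for (int s = 0; s < steps; ++s) {
    for (std::size_t i = 1; i + 1 < g.size(); ++i)
      next[i] = g[i] + dt * (g[i + 1] - 2.0 * g[i] + g[i - 1]);
    if (!next.empty()) { next.front() = 0.0; next.back() = 0.0; }  // fixed zero ends
    g.swap(next);
  }
  return g;
}

// Variance of a nonnegative profile viewed as a distribution over sample index.
double profileVariance(const std::vector<double>& g) {
  double mass = 0.0, mean = 0.0;
  for (std::size_t i = 0; i < g.size(); ++i) { mass += g[i]; mean += i * g[i]; }
  assert(mass > 0.0);
  mean /= mass;
  double var = 0.0;
  for (std::size_t i = 0; i < g.size(); ++i) var += (i - mean) * (i - mean) * g[i];
  return var / mass;
}

// Standard deviation of the equivalent Gaussian after diffusion time t.
double heatSigma(double t) { return std::sqrt(2.0 * t); }
```

Forty steps with dt = 0.25 (t = 10) give a profile whose standard deviation equals √20 ≈ 4.47 up to rounding error.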

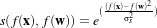

Anisotropic diffusion includes a variable conductance term that, in turn, depends on the differential structure of the image. Thus, the variable conductance can be formulated to limit the smoothing at “edges” in images, as measured by high gradient magnitude, for example.

$$g_t = \nabla \cdot c(|\nabla g|)\, \nabla g \qquad (2.7)$$

where, for notational convenience, we leave off the independent parameters of g and use the subscripts with respect to those parameters to indicate partial derivatives. The function c(|∇g|) is a fuzzy cutoff that reduces the conductance at areas of large |∇g|, and can be any one of a number of functions. The literature has shown

$$c(|\nabla g|) = e^{-\frac{|\nabla g|^2}{2k^2}} \qquad (2.8)$$

to be quite effective. Notice that the conductance term introduces a free parameter k, the conductance parameter, that controls the sensitivity of the process to edge contrast. Thus, anisotropic diffusion entails two free parameters: the conductance parameter, k, and the time parameter, t, which is analogous to σ, the effective width of the filter when using Gaussian kernels.
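The role of k as an edge-contrast threshold is easiest to see in 1D. There Eq. 2.7 becomes $g_t = \partial_x \phi(g_x)$ with flux $\phi(s) = s\,c(|s|)$. For the conductance of Eq. 2.8, $\phi'(s) = c(s)\,(1 - s^2/k^2)$ for $s \ge 0$. The flux therefore grows with gradient magnitude up to $s = k$ and decreases beyond it. Gradients weaker than k are smoothed, while steeper ones receive less and less flux and can even be sharpened. Below is a small sketch that evaluates these functions; the helper names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Conductance of Eq. (2.8): c(s) = exp(-s^2 / (2 k^2)), where s = |grad g|.
double conductance28(double s, double k) {
  assert(k > 0.0);
  return std::exp(-(s * s) / (2.0 * k * k));
}

// 1D diffusive flux magnitude phi(s) = c(s) * s. Its derivative,
// c(s) * (1 - s^2 / k^2), is positive for s < k and negative for s > k.
double flux28(double s, double k) { return conductance28(s, k) * s; }
```

With k = 2, the conductance at s = k equals $e^{-1/2} \approx 0.61$. The flux there is larger than at both s = 1.9 and s = 2.1.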

Equation 2.7 is a nonlinear partial differential equation that can be solved on a discrete grid using finite forward differences. Thus, the smoothed image is obtained only by an iterative process, not by a convolution or a non-stationary linear filter. Typically, the number of iterations required for practical results is small, and large 2D images can be processed in several tens of seconds using carefully written code running on modern, general-purpose, single-processor computers. The technique applies readily and effectively to 3D images, but requires more processing time.
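Below is a minimal sketch of such an iterative, forward-difference solution in 2D. It is written in plain C++ rather than against ITK, and it makes several assumptions of its own:

- unit pixel spacing and zero-flux borders;
- the conductance of Eq. 2.8, evaluated on each nearest-neighbor difference (the four-neighbor discretization of the original Perona-Malik paper);
- the stability bound dt ≤ 0.25 for that scheme.

`peronaMalik2D` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Explicit Perona-Malik iterations on a row-major nx*ny image with unit spacing
// and zero-flux (Neumann) borders. Conductance follows Eq. (2.8), evaluated on
// each nearest-neighbor difference.
std::vector<double> peronaMalik2D(std::vector<double> g, int nx, int ny,
                                  double k, double dt, int iterations) {
  assert(static_cast<int>(g.size()) == nx * ny);
  assert(k > 0.0 && dt > 0.0 && dt <= 0.25);  // stability of the 4-neighbor scheme
  auto c = [k](double d) { return std::exp(-(d * d) / (2.0 * k * k)); };
  std::vector<double> next(g.size());
  for (int it = 0; it < iterations; ++it) {
    for (int y = 0; y < ny; ++y)
      for (int x = 0; x < nx; ++x) {
        const double u = g[y * nx + x];
        double sum = 0.0;  // missing neighbors at the border contribute no flux
        if (x > 0)      { const double d = g[y * nx + x - 1] - u;   sum += c(d) * d; }
        if (x < nx - 1) { const double d = g[y * nx + x + 1] - u;   sum += c(d) * d; }
        if (y > 0)      { const double d = g[(y - 1) * nx + x] - u; sum += c(d) * d; }
        if (y < ny - 1) { const double d = g[(y + 1) * nx + x] - u; sum += c(d) * d; }
        next[y * nx + x] = u + dt * sum;
      }
    g.swap(next);
  }
  return g;
}
```

Consider a 16×16 image with a step of height 100. After 10 iterations, k = 5 leaves the step essentially untouched. A very large k (so that c ≈ 1, i.e. linear diffusion) visibly blurs it. Each neighbor exchange is antisymmetric, so the total intensity is conserved in both cases.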

In the early 1990s several research groups [21, 67] demonstrated the effectiveness of anisotropic diffusion on medical images. In a series of papers on the subject [71, 69, 70, 67, 68, 65], Whitaker presented a detailed analytical and empirical analysis and introduced a smoothing term in the conductance that made the process more robust. He also invented a numerical scheme that virtually eliminated directional artifacts in the original algorithm. Finally, he generalized anisotropic diffusion to vector-valued images, an image processing technique that can be used on vector-valued medical data (such as the color cryosection data of the Visible Human Project).

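For a vector-valued input $F = (f_1, \ldots, f_m): U \mapsto \Re^m$ the process takes the form

$$\frac{\partial f_i}{\partial t} = \nabla \cdot c(\mathcal{D}F)\, \nabla f_i, \qquad i = 1, \ldots, m \qquad (2.9)$$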

where $\mathcal{D}F$ is a dissimilarity measure of $F$, a generalization of the gradient magnitude to vector-valued images. It can incorporate linear and nonlinear coordinate transformations on the range of $F$. In this way, the smoothing of the multiple images associated with vector-valued data is coupled through the conductance term, which fuses the information in the different images. Thus vector-valued, nonlinear diffusion can combine low-level image features (e.g. edges) across all “channels” of a vector-valued image in order to preserve or enhance those features in all image “channels”.

Vector-valued anisotropic diffusion is useful for denoising data from devices that produce multiple values such as MRI or color photography. When performing nonlinear diffusion on a color image, the color channels are diffused separately, but linked through the conductance term. Vector-valued diffusion is also useful for processing registered data from different devices or for denoising higher-order geometric or statistical features from scalar-valued images [65, 72].
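The coupling can be illustrated with a 1D, two-channel sketch of Eq. 2.9. The dissimilarity $\mathcal{D}F$ is assumed to be the Euclidean norm of the per-channel differences between neighbors, which is only one possible choice. The conductance uses the form of Eq. 2.8. `Vec2` and `coupledDiffusion1D` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec2 = std::array<double, 2>;  // hypothetical two-channel pixel

// 1D sketch of Eq. (2.9): all channels share one conductance per half-point,
// computed from D = Euclidean norm of the per-channel neighbor differences.
std::vector<Vec2> coupledDiffusion1D(std::vector<Vec2> f, double k, double dt, int iterations) {
  assert(k > 0.0 && dt > 0.0 && dt <= 0.5);
  const std::size_t n = f.size();
  std::vector<Vec2> next(n);
  std::vector<double> c(n > 0 ? n - 1 : 0);
  for (int it = 0; it < iterations; ++it) {
    for (std::size_t i = 0; i + 1 < n; ++i) {
      double d2 = 0.0;
      for (std::size_t ch = 0; ch < 2; ++ch) {
        const double d = f[i + 1][ch] - f[i][ch];
        d2 += d * d;
      }
      c[i] = std::exp(-d2 / (2.0 * k * k));  // shared conductance, Eq. (2.8) form
    }
    for (std::size_t i = 0; i < n; ++i)
      for (std::size_t ch = 0; ch < 2; ++ch) {
        double flux = 0.0;  // zero flux across both ends
        if (i > 0) flux += c[i - 1] * (f[i - 1][ch] - f[i][ch]);
        if (i + 1 < n) flux += c[i] * (f[i + 1][ch] - f[i][ch]);
        next[i][ch] = f[i][ch] + dt * flux;
      }
    f.swap(next);
  }
  return f;
}
```

Suppose a strong edge in one channel coincides with a weak edge in the other. The shared conductance is then nearly zero at that location, so the weak edge is preserved as well. The same weak edge processed on its own is blurred.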

The output of anisotropic diffusion is an image or set of images that demonstrates reduced noise and texture but preserves, and can also enhance, edges. Such images are useful for a variety of processes including statistical classification, visualization, and geometric feature extraction. Previous work has shown [68] that anisotropic diffusion, over a wide range of conductance parameters, offers quantifiable advantages over linear filtering for edge detection in medical images.

Since the effectiveness of nonlinear diffusion was first demonstrated, numerous variations of this approach have surfaced in the literature [60]. These include alternatives for constructing dissimilarity measures [53], directional (i.e., tensor-valued) conductance terms [64, 3] and level set interpretations [66].

The source code for this section can be found in the file

GradientAnisotropicDiffusionImageFilter.cxx.

The itk::GradientAnisotropicDiffusionImageFilter implements an N-dimensional version of the classic Perona-Malik anisotropic diffusion equation for scalar-valued images [45].

The conductance term for this implementation is chosen as a function of the gradient magnitude of the image at each point, reducing the strength of diffusion at edge pixels.

$$C(\mathbf{x}) = e^{-\left(\frac{\|\nabla U(\mathbf{x})\|}{K}\right)^2} \qquad (2.10)$$

The numerical implementation of this equation is similar to that described in the Perona-Malik paper [45], but uses a more robust technique for gradient magnitude estimation and has been generalized to N-dimensions.
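Eq. 2.10 has the same Gaussian shape as Eq. 2.8 but a different parameterization. Since $2k^2 = K^2$ when $k = K/\sqrt{2}$, the two formulas agree for that choice. The short check below compares them as they are written here. It does not model how the toolkit may scale the conductance parameter internally, and the helper names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Eq. (2.8): c(s) = exp(-s^2 / (2 k^2))
double conductanceEq28(double s, double k) { return std::exp(-(s * s) / (2.0 * k * k)); }

// Eq. (2.10): C(s) = exp(-(s / K)^2), with s = ||grad U(x)||
double conductanceEq210(double s, double K) { return std::exp(-(s / K) * (s / K)); }

// The k of Eq. (2.8) that reproduces Eq. (2.10) for a given K.
double kFromK(double K) { return K / std::sqrt(2.0); }
```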

The first step required to use this filter is to include its header file.

Types should be selected based on the pixel types required for the input and output images. The image types are defined using the pixel type and the dimension.

The filter type is now instantiated using both the input image and the output image types. The filter object is created by the New() method.

The input image can be obtained from the output of another filter. Here, an image reader is used as source.

This filter requires three parameters: the number of iterations to be performed, the time step and the conductance parameter used in the computation of the level set evolution. These parameters are set using the methods SetNumberOfIterations(), SetTimeStep() and SetConductanceParameter() respectively. The filter can be executed by invoking Update().

Typical values for the time step are 0.25 in 2D images and 0.125 in 3D images. The number of iterations is typically set to 5; more iterations result in further smoothing and will increase the computing time linearly.

Figure 2.24 illustrates the effect of this filter on an MRI proton density image of the brain. In this example the filter was run with a time step of 0.25 and 5 iterations. The figure shows how homogeneous regions are smoothed and edges are preserved.

The following classes provide similar functionality:

The source code for this section can be found in the file

CurvatureAnisotropicDiffusionImageFilter.cxx.

The itk::CurvatureAnisotropicDiffusionImageFilter performs anisotropic diffusion on an image using a modified curvature diffusion equation (MCDE).

MCDE does not exhibit the edge-enhancing properties of classic anisotropic diffusion. Under certain conditions, classic anisotropic diffusion can undergo a “negative” diffusion that enhances the contrast of edges. Equations of the form of MCDE always undergo positive diffusion, with the conductance term only varying the strength of that diffusion.

Qualitatively, MCDE compares well with other nonlinear diffusion techniques. It is less sensitive to contrast than classic Perona-Malik style diffusion, and it preserves finer structures in images. There is a potential speed trade-off for using this function in place of itkGradientNDAnisotropicDiffusionFunction: each iteration of the solution takes roughly twice as long, although fewer iterations may be required to reach an acceptable solution.

The MCDE equation is given as:

$$f_t = |\nabla f|\, \nabla \cdot c(|\nabla f|) \frac{\nabla f}{|\nabla f|} \qquad (2.11)$$

where the conductance modified curvature term is

$$\nabla \cdot \frac{\nabla f}{|\nabla f|} \qquad (2.12)$$
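Eqs. 2.11 and 2.12 can be discretized in many ways. The sketch below is one simple explicit scheme of our own choosing, not the numerics of itk::CurvatureAnisotropicDiffusionImageFilter. It makes these choices:

- normalized, conductance-weighted fluxes are computed at half-pixel positions, with cross derivatives averaged from central differences;
- their divergence is multiplied by a central-difference $|\nabla f|$;
- border pixels are held fixed;
- the conductance takes the form of Eq. 2.8.

`mcdeStep` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// One explicit step of f_t = |grad f| * div( c(|grad f|) * grad f / |grad f| )
// on a row-major nx*ny image with unit spacing. Border pixels are held fixed;
// eps regularizes the normalization where the gradient vanishes.
std::vector<double> mcdeStep(const std::vector<double>& f, int nx, int ny,
                             double k, double dt, double eps = 1e-12) {
  assert(static_cast<int>(f.size()) == nx * ny && nx >= 3 && ny >= 3);
  auto at = [&](int x, int y) { return f[y * nx + x]; };
  auto cond = [k](double s) { return std::exp(-(s * s) / (2.0 * k * k)); };
  // Flux through the half-point (x + 1/2, y).
  auto fluxX = [&](int x, int y) {
    const double gx = at(x + 1, y) - at(x, y);
    const double gy = 0.25 * ((at(x, y + 1) - at(x, y - 1)) + (at(x + 1, y + 1) - at(x + 1, y - 1)));
    const double mag = std::sqrt(gx * gx + gy * gy + eps);
    return cond(mag) * gx / mag;
  };
  // Flux through the half-point (x, y + 1/2).
  auto fluxY = [&](int x, int y) {
    const double gy = at(x, y + 1) - at(x, y);
    const double gx = 0.25 * ((at(x + 1, y) - at(x - 1, y)) + (at(x + 1, y + 1) - at(x - 1, y + 1)));
    const double mag = std::sqrt(gx * gx + gy * gy + eps);
    return cond(mag) * gy / mag;
  };
  std::vector<double> out(f);
  for (int y = 1; y < ny - 1; ++y)
    for (int x = 1; x < nx - 1; ++x) {
      const double div = fluxX(x, y) - fluxX(x - 1, y) + fluxY(x, y) - fluxY(x, y - 1);
      const double cx = 0.5 * (at(x + 1, y) - at(x - 1, y));
      const double cy = 0.5 * (at(x, y + 1) - at(x, y - 1));
      out[y * nx + x] = at(x, y) + dt * std::sqrt(cx * cx + cy * cy) * div;
    }
  return out;
}
```

A linear ramp passes through unchanged, since its normalized gradient field has zero divergence. A vertical step edge is softened on both sides: the dark side brightens and the bright side darkens, which is the positive diffusion described above.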

The first step required for using this filter is to include its header file.

Types should be selected based on the pixel types required for the input and output images. The image types are defined using the pixel type and the dimension.

The filter type is now instantiated using both the input image and the output image types. The filter object is created by the New() method.

The input image can be obtained from the output of another filter. Here, an image reader is used as source.

This filter requires three parameters: the number of iterations to be performed, the time step used in the computation of the level set evolution and the value of conductance. These parameters are set using the methods SetNumberOfIterations(), SetTimeStep() and SetConductance() respectively. The filter can be executed by invoking Update().

Typical values for the time step are 0.125 in 2D images and 0.0625 in 3D images. The number of iterations is usually around 5; more iterations result in further smoothing and increase the computing time linearly. The conductance parameter is usually around 3.0.

Figure 2.25 illustrates the effect of this filter on an MRI proton density image of the brain. In this example the filter was run with a time step of 0.125, 5 iterations and a conductance value of 3.0. The figure shows how homogeneous regions are smoothed and edges are preserved.

The following classes provide similar functionality:

The source code for this section can be found in the file

CurvatureFlowImageFilter.cxx.

The itk::CurvatureFlowImageFilter performs edge-preserving smoothing in a similar fashion to classical anisotropic diffusion. The filter uses a level set formulation in which the iso-intensity contours of an image are viewed as level sets, with the pixels of a particular intensity forming one level set. The level set function is then evolved under the control of a diffusion equation where the speed is proportional to the curvature of the contour:

$$I_t = \kappa |\nabla I| \qquad (2.13)$$

where κ is the curvature.

Areas of high curvature will diffuse faster than areas of low curvature. Hence, small jagged noise artifacts will disappear quickly, while large scale interfaces will be slow to evolve, thereby preserving sharp boundaries between objects. However, it should be noted that although the evolution at the boundary is slow, some diffusion will still occur. Thus, continual application of this curvature flow scheme will eventually result in the removal of information as each contour shrinks to a point and disappears.
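For a 2D image, the speed term of Eq. 2.13 can be written in partial derivatives as $\kappa|\nabla I| = \frac{I_{xx} I_y^2 - 2 I_x I_y I_{xy} + I_{yy} I_x^2}{I_x^2 + I_y^2}$. The sketch below takes one explicit step of that equation using central differences. It is a plain C++ illustration, not itk::CurvatureFlowImageFilter. It assumes unit spacing and leaves border pixels and pixels with (near) zero gradient unchanged. `curvatureFlowStep` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// One explicit step of I_t = kappa * |grad I| on a row-major nx*ny image with
// unit spacing, using central differences:
//   kappa*|grad I| = (Ixx*Iy^2 - 2*Ix*Iy*Ixy + Iyy*Ix^2) / (Ix^2 + Iy^2)
// Border pixels and pixels with (near) zero gradient are left unchanged.
std::vector<double> curvatureFlowStep(const std::vector<double>& I, int nx, int ny, double dt) {
  assert(static_cast<int>(I.size()) == nx * ny);
  auto at = [&](int x, int y) { return I[y * nx + x]; };
  std::vector<double> out(I);
  for (int y = 1; y < ny - 1; ++y)
    for (int x = 1; x < nx - 1; ++x) {
      const double Ix = 0.5 * (at(x + 1, y) - at(x - 1, y));
      const double Iy = 0.5 * (at(x, y + 1) - at(x, y - 1));
      const double Ixx = at(x + 1, y) - 2.0 * at(x, y) + at(x - 1, y);
      const double Iyy = at(x, y + 1) - 2.0 * at(x, y) + at(x, y - 1);
      const double Ixy = 0.25 * (at(x + 1, y + 1) - at(x + 1, y - 1)
                                 - at(x - 1, y + 1) + at(x - 1, y - 1));
      const double g2 = Ix * Ix + Iy * Iy;
      if (g2 < 1e-12) continue;  // curvature undefined on flat patches
      const double speed = (Ixx * Iy * Iy - 2.0 * Ix * Iy * Ixy + Iyy * Ix * Ix) / g2;
      out[y * nx + x] += dt * speed;
    }
  return out;
}
```

On a small bright square, the corner pixels lose intensity after one step; these are where the iso-contours bend most sharply. Pixels in the middle of the straight sides are left unchanged, and so is every pixel of a linear ramp. This is consistent with high-curvature regions diffusing faster.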

The first step required to use this filter is to include its header file.

Types should be selected based on the pixel types required for the input and output images.

With them, the input and output image types can be instantiated.

The CurvatureFlow filter type is now instantiated using both the input image and the output image types.

A filter object is created by invoking the New() method and assigning the result to an itk::SmartPointer.

The input image can be obtained from the output of another filter. Here, an image reader is used as source.

The CurvatureFlow filter requires two parameters: the number of iterations to be performed and the time step used in the computation of the level set evolution. These two parameters are set using the methods SetNumberOfIterations() and SetTimeStep() respectively. Then the filter can be executed by invoking Update().

Typical values for the time step are 0.125 in 2D images and 0.0625 in 3D images. The number of iterations is usually around 10; more iterations result in further smoothing and increase the computing time linearly. Edge-preserving behavior is not guaranteed by this filter: some degradation will occur on the edges and will increase as the number of iterations is increased.

If the output of this filter has been connected to other filters down the pipeline, updating any of the downstream filters will trigger the execution of this one. For example, a writer filter could be used after the curvature flow filter.

Figure 2.26 illustrates the effect of this filter on an MRI proton density image of the brain. In this example the filter was run with a time step of 0.25 and 10 iterations. The figure shows how homogeneous regions are smoothed and edges are preserved.

The following classes provide similar functionality:

The source code for this section can be found in the file

MinMaxCurvatureFlowImageFilter.cxx.

The MinMax curvature flow filter applies a variant of the curvature flow algorithm where diffusion is turned on or off depending on the scale of the noise that one wants to remove. The evolution speed is switched between min(κ,0) and max(κ,0) such that:

$$I_t = F |\nabla I| \qquad (2.14)$$

where F is defined as

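$$F = \begin{cases} \max(\kappa, 0) & \text{if Average} < \text{Threshold} \\ \min(\kappa, 0) & \text{if Average} \ge \text{Threshold} \end{cases} \qquad (2.15)$$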